research

sparse matrix algorithms and software

The large matrices that arise in real-world problems in science, engineering, and mathematics tend to be mostly zero, or sparse. Sparse matrix algorithms lie in the intersection of graph theory and numerical linear algebra. A graph represents the connections between variables in the mathematical model, such as the voltage across a circuit component, a link from one web page to another, the physical forces between two points in a mechanical structure, and so on, depending on the problem at hand. The numerical linear algebra arises because these matrices represent systems of equations whose solution tells us something about how the real-world problem behaves. Google’s page rank algorithm, for example, requires the computation of an eigenvector for a matrix with as many rows and columns as there are pages on the web.

My research spans the spectrum of theory, algorithms, and software development in the area of sparse matrix and graph algorithms. I’m not just interested in creating new methods and software prototypes to demonstrate those methods. I pursue the code further to produce better-than-commercial-quality software that embodies these new methods. Nine of my codes appear as Collected Algorithms of the ACM, where they undergo rigorous peer-review testing for their research contributions and software quality.

I just love to delve into the theory and algorithms of graph theoretic and applied mathematical algorithms, but I don’t stop there. I just love to code. I collaborate with others with the same passion -- and I hope to instill the same drive in my students (graduate and undergraduate).

NASA once performed a software engineering exercise in which they used the most extreme techniques to produce the highest quality code they could create. With a fault rate of 0.1 KLOC, they concluded that such reliability is achievable but not practical. The MathWorks utilizes about 120K lines of my codes in MATLAB: x=A\b when A is sparse, sparse factorization methods (LU, QR, and Cholesky), sparse matrix multiplication, Dulmage-Mendelsohn decomposition, and fill-reducing orderings. If printed out, 120K lines of code would equal about 2 reams of paper, front and back. With only 3 bugs reported in my codes used in MATLAB in 15 years, this reliability metric out-performs NASA’s best-achievable result by a factor of 3.

My codes are also fast (in both senses of the word: asymptotically and in practice). For example, the time to factorize a benchmark matrix from the European Space Operations Center (for tracking orbital debris) dropped from 11 days to 7 minutes when my QR factorization method was added to MATLAB.

FUTURE VISION

The coming decades of high-performance computational mathematics will be increasingly dominated by heterogeneous computing based on hybrid multiprocessors with of a mix of conventional CPU cores and high-throughput GPU cores. This trend is driven by power constraints and the need for ever-increasing performance. Although these new heterogeneous systems can deliver high performance with low energy requirements, new algorithms must be developed to exploit them. The most challenging problems for these systems require a mix of regular and irregular computation. My research into direct methods for sparse linear systems lies within this problem domain and is also a critical component for many applications in computational mathematics.

My experience makes me well-poised for future research in GPU-based algorithms. As a world leader in algorithmic research for sparse matrix computations, my work combines graph-theoretic methods and numerical techniques to create algorithms for solving problems in computational science that arise across a wide range of applications. I incorporate my novel theory and algorithms into robust library-quality open-source software, which is widely used in industry, academia, and government labs. In the past decade, I have published more software in the ACM Transactions on Mathematical Software than any other author.

A primary thrust for my current and future work focuses on creating parallel algorithms for sparse multifrontal LU, QR, and Cholesky factorization for hybrid multicore CPU/GPU multiprocessors. The workflow in these algorithms is an irregular tree, where the nodes are the bulk of the work (regular operations on dense frontal matrices of varying sizes), and the edges are the irregular assembly of data from child to parent. The GPUs operate on many frontal matrices at a time, and data is assembled from child to parent frontal matrix without moving it to the CPU. No other sparse direct method employs these strategies for GPU computing. By creating widely-used high-performance algorithms and software that encapsulate these ideas, this research will extend the capability of heterogeneous computing to a wide domain of applications in computational science and mathematics.

Below is a talk that summarizes much of my research. Click here for the slides.

Google relies my code in Ceres, their nonlinear solver. They use this to place every photo in its correct position for Google Street View, to create Google Photo Tours, and for 3D imagery in Google Earth. Below are some samples of Ceres (and thus SuiteSparse) at work. Without SuiteSparse, Ceres wouldn’t be able to do its job, and Google Street View would be a lesser experience as a result.

CURRENT SPONSORS

SuiteSparse ON linux or Mac

APPLICATIONS

My software is so widely used that I can’t keep track of its usage. You can get a glimpse of this yourself via simple Google searches for some of the unique names of my solvers, for example: COLAMD, SuiteSparse, UMFPACK, and CHOLMOD.

Some of the specific uses of my solvers in industry, government labs, and academia are listed below. This is a partial list since I don’t require registration of any kind for you to use my codes. If you are using my codes and would like a link to appear below, just drop me a line.

companies / commercial software

The MathWorks (MATLAB): general toolbox for scientific computation

Wolfram Research (Mathematica; also here or here):

general toolbox for scientific computation

Google Ceres (used by Street View): nonlinear least squares solver

Facebook: Oculus

Cadence Design Systems (OrCAD and Allegro): circuit simulation

IBM: circuit simulation

ANSYS: finite-element method

Apple

Berkeley Design Automation (their "fast sparse-matrix solver" is KLU):

circuit simulation

Geomodeling Solutions: geological modeling

MSC Software (NASTRAN): finite-element method

Orcina: structural modeling

ATopTech: circuit simulation

Tandent Vision: computer vision

Vision Map: computer vision

EnerNex: energy simulation

FEAT: finite-element method

Freescale: semiconductor simulation

Geograf: geophysical modeling

HRL Laboratories: circuit simulation

Intex: financial simulation

Lumerical: circuit simulation

Mentor Graphics: circuit simulation

SIMetrix: circuit simulation

COMSOL (FEMLAB): finite-element method

NVIDIA (CULA sparse): sparse matrix library for GPUs

WRSpice: circuit simulation

AgiSoft: PhotoScan - photogrammetric processing of images into 3D spatial data

Embotech: ECOS embedded conic solver

Government labs

HSL Mathematical Subroutine Library: general library for computational science

Xyce by Sandia National Labs: circuit simulation

Knolls Atomic Power Lab: nuclear reactor design

PETsc by Argonne National Lab: general toolbox for computational science

Amesos and Trilinos by Sandia National Labs:

general toolbox for computational science

FiPy by NIST: finite-element method

ISIS by USGS: astrogeology image processing (Moon, Mars, Mercury, ...)

ARKcode: ODE solver, part of SUNDIALS, by Lawrence Livermore National Lab

academia / open source

Google Ceres (used by Street View): nonlinear least squares solver

GEGL (used in Gimp): graphics/image processing

Julia: programming language with built-in support for sparse matrices

SuperLU: sparse matrix library

MUMPS: sparse matrix library

Fedora Linux: general math library distribution for Linux

Debian Linux: ditto

Arch Linux: ditto

Ubuntu Linux: ditto

OpenSUSE Linux: ditto

Scientific Linux: ditto

GNU Darwin: general math library distribution for MacOS X

DarwinPorts: ditto

Homebrew: ditto

Fink: ditto

Octave: general toolbox for computational science

R: statistical package

ROS: robot operating system

deal.II: finite-element method / differential equations library

scilab: general toolbox for computational science

CVX: convex optimization

CVXPY: Python package for convex optimization

SDPT3: semidefinite quadratic linear programming

CVXOPT: convex optimization

MBDyn: multi-body dynamics

Boost: C++ libraries

OpenSees: earthquake simulation

umfpackpy: Python bindings for UMFPACK

CGAL: computational geometry algorithms library

Kraken: Cray XT5 installation

FEniCS Project: differential equations

Eigen: general toolbox for computational science

Sage: Python-based mathematics software system

SciPy: Python-based software for mathematics, science, and engineering

NumPy: fundamental matrix computations for Python (see also SciPy).

SciPy.sparse: sparse matrix library for Python

Pysparse: sparse matrix library for Python

NLPy: nonlinear programming in Python

SfePy: finite-element method in Python

FreeFem++: finite-element method

Elmer: finite-element method

FLOODS/FLOOPS: semiconductor device/process modeling

MILAMIN: finite-element method

Minimum Sobolev Norm-based Methods for Elliptic PDEs, to appear.

ILUPACK: ILU preconditioning package.

JADAMILU: sparse eigenvector package.

Cubica: non-linear finite-element package.

LAMG: algebraic multigrid solver

LiveV: finite-element method

M.E.S.S: sparse solvers, model order reduction, optimal control.

AMDisS: finite-element toolbox

PDCO: primal-dual interior method for convex optimization

MLD2P4: parallel algebraic multi-level preconditioners

FEATFLOW: finite-element method

FEAST: finite-element method

OpenSLAM: robotics projects; 10 of 24 use sparse solvers (all in SuiteSparse):

g2o: optimization of graph-based nonlinear error functions. Their use of

CHOLMOD is notable because they rely on our optimal sparse Cholesky

update/downdate methods. Other packages: 2D-I-SLSJF, HOG-Man,

RobotVision, SLAM6D, SSA2D, MTK, SLOM, iSAM, TJTF

LSD-SLAM: large-scale direct monocular SLAM

SSBA: sparse bundle adjustment

libdogleg: large-scale nonlinear least-squares optimization

CUSPICE, a CUDA-accelerated NGSPICE: circuit simulation

A splitting method for optimal control

hpFEM/Hermes: finite-element toolbox

PATH solver: optimization package for mixed complementarity problems

MESA: stellar evolution

DSM2: California Bay Delta Simulation Model II

Caphe: optical circuit simulation

GLPK: GNU Linear Programming Kit

libdogleg: general purpose sparse optimizer to solve data fitting problems

iSAM: incremental Smoothing and Mapping for the SLAM (robotics) problem

also iSAM2.

GTSAM: the SLAM problem for autonomous flying vehicles

DOLFIN: automated finite element computing

OPM: Open Porous Media Project

MFEM: finite element solver

the SUITEsparse matrix collection

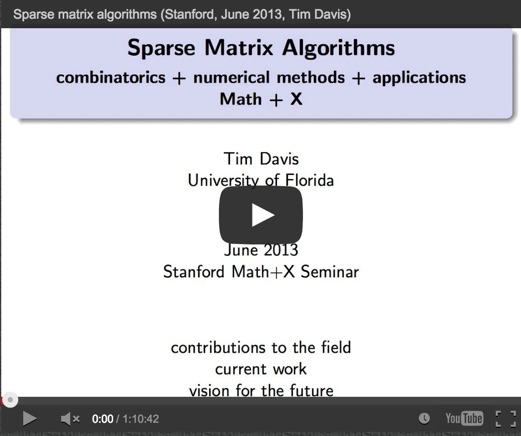

I also collect matrices from real applications (like those above) and make them available as benchmarks to researchers in sparse matrix algorithms and graph / network algorithms. They are incredibly useful because randomly generated matrices tend not to capture the structure of matrices that arise in practice. If you develop a method and test it with random problems, it will likely behave very differently when used in practice, on real problems.

The collection is now located at https://sparse.tamu.edu.

To maintain this collection, I collaborate with Yifan Hu at Yahoo! labs, who develops visualization techniques. These matrices are not only scientifically useful, they are beautiful as well. Below is a small sample (click here for the full collection).

COLLABORATORS

Iain Duff

John Gilbert

Esmond Ng

Patrick Amestoy

Bill Hager

Ekanathan Natarajan Palamadai

Stefan Larimore

Siva Rajamanickam

Yanqing Chen

Les Foster

Sanjay Ranka

Nuri Yeralan

Sharanyan Chetlur

Anil Rao

Begum Senses

Yifan Hu

Steve Rennich

Wissam Sid-Lakhdar

major users

SIAM plenary talk at the 2006 SIAM Annual Meeting. This talk gives an accessible introduction to my research, and serves as an overview of my graduate-level course on sparse matrix algorithms. Click here for the slides.