
Gallery of movie demos from (some) previous projects

Gallery of pictures from (some) previous projects


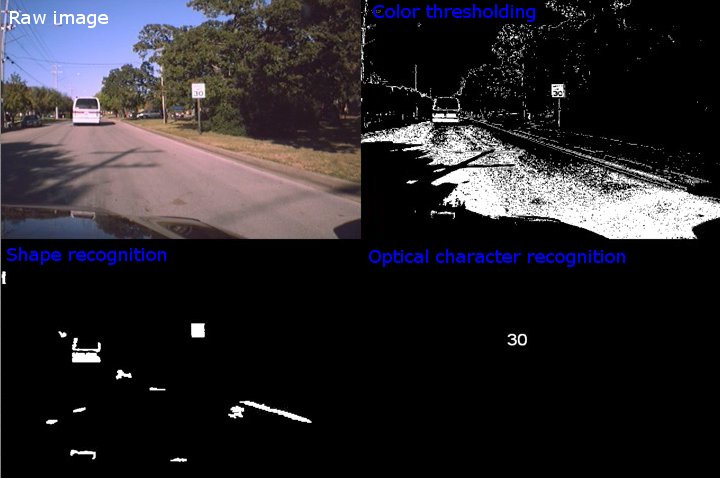

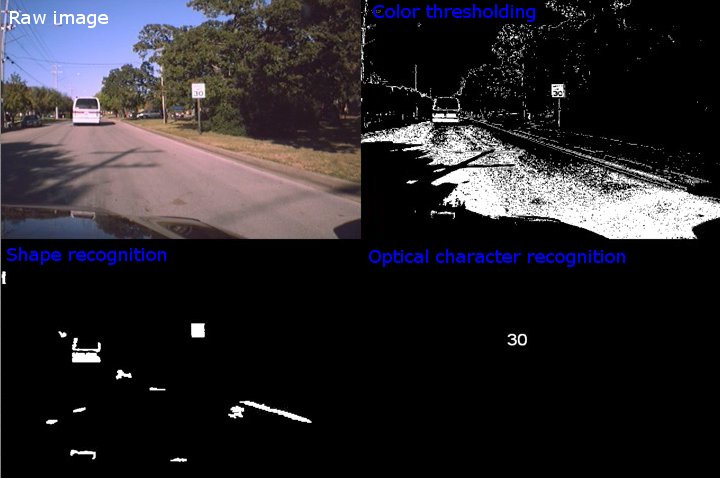

NI Speed Aware. The goal of this project was to warn drivers when they exceeded the posted speed limit. The system consisted of a Compact Vision System (donated by National Instruments), a webcam, and a GPS unit. The system employed computer vision techniques to detect speed limit signs, and optical character recognition to read the speed limit within. It would then compare this limit with the vehicle's velocity, measured with the GPS unit, and warn the driver if he or she exceeded the speed limit. The system was tested successfully on the road (!).
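The comparison step of Speed Aware can be illustrated with a minimal sketch. It assumes the OCR stage returns a text string and that the GPS reports speed in knots (as NMEA receivers commonly do); the function names `parse_limit` and `should_warn` are hypothetical and are not taken from the project.

```python
import re

KNOTS_TO_MPH = 1.15078  # one knot expressed in miles per hour


def parse_limit(ocr_text):
    """Extract a 2- or 3-digit speed limit from OCR output, or None if absent."""
    match = re.search(r"\b(\d{2,3})\b", ocr_text)
    return int(match.group(1)) if match else None


def should_warn(limit_mph, gps_speed_knots, tolerance_mph=0.0):
    """Return True when the GPS speed exceeds the last limit that was read."""
    if limit_mph is None:  # no sign has been read yet
        return False
    return gps_speed_knots * KNOTS_TO_MPH > limit_mph + tolerance_mph
```

The `tolerance_mph` parameter is an illustrative addition for absorbing GPS noise; the original system may have handled this differently.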

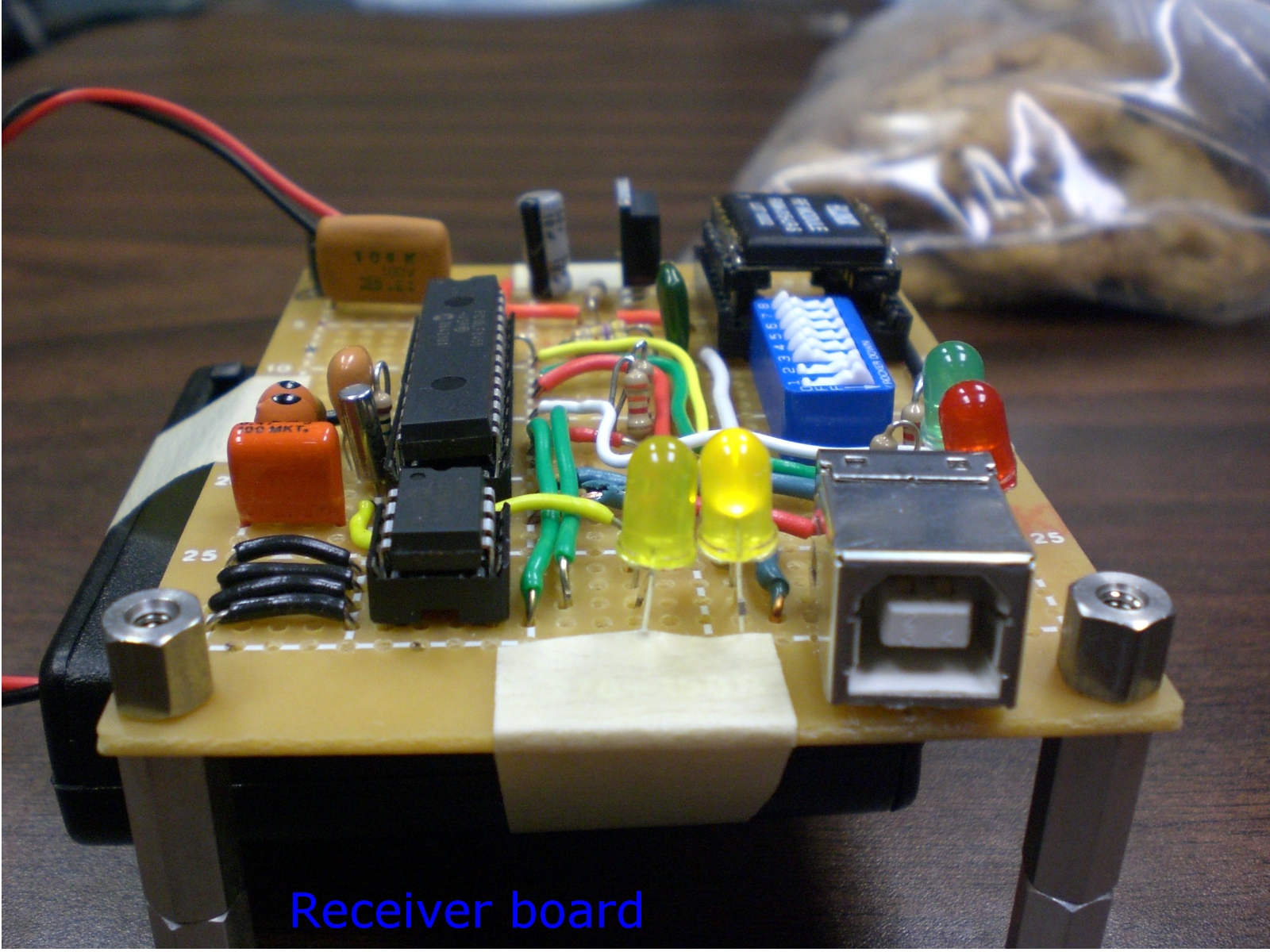

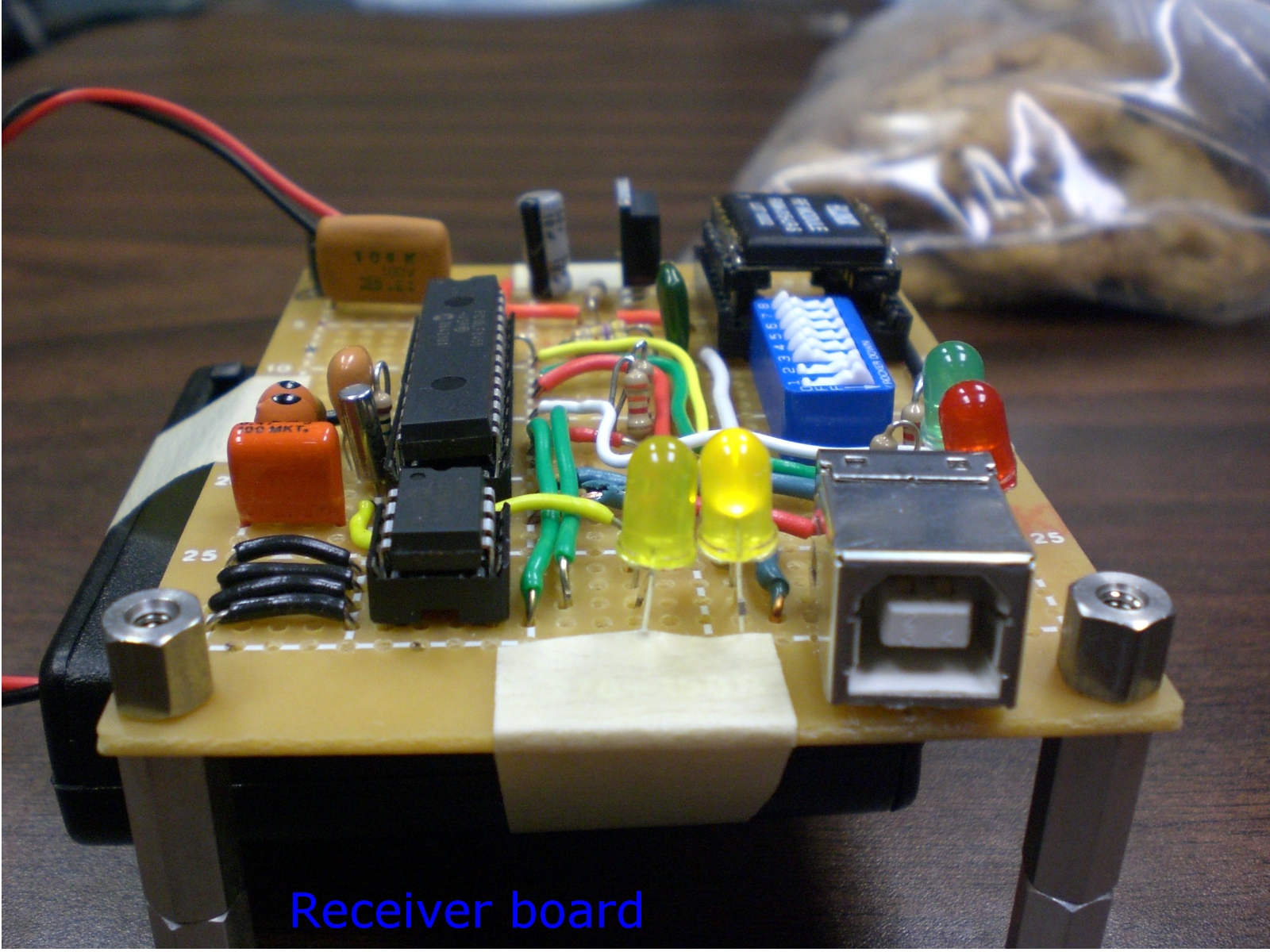

Pet deterrent. The goal of this project was to develop a system that would restrict access for pets in a home. The system consisted of a series of RF transmitters, placed at certain locations in the home, and an RF receiver, which was worn by the pet. Each transmitter would send a unique code at specified intervals. The receiver would compare the transmitter ID with a lookup table of allowed and disallowed sites; if the site was disallowed, the receiver would send a deterrent signal to the pet (not implemented). The receiver also tracked the time stamps of the different transmitters detected throughout the day, and uploaded them to a PC client application through USB.
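The receiver logic described for the pet deterrent (table lookup plus time-stamped logging) can be sketched as follows. The table contents, the `Receiver` class, and the choice to treat unknown transmitters as allowed are all assumptions made for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical lookup table: transmitter ID -> True if the pet may be there.
ALLOWED_SITES = {0x01: True, 0x02: False, 0x03: True}


@dataclass
class Receiver:
    allowed_sites: dict
    log: list = field(default_factory=list)

    def on_code(self, transmitter_id, timestamp):
        """Log the sighting and report whether a deterrent should fire."""
        self.log.append((timestamp, transmitter_id))
        # Unknown transmitters are treated as allowed (an assumption).
        return not self.allowed_sites.get(transmitter_id, True)
```

In this sketch the `log` list stands in for the time stamps that the receiver later uploaded to the PC client.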

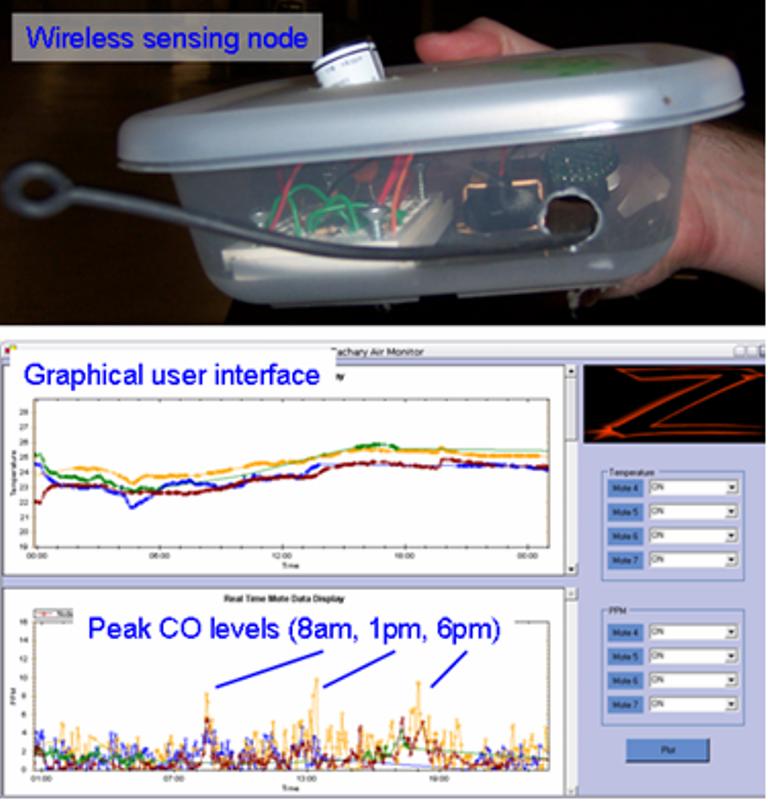

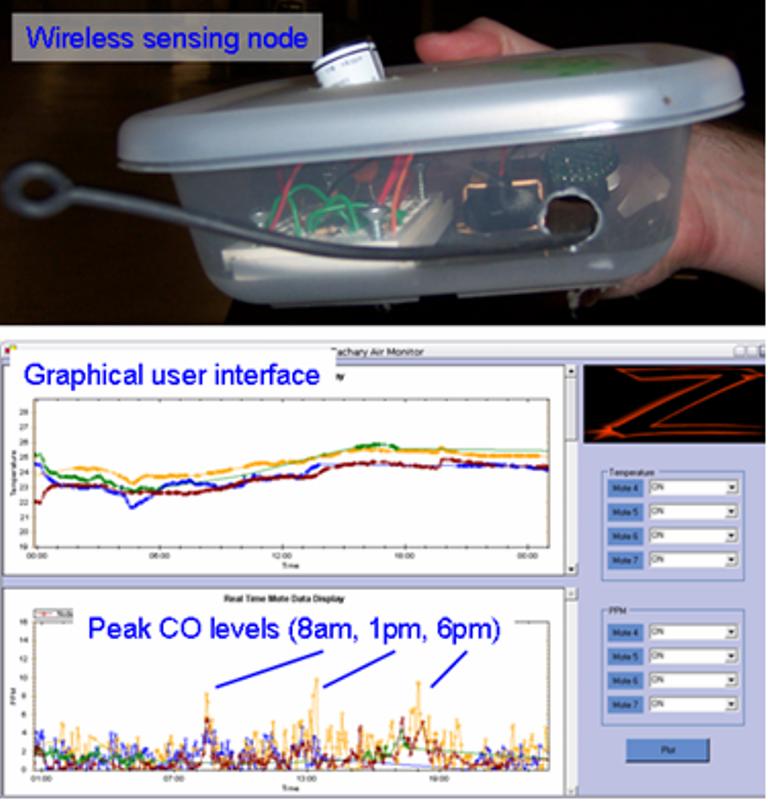

Air quality monitoring with a network of chemical sensors. The goal of this project was to develop a sensor network for monitoring carbon monoxide (CO) emissions inside campus buildings. The system consisted of an array of Crossbow® motes, each mote housing an electrolytic CO sensor. The network was able to detect CO concentrations in the low parts-per-million range, and provided 24/7 online data visualization through the campus network.

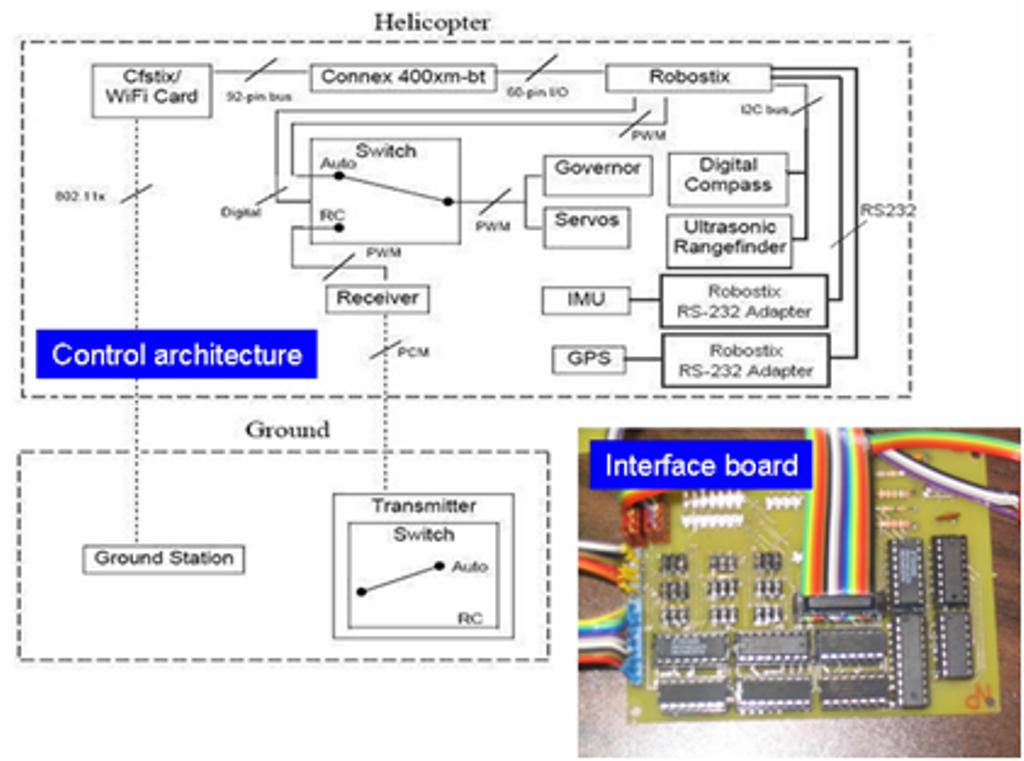

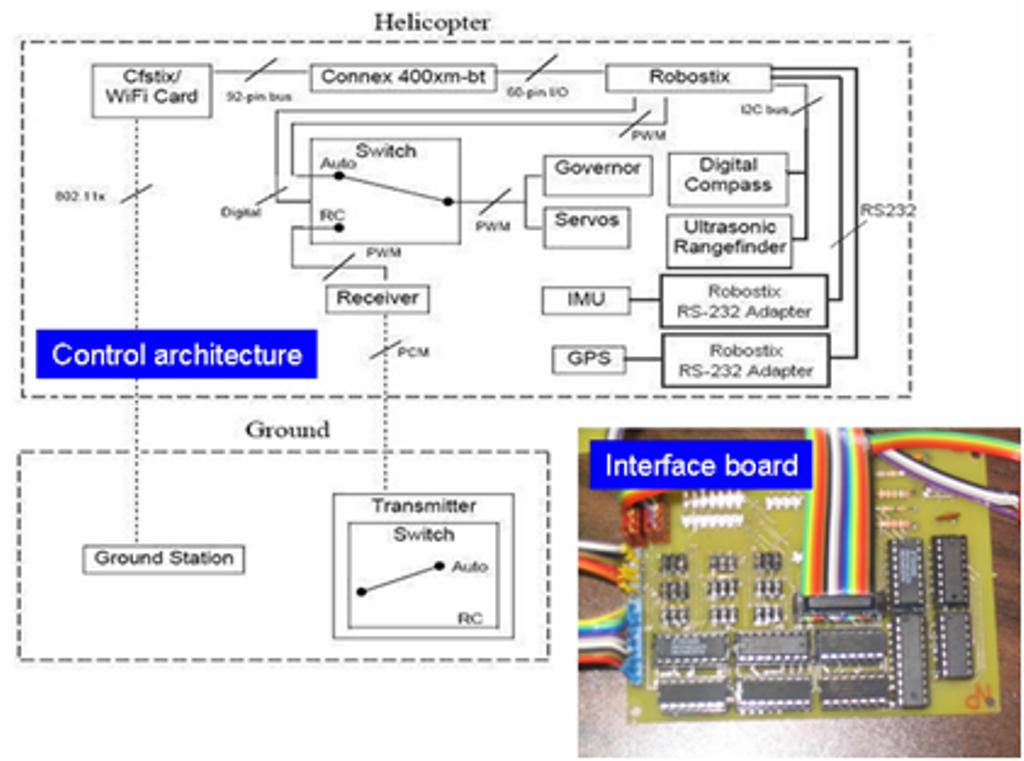

Fly-by-wire control for a robotic helicopter. The objective of this project was to develop an autonomous controller for a small helicopter vehicle, along with a fast switch to allow the user to regain manual control when needed. The students designed a microcontroller system, also based on the Gumstix/Robostix platform, which included pulse-width-modulated controls for five servo motors, and inputs from an Inertial Measurement Unit (IMU), a Global Positioning System (GPS), an ultrasonic rangefinder, and a digital compass. The final product was turned over to Professor Dez Song in our department, who will use it on his robotic helicopter.

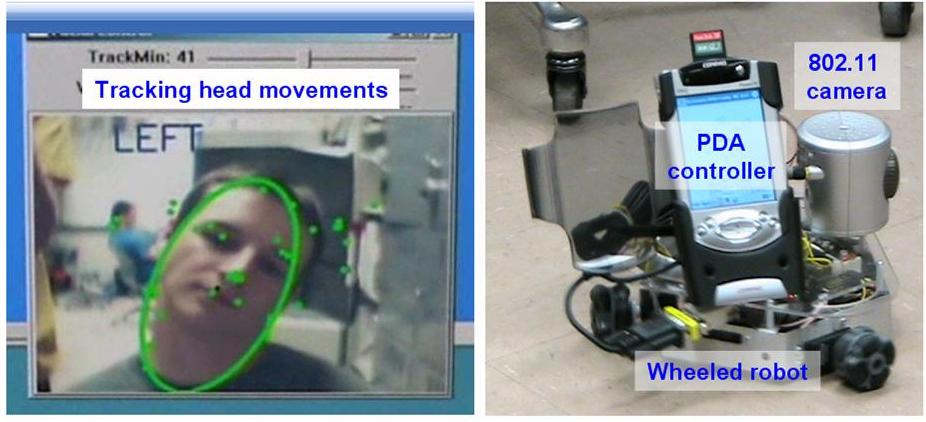

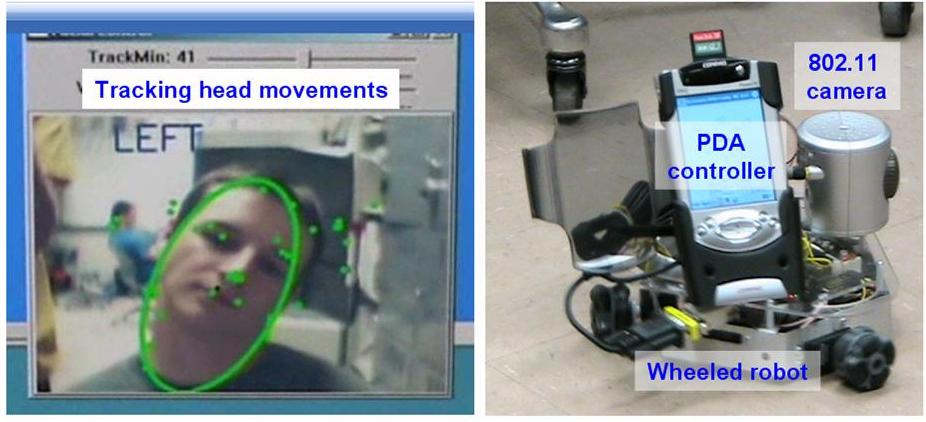

Visual servoing. The goal of this project was to develop a human-robot interface that would allow a user to explore a remote environment with a mobile robot. The system consisted of a small wheeled robot with a wireless camera and a wireless PDA, and a workstation with a webcam. The user would receive a live feed from the robot camera, and would control the robot movements by making prespecified head movements (see figure) in front of the webcam. Right/left head shakes would command the robot to make right/left turns; up/down head nods would command the robot to move forward or stop. A watchdog safety thread ran on the robot's PDA to stop the robot if communications with the user were disrupted.
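The gesture-to-command mapping and the watchdog idea can be sketched together. This is not the project's code: the gesture names, the assumption that an up nod means "forward" and a down nod means "stop", the timeout value, and the polling loop (used here in place of a dedicated thread so the example stays testable) are all illustrative.

```python
import time

# Hypothetical gesture labels mapped to robot commands.
COMMANDS = {
    "shake_right": "turn_right",
    "shake_left": "turn_left",
    "nod_up": "forward",
    "nod_down": "stop",
}


class Watchdog:
    """Tracks how long it has been since the last message from the user."""

    def __init__(self, timeout_s, clock=time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock
        self.last_seen = clock()

    def feed(self):
        self.last_seen = self.clock()

    def expired(self):
        return self.clock() - self.last_seen > self.timeout_s


def robot_step(watchdog, gesture=None):
    """One control-loop pass: return a command, or None to keep the current one."""
    if gesture is not None:
        watchdog.feed()  # any received gesture proves the link is alive
        return COMMANDS.get(gesture)
    if watchdog.expired():
        return "stop"  # link lost: halt the robot
    return None
```

Injecting the clock makes the timeout behaviour easy to exercise without waiting in real time.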

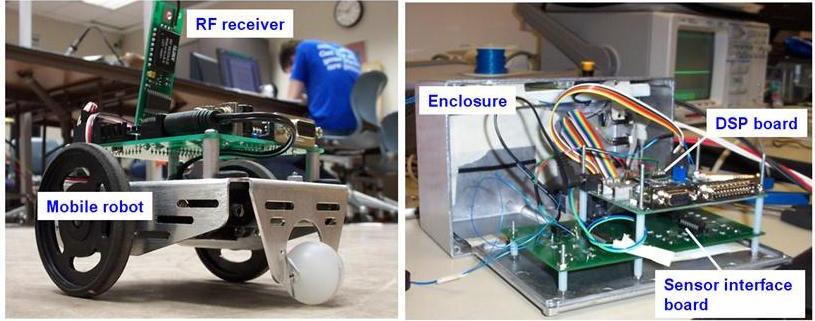

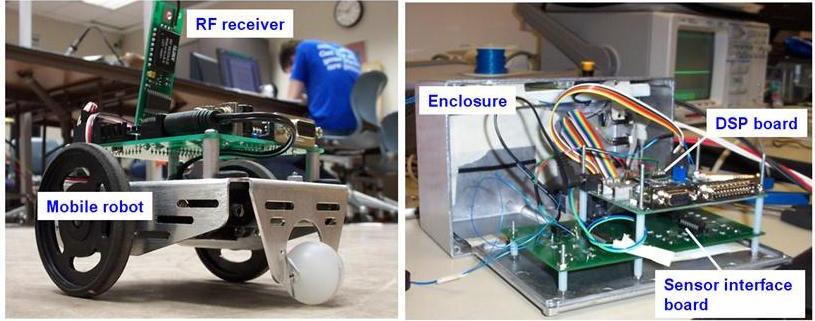

Robot control with EMG signals. The goal of this project was to develop a human-robot interface based on physiological signals. The system consisted of an array of electromyogram (EMG) sensors, which detected electrical activity in different muscles of the user, a DSP board that performed pattern recognition on the EMG signals, and a small mobile robot controlled via RF. The user would train the pattern classifier on a subset of hand gestures which, when expressed at a later time, would serve as individual commands for the robot.
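The train-then-recognize workflow can be illustrated with a nearest-centroid classifier. The description does not say which classifier ran on the DSP board, so this is only a stand-in; the gesture labels and the two-channel feature vectors are invented for the example.

```python
import math


def train_centroids(samples):
    """samples maps gesture label -> list of feature vectors; returns the mean per label."""
    centroids = {}
    for label, vectors in samples.items():
        n = len(vectors)
        centroids[label] = [sum(column) / n for column in zip(*vectors)]
    return centroids


def classify(centroids, features):
    """Return the label whose centroid is closest (Euclidean) to the features."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Hypothetical training data: per-channel EMG amplitude for two gestures.
training = {
    "fist": [[0.9, 0.1], [0.8, 0.2]],
    "open": [[0.1, 0.9], [0.2, 0.8]],
}
model = train_centroids(training)
```

A recognized label would then be looked up in a gesture-to-command table before being sent to the robot over RF.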

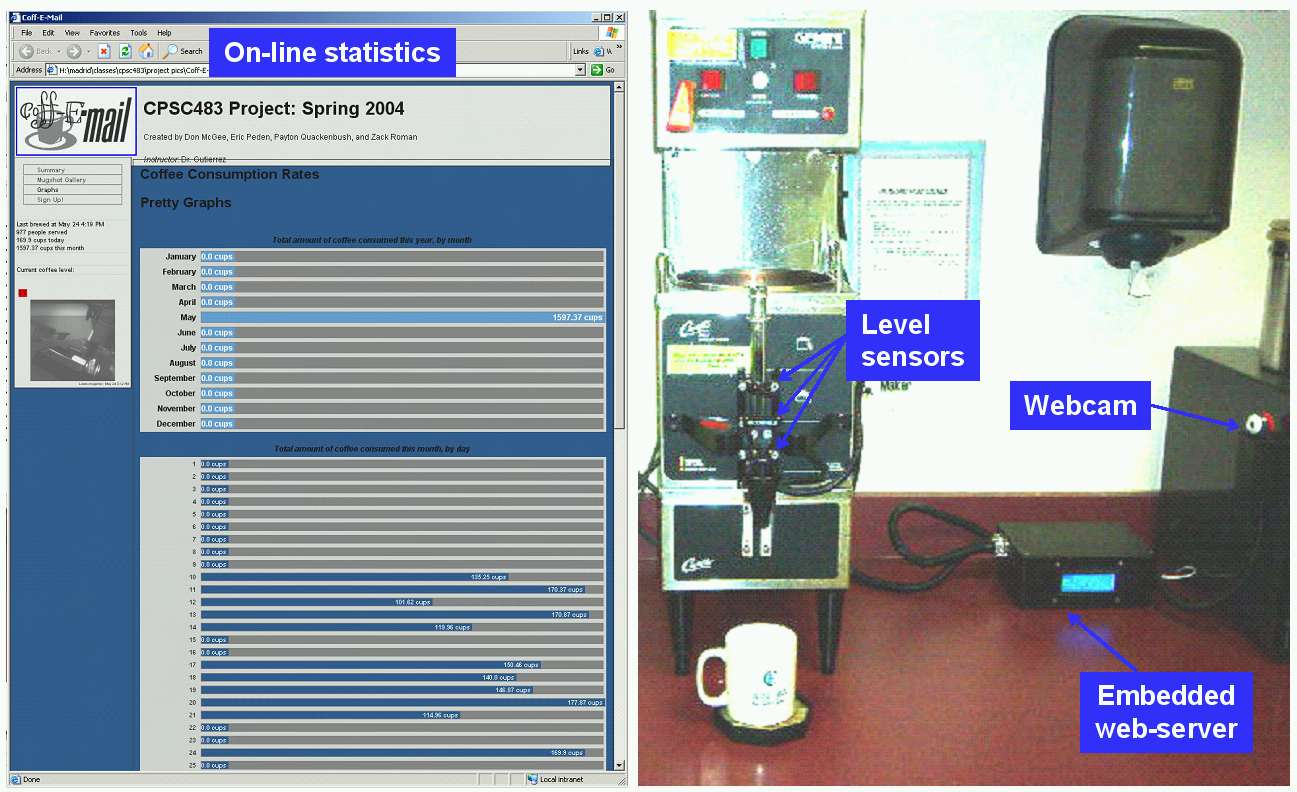

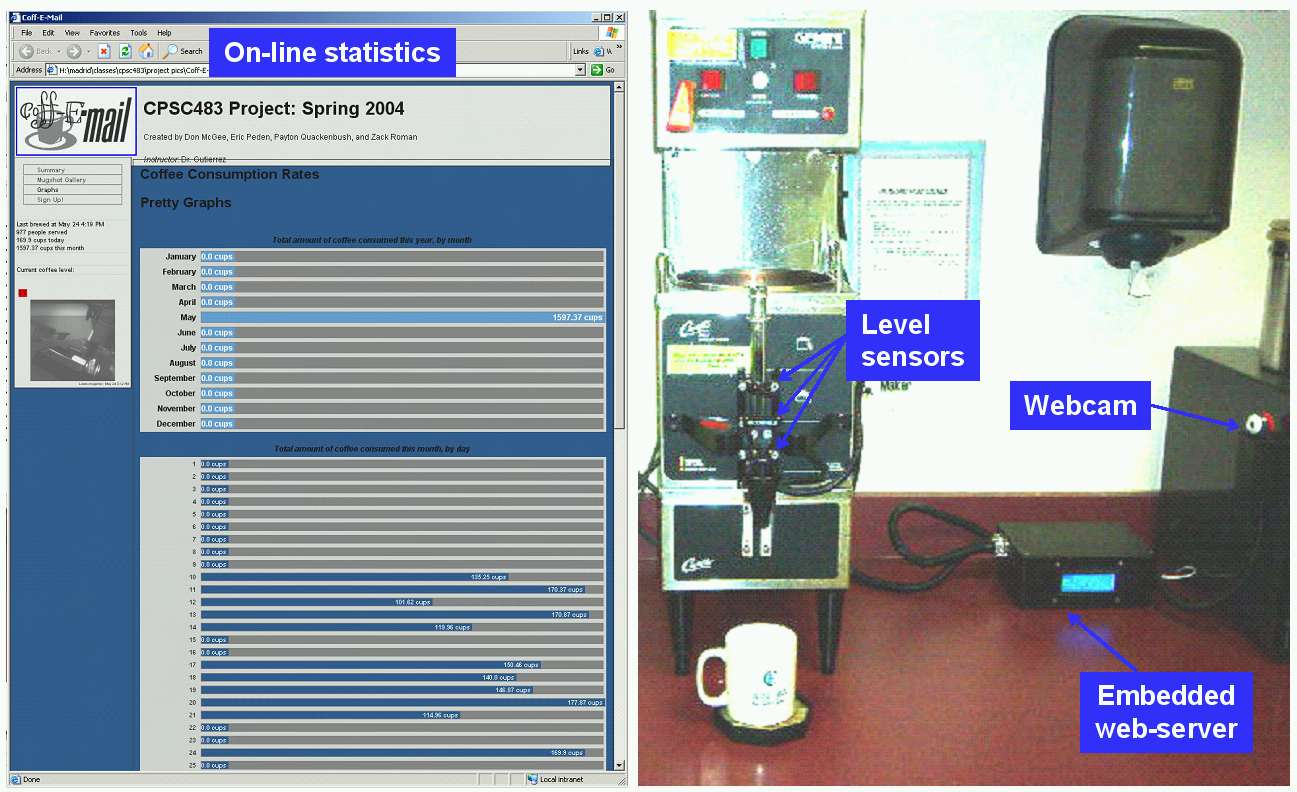

Coff-e-mail. The goal of this project was to develop a low-cost embedded web server that would keep logs of coffee consumption in the department’s student lounge. The system contained photocells for measuring the current coffee level, motion sensors for detecting when coffee was being poured, and a low-cost camera to capture an image of the coffee mug. The system kept statistics online and also informed registered users when a fresh pot of coffee was being brewed.

Motion tracking with infra-red imaging. The goal of this project was to develop a motion capture system based on IR imaging. The students designed a head-mounted frame to allow recovery of 3D head rotations (roll, pitch and yaw), developed a graphical user interface, automated a number of otherwise time-consuming tasks (e.g., initial detection of markers on the face), and integrated the audio-visual capture system with an MPEG-4 compliant facial animation engine.

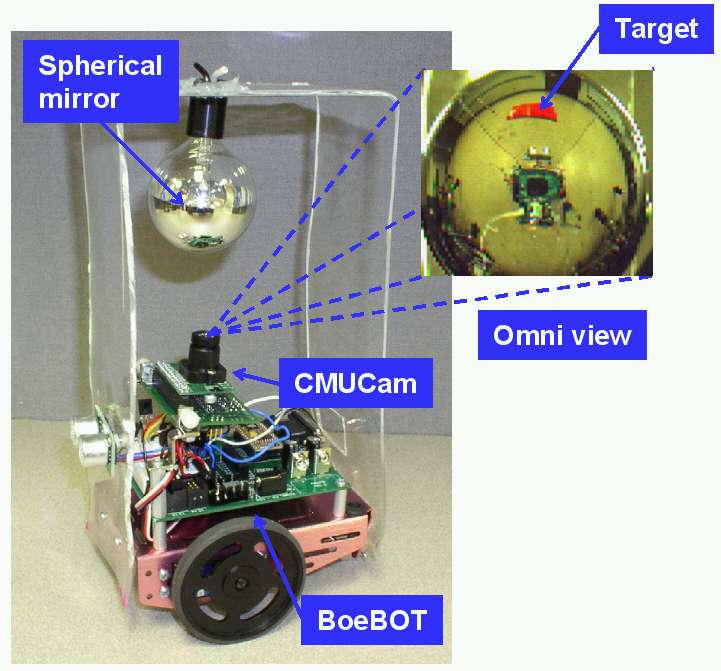

Omni-Directional Vision System for Mobile Robots. The objective of this project was to design and integrate an omni-directional vision system (ODVS) for a mobile robot. The ODVS consisted of a CCD array (based on the CMUCam) coupled with a spherical mirror (a chromed light bulb) that generated a 360-degree view of the surroundings of the robot. The students were able to integrate the ODVS with a miniature mobile robot, and track a moving colored target in real time.

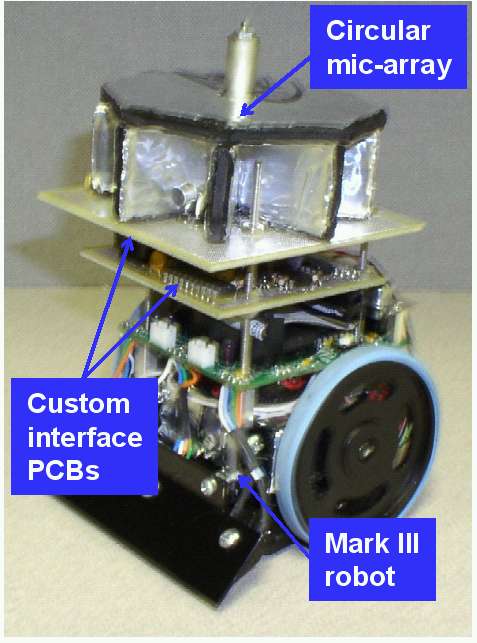

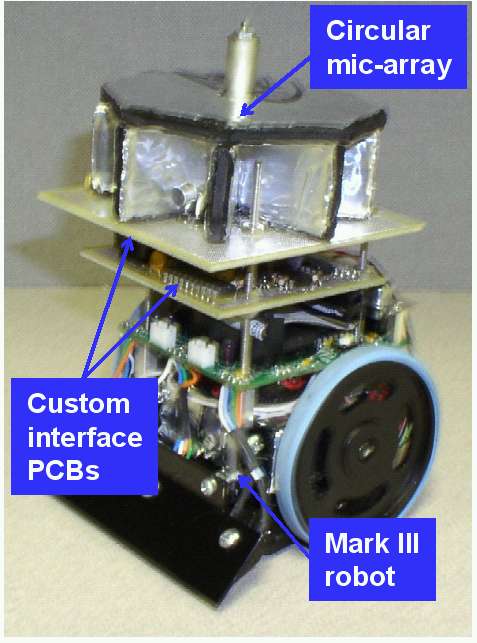

Acoustic Navigation for Mobile Robots. The goal of this project was to develop a microphone array to allow a miniature mobile robot to detect acoustic beacons. The array consisted of eight miniature microphones in a ring configuration to provide 360-degree sound localization. The students designed a custom printed circuit interface board for the microphones, which also contained a programmable filter bank that allowed the robot to “listen” to eight different center frequencies.
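One simple way to turn readings from a ring of microphones into a bearing is an energy-weighted vector sum, sketched below. The description does not state which localization method the students used, so this is an assumed technique; it also assumes the microphones are evenly spaced, with microphone 0 at 0 degrees and angles increasing with the index.

```python
import math


def estimate_bearing(energies):
    """Return the bearing in degrees of the dominant sound, or None if ambiguous.

    energies[i] is the signal energy at microphone i in one filter-bank channel.
    """
    n = len(energies)
    x = sum(e * math.cos(2 * math.pi * i / n) for i, e in enumerate(energies))
    y = sum(e * math.sin(2 * math.pi * i / n) for i, e in enumerate(energies))
    if math.hypot(x, y) < 1e-9:  # energies cancel out: no clear direction
        return None
    return math.degrees(math.atan2(y, x)) % 360.0
```

Running the estimate once per filter-bank channel would give one bearing per beacon frequency.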

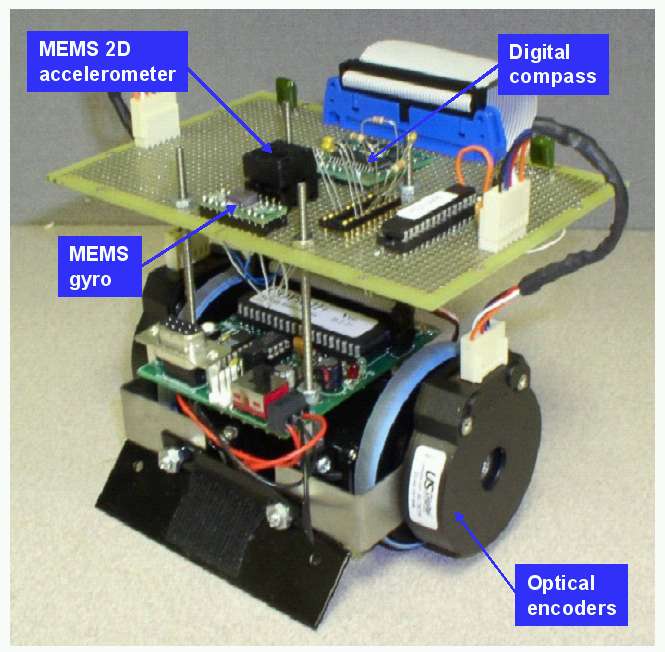

Dead-Reckoning System for Mobile Robots. The objective of this project was to design a dead-reckoning system for mobile robots based on odometry, inertial navigation and a digital compass. Odometry was achieved with optical encoders attached to the wheel axles of a miniature robot. The inertial navigation consisted of a 2-axis MEMS accelerometer and a MEMS gyroscope, which allowed the robot to measure linear acceleration and angular rate. These two dead-reckoning modalities were complemented with information from a digital compass. The students also investigated sensor fusion strategies to combine information from all these sensors.
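The odometry and fusion ideas can be sketched as follows, assuming a differential-drive robot and using a complementary filter as one common fusion strategy. The description does not say which strategies the students investigated; the function names, the `wheel_base` parameter, and the `alpha` weight are illustrative.

```python
import math


def wrap(angle):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


def odometry_step(x, y, heading, d_left, d_right, wheel_base):
    """Advance the pose given the distance travelled by each wheel (same units as x, y)."""
    d_center = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / wheel_base
    mid_heading = heading + d_theta / 2.0  # midpoint approximation of the arc
    x += d_center * math.cos(mid_heading)
    y += d_center * math.sin(mid_heading)
    return x, y, wrap(heading + d_theta)


def fuse_heading(prev_heading, gyro_rate, dt, compass_heading, alpha=0.98):
    """Complementary filter: integrate the gyro, then nudge toward the compass."""
    predicted = prev_heading + gyro_rate * dt
    error = wrap(compass_heading - predicted)
    return wrap(predicted + (1.0 - alpha) * error)
```

With `alpha` close to 1 the gyroscope dominates over short intervals, while the compass slowly corrects the drift that accumulates from integration.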