Talks hosted by the Sketch Recognition Lab

Lab Director: Dr. Tracy Hammond

| Reinforcement Learning for Patient-Specific Propofol Anesthesia: A Human Volunteer Study Abstract: Classical control methods enjoy widespread industrial application due to their simplicity in design and implementation, as well as their success in regulating a wide range of systems. However, some processes may not lend themselves to these conventional control methods. Such processes may involve high-dimensional inputs, non-negligible nonlinearities in transfer functions, nonstationary time parameters, and general uncertainty. More robust methods, such as fuzzy logic, nonlinear control, and optimal control theories, may be applied to these tasks; however, these methods entail increased complexity in design and implementation. As a result, alternative control methods have been studied; for example, recent advances in intelligent control techniques have successfully addressed some of the challenges faced in "real-world" control tasks. Most notably, neural networks and evolutionary computing have been applied to difficult industrial processes with good results. In medicine, both neuro-fuzzy techniques and classical control methods have demonstrated clinical relevance in regulating complex physiological processes. Reinforcement learning (RL), another intelligent control method, has demonstrated proficiency in difficult control problems but has no reported application to clinical control tasks. To evaluate the suitability of reinforcement learning for a life-critical control task, a human volunteer study of RL-controlled, closed-loop intravenous sedation was performed in the Stanford School of Medicine Department of Anesthesia. Under an IRB-approved protocol, 15 healthy volunteers underwent propofol anesthesia at levels of sedation routinely associated with general anesthesia.
Over the course of each 90-minute study, an RL control system achieved induction and maintenance of propofol-induced hypnosis at two different target levels of anesthesia, as measured by BIS (the bispectral index), an EEG-based indicator of propofol effect. The RL control system demonstrated clinically suitable performance in the maintenance of propofol-induced hypnosis, surpassing all comparable performance metrics published in the anesthesia literature. In addition, the agent achieved generalized control that compensated for varying degrees of intra- and inter-subject variation in propofol effect during maintenance, suggesting that RL control may be applied to diverse populations. Finally, the dynamic conditions of induction and target change were well controlled. Collectively, these results demonstrate the clinical efficacy of reinforcement learning and suggest that RL control can be used to improve patient care. Bio: Dr. Brett L. Moore received his Ph.D. in Computer Science from Texas Tech University in 2010 and holds a Master's degree in Computer Science, in addition to undergraduate degrees in Computer Science and Technical Communication. Dr. Moore's graduate research was twice recognized with scholarships from the ARCS Foundation, and his doctoral dissertation was recognized as outstanding by the Texas Tech University graduate school. During his graduate career, Dr. Moore taught undergraduate computer science courses, including C++, data structures, algorithms, and systems programming, at Texas Tech University and Texas State University - San Marcos. He is an active member of the IEEE Central Texas Chapter in Engineering in Medicine and Biology. Dr. Moore is currently employed as a Senior Product Development Engineer in Research and Development at KCI, a medical device manufacturer in San Antonio, TX.
In the Embedded Systems Group at KCI, he has served in lead software roles supporting the development of new wound-healing systems, as well as the evaluation of emerging critical-care technologies. Before joining KCI, Dr. Moore enjoyed a 15-year career in medical device research and development, which included the design and development of various critical-care technologies for maintaining patient normothermia and for patient-specific anesthesia. As a result of these research activities, Dr. Moore has published a number of peer-reviewed journal articles and has been invited to speak at international conferences in medicine, most notably those of the American Society of Anesthesiologists. His general research interests lie in solving complex problems in medicine by applying intelligent systems techniques. Currently, one of Dr. Moore's intelligent systems, a system for patient-specific intravenous anesthesia, is being evaluated in a multi-center clinical trial between the Stanford University School of Medicine and University Medical Center Groningen (the Netherlands). | |
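The abstract does not give the controller's state, action, or reward definitions. The following is therefore only a generic illustration of RL control: a minimal tabular Q-learning sketch on a toy setpoint-tracking problem. The discretized error states, the three dose adjustments, and the deterministic dynamics in `step` are hypothetical assumptions. They are not the patient model or the agent used in the Stanford study.

```python
from __future__ import annotations

# Toy setpoint-tracking problem (hypothetical, for illustration only).
STATES = range(-2, 3)   # discretized error: observed value minus target (e.g., BIS minus target)
ACTIONS = (-1, 0, 1)    # dose adjustment: decrease, hold, increase


def step(state: int, action: int) -> tuple[int, float]:
    """Hypothetical deterministic dynamics: increasing the dose lowers the error one bucket."""
    next_state = max(-2, min(2, state - action))
    reward = -abs(next_state)  # penalize distance from the target
    return next_state, reward


def train_q(sweeps: int = 200, alpha: float = 0.5, gamma: float = 0.9) -> dict[tuple[int, int], float]:
    """Tabular Q-learning, visiting every state-action pair once per sweep."""
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(sweeps):
        for s in STATES:
            for a in ACTIONS:
                s_next, r = step(s, a)
                best_next = max(q[(s_next, a2)] for a2 in ACTIONS)
                # Q-learning update: move Q(s, a) toward r + gamma * max_a' Q(s', a')
                q[(s, a)] += alpha * (r + gamma * best_next - q[(s, a)])
    return q


def greedy_policy(q: dict[tuple[int, int], float]) -> dict[int, int]:
    """Pick the highest-valued action in each state."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}


policy = greedy_policy(train_q())
print(policy)  # {-2: -1, -1: -1, 0: 0, 1: 1, 2: 1}
```

The learned policy raises the dose when the error is positive (too light), lowers it when negative (too deep), and holds at the target. A clinical controller would need far richer state and reward definitions than this toy.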

| Sketch Recognition with Multiscale Stochastic Models of Temporal Patterns Abstract: Sketching is a natural mode of interaction used in a variety of settings. For example, people sketch during early design and brainstorming sessions to guide the thought process; when we communicate certain ideas, we use sketching as an additional modality to convey ideas that cannot be put into words. The emergence of hardware such as PDAs and Tablet PCs has made it possible to capture freehand sketches, enabling the routine use of sketching as an additional human-computer interaction modality. But despite the availability of pen-based information capture hardware, relatively little effort has been put into developing software capable of understanding and reasoning about sketches. To date, most approaches to sketch recognition have treated sketches as images (i.e., static finished products) and have applied vision algorithms for recognition. However, unlike images, sketches are produced incrementally and interactively, one stroke at a time, and their processing should take advantage of this. In this talk, I will describe ways of doing sketch recognition by extracting as much information as possible from temporal patterns that appear during sketching. I will present a sketch recognition framework based on hierarchical statistical models of temporal patterns. I will show that in certain domains, the stroke orderings used in the course of drawing individual objects contain temporal patterns that can aid recognition. Building on this work, I will illustrate how sketch recognition systems can use knowledge of both common stroke orderings and common object orderings. I will present a statistical framework based on Dynamic Bayesian Networks that can learn temporal models of object-level and stroke-level patterns for recognition. This framework supports multi-object strokes and multi-stroke objects, and it allows interspersed drawing of objects, relaxing the assumption that objects are drawn one at a time.
The system also supports real-valued feature representations using a numerically stable recognition algorithm. I will present recognition results for hand-drawn electronic circuit diagrams. The results show that modeling temporal patterns at multiple scales provides a significant increase in correct recognition rates, with no added computational penalties. Bio: Dr. Sezgin graduated summa cum laude with Honors from Syracuse University in 1999. He received his MS in 2001 and his PhD in 2006, both from the Massachusetts Institute of Technology. He is currently a Postdoctoral Research Associate in the Rainbow group at the University of Cambridge Computer Laboratory. His research interests include intelligent human-computer interfaces, multimodal sensor fusion, and HCI applications of machine learning. | |
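The following sketch shows how stroke ordering alone can help separate object classes. It is a much simpler model than the Dynamic Bayesian Network framework described in the talk: one first-order Markov chain over stroke labels per object class, trained with add-one smoothing, with classification by maximum log-likelihood. The stroke labels (`wire`, `zig`, `zag`, `plate`) and the training orderings are hypothetical toy data, not results from the talk.

```python
from __future__ import annotations

import math
from collections import Counter

START = "<start>"  # pseudo-label marking the beginning of an object's stroke sequence


def train_chain(sequences: list[list[str]], vocab: set[str]) -> dict[tuple[str, str], float]:
    """Estimate first-order stroke-transition probabilities with add-one smoothing."""
    counts: Counter = Counter()
    totals: Counter = Counter()
    for seq in sequences:
        prev = START
        for stroke in seq:
            counts[(prev, stroke)] += 1
            totals[prev] += 1
            prev = stroke
    return {
        (p, s): (counts[(p, s)] + 1) / (totals[p] + len(vocab))
        for p in vocab | {START}
        for s in vocab
    }


def log_likelihood(model: dict[tuple[str, str], float], seq: list[str]) -> float:
    """Log-probability of a stroke ordering under one object's chain."""
    prev, total = START, 0.0
    for stroke in seq:
        total += math.log(model[(prev, stroke)])
        prev = stroke
    return total


def classify(models: dict[str, dict[tuple[str, str], float]], seq: list[str]) -> str:
    """Return the object label whose chain best explains the stroke ordering."""
    return max(models, key=lambda label: log_likelihood(models[label], seq))


# Hypothetical training data: stroke orderings for two circuit symbols.
training = {
    "resistor": [["wire", "zig", "zag", "zig", "zag", "wire"], ["wire", "zig", "zag", "zig", "wire"]],
    "capacitor": [["wire", "plate", "plate", "wire"], ["plate", "plate", "wire", "wire"]],
}
vocab = {s for seqs in training.values() for seq in seqs for s in seq}
models = {label: train_chain(seqs, vocab) for label, seqs in training.items()}
print(classify(models, ["wire", "zig", "zag", "wire"]))  # resistor
```

Stroke labels outside the training vocabulary raise a `KeyError` here. A real system would also need to segment strokes into objects, which this sketch assumes has already happened.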

| Properties of Hand-Drawn Digital Logic Diagrams Abstract: Programs that can recognize students' hand-drawn diagrams have the potential to revolutionize education by breaking down the barriers between diagram creation and simulation. Much recent work (including much of our own) focuses on building robust recognition engines, but researchers have paid surprisingly little attention to the potential users of these systems. In this talk I will discuss a study in which we examined freely drawn digital logic diagrams created by students in an electrical engineering class. Our goal was to understand how to construct a recognition engine that is robust to the way students actually draw in practice. Our analysis reveals considerable variation in drawing style between students, and it shows that the standard drawing-style restrictions that sketch recognition systems impose to aid recognition generally do not match the way students draw. I will discuss the implications of these results for the design of sketch recognition systems. Bio: Christine Alvarado is an assistant professor of computer science at Harvey Mudd College. Her primary research interests lie in the intersection of artificial intelligence and human-computer interaction. She focuses on building robust, free-sketch recognition-based interfaces and exploring how to resolve the user interface challenges associated with these interfaces. In addition to her sketch understanding research, Prof. Alvarado is actively involved in outreach efforts to increase the number of women in computer science, and in designing a novel introductory computer science curriculum that appeals to a broad scientific audience. Prof. Alvarado received her undergraduate degree in computer science from Dartmouth in 1998. She received her S.M. and Ph.D. in computer science from MIT in 2000 and 2004, respectively. | |

| Hypothesis Testing and Model Selection for Clustering Abstract: Clustering algorithms are widely used in data analysis, but they are difficult to apply when the appropriate number of clusters is unknown. This model selection question is difficult to answer in unsupervised learning, since no training signal is available. In this talk I will introduce several methods of model selection for popular clustering algorithms such as K-means and Gaussian mixtures. The methods I'll present are primarily based on statistical hypothesis testing at both local and global levels. The tests incorporate data projections (both deterministic and random) to simplify and speed up the procedure. Bio: Greg Hamerly is an assistant professor of computer science at Baylor University. His work is in machine learning, especially model selection in unsupervised learning and applications to computer architecture simulation. Before joining Baylor, he spent a year as a postdoc at KU Leuven (Belgium), after graduating from UCSD in 2003. | |
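The abstract does not spell out the individual tests. One well-known test of this kind, used in G-means, projects a cluster's points onto a single direction (in G-means, the line joining two candidate child centers). It then applies an Anderson-Darling normality test to the projected values and splits the cluster only if normality is rejected. The minimal sketch below assumes the caller supplies that direction. The default critical value of 1.8692 (roughly a significance level of 0.0001) is likewise an assumption, not a value taken from the talk.

```python
from __future__ import annotations

import math
from statistics import NormalDist, fmean, stdev


def anderson_darling(values: list[float]) -> float:
    """Anderson-Darling A*^2 for normality, with mean and std estimated from the data."""
    n = len(values)
    mu, sigma = fmean(values), stdev(values)
    if sigma == 0:
        raise ValueError("values must not all be identical")
    eps = 1e-12  # keep log() finite for extreme points
    phi = [min(max(NormalDist().cdf((x - mu) / sigma), eps), 1 - eps) for x in sorted(values)]
    s = sum((2 * i + 1) * (math.log(phi[i]) + math.log(1 - phi[n - 1 - i])) for i in range(n))
    a2 = -n - s / n
    return a2 * (1 + 4 / n - 25 / n ** 2)  # small-sample correction


def project(points: list[tuple[float, ...]], direction: tuple[float, ...]) -> list[float]:
    """Project each point onto a direction, e.g. the line joining two child centers."""
    norm_sq = sum(d * d for d in direction)
    return [sum(p * d for p, d in zip(point, direction)) / norm_sq for point in points]


def looks_gaussian(points, direction, critical: float = 1.8692) -> bool:
    """Keep the cluster unsplit if the projected data pass the normality test."""
    return anderson_darling(project(points, direction)) <= critical
```

In a G-means-style loop, a cluster would be replaced by its two children only when `looks_gaussian` returns `False`. Projecting to one dimension is what keeps the test cheap.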

| Computational Study Of Nonverbal Social Communication Abstract: The goal of this emerging research field is to recognize, model and predict human nonverbal behavior in the context of interaction with virtual humans, robots and other human participants. At the core of this research field is the need for new computational models of human interaction that emphasize the multi-modal, multi-participant and multi-behavior aspects of human behavior. This multi-disciplinary research topic overlaps the fields of multi-modal interaction, social psychology, computer vision, machine learning and artificial intelligence, and has many applications in areas as diverse as medicine, robotics and education. During my talk, I will focus on three novel approaches to achieve efficient and robust nonverbal behavior modeling and recognition: (1) a new visual tracking framework (GAVAM) with automatic initialization and bounded drift, which acquires the view-based appearance of the object online, (2) the use of latent-state models in discriminative sequence classification (Latent-Dynamic CRF) to capture the influence of unobservable factors on nonverbal behavior, and (3) the integration of contextual information (specifically dialogue context) to improve nonverbal prediction and recognition. Bio: Dr. Louis-Philippe Morency is currently a research professor at the USC Institute for Creative Technologies, where he leads the Nonverbal Behaviors Understanding project (ICT-NVREC). He received his Ph.D. from the MIT Computer Science and Artificial Intelligence Laboratory in 2006. His main research interest is the computational study of nonverbal social communication, a multi-disciplinary research topic that overlaps the fields of multi-modal interaction, computer vision, machine learning, social psychology and artificial intelligence. He developed "Watson", a real-time library for nonverbal behavior recognition that became the de facto standard for adding perception to embodied agent interfaces.
He has received many awards for his work on nonverbal behavior computation, including three best-paper awards in 2008 (at various IEEE and ACM conferences). He was recently selected by IEEE Intelligent Systems as one of the "Ten to Watch" for the future of AI research. | |

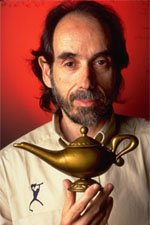

| Drawing on Sketchy Knowledge Abstract: Hand-drawn sketches are easy for people to produce, but lines aren't straight, endpoints don't meet, circles look squashed, and, if it's really bad, you can't tell what it is at all. But often, a really smart computer can figure it out anyway. Commonsense knowledge, expressed in natural language, is easy for people to state, but it's vague, context-dependent, inconsistent, and, if it's really bad, useless. But often, a really smart computer can figure it out anyway. Bio: Henry Lieberman has been a Research Scientist at the MIT Media Laboratory since 1987. His interests are in the intersection of artificial intelligence and the human interface. He directs the Software Agents group, which is concerned with making intelligent software that provides assistance to users in interactive interfaces. Many of his current projects revolve around applying commonsense reasoning to interactive interfaces. He is using a large knowledge base of commonsense facts about everyday life to streamline interfaces, provide intelligent defaults, and offer proactive help. Application areas include predictive typing, multilingual communication, management of photo and media libraries, product recommendation and e-commerce tools. He has edited or co-edited three books, including End-User Development (Springer, 2006), Spinning the Semantic Web (MIT Press, 2004), and Your Wish is My Command: Programming by Example (Morgan Kaufmann, 2001). From 1987 to 1994 he worked with graphic designer Muriel Cooper on tools for visual thinking and on new graphic metaphors for information visualization and navigation. He has a strong interest in making programming easier for non-expert users. He is a pioneer of the technique of Programming by Example, in which a user demonstrates examples that are recorded and generalized using techniques from machine learning. He has also worked on reversible debuggers, 3D programming, and natural language programming.
From 1972 to 1987, he was a researcher at the MIT Artificial Intelligence Laboratory. He started with Seymour Papert in the group that originally developed the educational language Logo, and wrote the first bitmap and color graphics systems for Logo. He also worked with Carl Hewitt on actors, an early object-oriented, parallel language, and developed the notion of prototype object systems and the first real-time garbage collection algorithm. He holds a doctoral-equivalent degree (Habilitation) from the University of Paris VI and was a Visiting Professor there in 1989-90. | |

| Endpoint Prediction Using Motion Kinematics Abstract: Point-and-click interaction (pointing) is a frequent task in modern graphical user interfaces (GUIs), so even a marginal improvement in pointing performance can have a dramatic impact on user efficiency. Many researchers have studied pointing facilitation techniques, but these techniques typically make one of two assumptions: either potential targets are relatively sparse on the display, or some technique exists for predicting a user's target, or endpoint, during their movement toward it. In this talk, I will describe our work on endpoint prediction using speed signatures of human motion. This work combines two laws of human motion from psychology research, the stochastic optimized sub-movement model and the minimum jerk law, and develops an approach that uses these laws to extrapolate the endpoint of a motion in computer interfaces during a gesture. Using this technique, we show that it is possible to predict a region of interest on the computer display, to expand groups of targets within that region, and to combine our technique with other prediction strategies such as command frequencies or user task modeling. Together, these results represent an important step forward for realistic pointing facilitation techniques in modern GUIs. Bio: Dr. Edward Lank is an Assistant Professor in the David R. Cheriton School of Computer Science at the University of Waterloo. His research is in the area of Human-Computer Interaction (HCI), including applications of tablet computing, the study of motion kinematics in interfaces, and the design of pervasive computing applications.
Prior to joining the faculty at Waterloo, Dr. Lank was an Assistant Professor of Computer Science at San Francisco State University; a research intern at the Palo Alto Research Center in the Perceptual Document Analysis Area; Chief Technical Officer of MediaShell Corporation, a Queen's University research start-up; and an Adjunct Professor in the Department of Computing and Information Science at Queen's University. He received his Ph.D. in Computer Science from Queen's University in Kingston, Ontario, Canada in 2001 under the supervision of Dr. Dorothea Blostein. He also holds a Bachelor's Degree in Physics with a Minor in Computer Science from the University of Prince Edward Island. | |
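The following sketch illustrates the minimum jerk law only. It is not the speaker's prediction technique, which also draws on the stochastic optimized sub-movement model. A single minimum-jerk movement has a symmetric, bell-shaped speed profile that peaks exactly halfway along the path. Once that peak has been observed, doubling the distance covered so far at the peak therefore extrapolates the endpoint. The 1-D setting, the central-difference speed estimate, and the function names are assumptions. Real pointing movements are not perfectly symmetric.

```python
from __future__ import annotations


def min_jerk_position(x0: float, xf: float, tau: float) -> float:
    """Minimum-jerk position at normalized time tau in [0, 1]."""
    s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    return x0 + (xf - x0) * s


def predict_endpoint(samples: list[tuple[float, float]]) -> float | None:
    """Extrapolate a 1-D endpoint from the (time, position) samples seen so far.

    Assumes one symmetric minimum-jerk movement, whose speed peaks at the
    midpoint of the path, so endpoint = x0 + 2 * (x_at_peak_speed - x0).
    Returns None until the speed peak has been observed.
    """
    if len(samples) < 3:
        return None
    # Central-difference speed at each interior sample i (stored at index i - 1).
    speeds = [
        abs(samples[i + 1][1] - samples[i - 1][1]) / (samples[i + 1][0] - samples[i - 1][0])
        for i in range(1, len(samples) - 1)
    ]
    k = max(range(len(speeds)), key=speeds.__getitem__)
    if k == len(speeds) - 1:
        return None  # speed still rising: peak not yet confirmed
    x0 = samples[0][1]
    x_peak = samples[k + 1][1]
    return x0 + 2 * (x_peak - x0)


# Usage: a 100-pixel movement sampled at 20 Hz over 1 s, observed up to t = 0.7 s.
seen = [(i / 20, min_jerk_position(0.0, 100.0, i / 20)) for i in range(15)]
print(predict_endpoint(seen))  # 100.0
```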

| Google, Developers, and Education and A Deeper Dive into Google's Cloud: Intro to Cloud Computing and Google App Engine Abstract: A two-talk series. (a) Google, Developers, and Education (short, non-technical talk): This brief talk discusses Google's various education initiatives for developers as well as the programs available to academic institutions. It answers questions such as what the Developer Relations team I am part of does, how we educate our developers, which programs Google runs to support higher-education institutions, and which teams at Google work with educational and research institutions and what types of programs they support on behalf of the academic community. (b) A Deeper Dive into Google's Cloud: Intro to Cloud Computing and Google App Engine (technical talk): While many in academia are familiar with Google's productivity suite in the cloud, Google Apps and its educational offering, there is plenty more to Google's cloud than meets the eye. This session starts with an overview of cloud computing in general, then goes deeper into Google's cloud offerings. It gives comprehensive coverage of Google App Engine, including what it is (and is *not*), its services and feature set, some current metrics, and example user profiles. We will also introduce some of our other cloud products, which should interest not only faculty and lecturers (for use in course curricula) and researchers in the lab, but also CIOs/CTOs and the IT technical staff who create the apps that help their institutions operate. The speaker is part of Google's Developer Relations team, an Engineering organization, and not part of Sales, Marketing, or Business Development. Our role is to raise awareness of Google developer tools and their possible applicability in your institution, so these talks are not intended to be a "sales pitch." Bio: Wesley Chun is a Sr. Developer Advocate at Google.
Wesley Chun, MSCS, is the author of Prentice Hall's bestselling "Core Python" series (corepython.com) and the "Python Fundamentals" companion video lectures, and co-author of "Python Web Development with Django" (withdjango.com). He has written for Linux Journal, CNET, and InformIT. In addition to being a Developer Advocate at Google, he runs CyberWeb (cyberwebconsulting.com), a consultancy specializing in Python training. Wesley has over 25 years of programming, teaching, and writing experience, including more than a decade of Python. He has held engineering positions at Sun, Cisco/IronPort, HP, Rockwell, and Yahoo!, where he helped create Yahoo! Mail using Python. He has delivered courses at VMware, Hitachi, LBNL, UC Santa Barbara, UC Santa Cruz, and Foothill College, and makes frequent appearances on the conference circuit. Wesley holds degrees in Computer Science, Mathematics, and Music from the University of California. | |

| Thinking with Hands Abstract: The content of thought can be regarded as internalized and intermixed perceptions of the world, and the actions of thought as internalized and intermixed actions on the world. Re-externalizing the content of thought onto something perceptible, and re-externalizing the actions of thinking as actions of the body, can facilitate thinking. New technologies can do both: they can allow creation and revision of external representations, and they can allow interaction with the hands and the body. This analysis will be supported by several empirical studies. One will show that students learn more from creating visual explanations of STEM phenomena than from creating verbal ones. Another will show that conceptually congruent actions on an iPad promote arithmetic performance. A third will show that when reading spatial descriptions, students use their hands to create mental models. Bio: Barbara Tversky is a Professor Emerita of Psychology at Stanford University and a Professor of Psychology and Education at Teachers College, Columbia University. Tversky specializes in cognitive psychology and is a leading authority in the areas of visual-spatial reasoning and collaborative cognition. Tversky's additional research interests include language and communication, comprehension of events and narratives, and the mapping and modeling of cognitive processes. Tversky received a B.A. in Psychology from the University of Michigan in 1963 and a Ph.D. in Psychology from the University of Michigan in 1969. She has served on the faculty of Stanford University since 1977 and of Teachers College, Columbia University since 2005. Tversky has had a distinguished career as a research psychologist, publishing prolifically in leading academic journals for almost four decades. Several of her studies are among the most significant in cognitive psychology and in experimental psychology generally.
Tversky was named a Fellow of the American Psychological Society in 1995, of the Cognitive Science Society in 2002, and of the Society of Experimental Psychologists in 2004. In 1999, she received the Phi Beta Kappa Excellence in Teaching Award. Tversky is an active and well-regarded teacher of psychology courses at both the introductory and advanced levels. In addition, Tversky has served on the editorial boards of multiple prominent academic journals, including Psychological Research (1976-1984), the Journal of Experimental Psychology: Learning, Memory and Cognition (1976-1982), the Journal of Experimental Psychology: General (1982-1988), Memory and Cognition (1989-2001), and Cognitive Psychology (1995-2002). | |

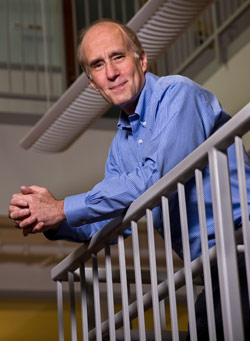

| Pen-Based Interaction in the Classroom and The Clinic Abstract: This talk will describe three projects centered around pen-based interaction. PhysInk is a system that makes it easy to demonstrate 2D behavior by sketching and directly manipulating objects on a physics-enabled stage. Unlike previous tools that simply capture the user’s animation, PhysInk captures an understanding of the behavior in a timeline. This enables useful capabilities such as causality-aware editing and finding physically correct equivalent behavior. We envision PhysInk being used as a physics teacher’s sketchpad or a WYSIWYG tool for game designers. We have all had the experience of drawing data structures on a blackboard to illustrate the steps of an algorithm. The process is tedious and error-prone, and even when done right the result is still a collection of seashell dust on slate. Seeking something better, we have begun developing CodeInk, a system that provides a direct manipulation language for explaining algorithms and an algorithm animation tool embodying that language. CodeInk allows instructors and/or students to describe algorithm behavior by directly manipulating objects on the drawing surface. Objects on the surface behave appropriately, i.e., as data structures, rather than simply as drawings. Finally, ClockSketch is the first member of a family of applications that may revolutionize neuropsychological testing by capturing both the test result and the behavior that produced it. By capturing data with unprecedented spatial and temporal resolution, we have discovered very subtle behaviors that offer clinically interesting clues to mental status. This offers the possibility of detecting diseases such as Alzheimer’s and other forms of dementia far earlier than is currently possible. Bio: In 1978, Randall Davis joined the faculty of the Electrical Engineering and Computer Science Department at MIT, where from 1979 to 1981 he held an Esther and Harold Edgerton Endowed Chair.
He later served for 5 years as Associate Director of the Artificial Intelligence Laboratory. He is currently a Full Professor in the Department and a Research Director of CSAIL, the Computer Science and Artificial Intelligence Laboratory that resulted from the merger of the AI Lab and the Lab for Computer Science. He and his research group are developing advanced tools that permit natural, sketch-based interaction with software, particularly for computer-aided design and design rationale capture. Dr. Davis has been one of the seminal contributors to the field of knowledge-based systems, publishing some 50 articles and playing a central role in the development of several systems. He serves on several editorial boards, including Artificial Intelligence, AI in Engineering, and the MIT series in AI, and was selected in 1984 by Science Digest as one of America’s top 100 scientists under the age of 40. In 1986, he received the AI Award from the Boston Computer Society for his contributions to the field. In 1990, he was named a Founding Fellow of the American Association for AI, and in 1995 he was elected to a two-year term as President of the Association. In 2003, he received MIT’s Frank E. Perkins Award for graduate advising. From 1995 to 1998, he served on the Scientific Advisory Board of the U.S. Air Force. | |

| Perspectives on Microsoft OneNote and Education Abstract: The talk will cover interesting aspects of OneNote history, particularly the highlights and challenges of productizing ink and tablet experiences in Microsoft applications with the goal of broad reach. It will also cover the exciting uses of tablets and ink we are seeing in education and beyond, and offer a perspective on the future potential of digital ink and on corresponding developments at Microsoft. Bio: Olya Veselova received her MS from MIT in 2004 under Dr. Randall Davis, with a thesis examining Gestalt principles in sketching. She is now the Project Manager for Microsoft OneNote. | |

| Building and Evaluating Creative Interaction Abstract: Visionaries in Computer Science have long seen the computer as a tool to augment our intellect. However, while it is relatively straightforward to measure the impact of a tool or technique on task efficiency for well-defined tasks, it is much more difficult to measure computers' impact on higher-level cognitive processes, such as creative processes. In my own research in Human-Computer Interaction, I create novel interaction techniques, but I run up against the problem of demonstrating how these tools positively impact higher-level processes such as creativity, expressiveness and exploration. In this talk, I first present a variety of interaction techniques that I have developed, and I then describe a new survey metric, the Creativity Support Index (CSI), that we have developed to help researchers and designers evaluate the level of creativity support provided by various systems, tools or interfaces. I will discuss what has been learned during the process of creating this survey and its usage in three different studies. The Creativity Support Index is one of the very first indices to support evaluation of a computer system's impact on higher-level cognitive work. I will discuss the CSI within the context of my longer-term goal to develop a suite of tools (including biometric tools) that provide both stronger analytical power and a fundamental framework for evaluating computational support for creative activities, engagement and aesthetic experience. Bio: Dr. Celine Latulipe has a PhD in Computer Science from the University of Waterloo in Canada. She is an Assistant Professor of Human-Computer Interaction in the Department of Software and Information Systems in the College of Computing and Informatics at UNC Charlotte. Dr. Latulipe has long been fascinated by two-handed interaction in the real world, and by its absence in the human-computer interface.
She has developed numerous individual and collaborative two-handed interaction techniques, which have blossomed into an exploration of creative expression. Dr. Latulipe works on projects with choreographers, dancers, artists and theatre producers to better understand creative work in practice and how technology may play a role in supporting and evaluating creative work practices. Currently, Dr. Latulipe is working on the Dance.Draw project, funded by an NSF CreativeIT grant. | |
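The abstract does not describe how the CSI is scored. This minimal sketch assumes the scheme later published for the CSI:

- six factors, each rated with two agreement statements scored out of 10;
- each factor weighted by how many times it is chosen in 15 pairwise importance comparisons;
- the weighted sum divided by 3, giving a score out of 100.

The factor names, the function name `csi_score`, and the input shapes are assumptions made for illustration.

```python
from __future__ import annotations

from itertools import combinations

FACTORS = ("Collaboration", "Enjoyment", "Exploration",
           "Expressiveness", "Immersion", "Results Worth Effort")


def csi_score(agreement: dict[str, tuple[int, int]],
              preferences: dict[frozenset[str], str]) -> float:
    """Weighted creativity-support score on a 0-100 scale (assumed scheme).

    agreement: two agreement ratings (0-10) per factor.
    preferences: for each of the 15 factor pairs, the factor chosen as more important.
    """
    pairs = {frozenset(pair) for pair in combinations(FACTORS, 2)}
    if set(preferences) != pairs:
        raise ValueError("need exactly one choice for each of the 15 factor pairs")
    counts = dict.fromkeys(FACTORS, 0)
    for pair, winner in preferences.items():
        if winner not in pair:
            raise ValueError(f"{winner!r} is not a member of {sorted(pair)}")
        counts[winner] += 1  # each factor can be chosen 0-5 times
    total = 0
    for factor in FACTORS:
        a, b = agreement[factor]
        if not (0 <= a <= 10 and 0 <= b <= 10):
            raise ValueError("agreement ratings must be between 0 and 10")
        total += (a + b) * counts[factor]  # factor score (0-20) times its weight
    return total / 3  # maximum is 20 * 15 / 3 = 100


# Usage: a respondent who values Exploration above everything else.
ranking = ["Exploration", "Expressiveness", "Enjoyment",
           "Immersion", "Results Worth Effort", "Collaboration"]
prefs = {frozenset(p): min(p, key=ranking.index) for p in combinations(FACTORS, 2)}
ratings = {f: (10, 10) if f == "Exploration" else (0, 0) for f in FACTORS}
print(round(csi_score(ratings, prefs), 2))  # 33.33
```

The paired comparisons let respondents' priorities weight the score, so a tool is rewarded most for supporting the factors its users say matter to them.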

| The Museum as an Arena for Multidisciplinary Research: The PIL Museum Visitors’ Guide Project Abstract: In recent years there has been considerable research on intelligent museum visitors’ guides. This research mainly focused on supporting the individual visitor by providing personalized information in a context-aware manner, and it mainly exploited novel technological developments to better support individual museum visitors. These included multimedia as a presentation tool, novel positioning technologies (such as infrared, RFID, WiFi, and more), presentation technologies and devices, and more. However, people tend to visit museums in groups: organized tours, small groups of friends, families, etc. The time now seems right to move towards supporting groups of visitors in the museum. A museum visitors’ guide system has been developed within the framework of the “PIL” project (PEACH-Israel, an Italian-Israeli collaboration). As part of the research, a methodology for preparing multimedia presentations has been developed; the idea of ubiquitous user modeling was defined, and one of its techniques was applied to bootstrap an on-site user model for the visitor; and a system was designed to enhance interaction among museum visitors (and thus enhance learning) by providing inter-group, context-aware communication mechanisms. In addition to supporting individuals, the system supports the whole group by providing time-triggered recommendations for exhibits and presentations as the available visit time runs out. The talk will present the research involved in the mobile museum visitors’ guide developed within the PIL project, focusing on demonstrations of the context-aware interaction mechanism and of group support as examples of context-aware services in an active museum.
Bio: Tsvi Kuflik has been at the University of Haifa, Israel, since 2004; before that, from 2003, he was with ITC-irst, working on user modeling in "Active Museum". At the University of Haifa, Tsvi works on various research aspects related to "Active Museum", such as User Modeling, Intelligent User Interfaces, Context Awareness, Content Preparation and Multi-Agent Software Engineering (in this work Tsvi combines recent research interests with 20 years of experience in software and system engineering). This work is done in the framework of the collaboration between the University of Haifa (Israel) and ITC-irst (Trento, Italy), in which research results and know-how are transferred from ITC-irst to Haifa and extended with local research. Tsvi’s research interests include user modeling, information filtering and retrieval, machine learning applications, decision support systems and software engineering. | |

| Ultra-Low Power Asynchronous Digital Circuits Abstract: The International Technology Roadmap for Semiconductors (ITRS) predicts that asynchronous (clockless) circuits will account for approximately 50% of the multibillion-dollar semiconductor industry by 2024. For the past five years, ITRS has identified leakage power consumption as one of the top three overall challenges, and it has identified leakage as a clear long-term threat and a focus topic for design technology over the next 15 years. Among the many techniques proposed to control or minimize leakage power in deep-submicron technology, Multi-Threshold CMOS (MTCMOS) is very promising: it reduces leakage power by disconnecting the power supply from the circuit during idle (sleep) mode while maintaining high performance in active mode. This talk will introduce asynchronous logic, NULL Convention Logic (NCL), and MTCMOS, and then detail how the MTCMOS technique is combined with NCL to yield a fast, ultra-low power asynchronous circuit design methodology called Multi-Threshold NULL Convention Logic (MTNCL). MTNCL vastly outperforms traditional NCL in all aspects (area, speed, energy, and leakage power) and significantly outperforms the synchronous MTCMOS architecture in area, energy, and leakage power, although the synchronous MTCMOS design can operate faster. Bio: Scott C. Smith received B.S. degrees in Electrical Engineering and Computer Engineering and an M.S. degree in Electrical Engineering from the University of Missouri - Columbia in 1996 and 1998, respectively, and a Ph.D. degree in Computer Engineering from the University of Central Florida, Orlando, in 2001. 
He started as an Assistant Professor at the University of Missouri - Rolla (now Missouri University of Science & Technology) in August 2001, was promoted to Associate Professor in March 2007 (effective September 2007), and is currently an Associate Professor at the University of Arkansas, Fayetteville. He has authored more than 50 refereed publications, 3 issued U.S. patents (with 3 more pending), and a book, "Designing Asynchronous Circuits using NULL Convention Logic (NCL)," all of which can be viewed on his website: http://comp.uark.edu/~smithsco/. Additionally, Dr. Smith has procured over $3.25M in funding from a variety of government agencies and private industry, and is a co-owner of two IC design companies located in Fayetteville, AR. His research interests include computer architecture, asynchronous logic design, CAD tool development, embedded system design, VLSI, FPGAs, trustable hardware, self-reconfigurable logic, and wireless sensor networks. Dr. Smith is a Senior Member of IEEE and a member of Sigma Xi, Eta Kappa Nu, Tau Beta Pi, and ASEE. | |

| Computing and Reducing Spatial Uncertainty in Geocoded Data Abstract: Spatial data are used in all aspects of research, practice, and policy-making, in applications ranging from location-based services for engaging potential customer bases, to linking environmental exposures to human health outcomes, to planning for and responding to natural disasters. Unless obtained as a primary source through field work or direct observation, these data are often computationally derived through processes such as natural language processing (NLP) of text documents or transcriptions, spatial integration of heterogeneous multi-modal data sources or sensors, or spatial interpolation of values over an area from a set of known observations. In all cases, quantitative representations of spatial accuracy and uncertainty are paramount, both for fitness-for-use estimations and for determining spatial confidence intervals for the results of subsequent operations or decisions made using these data. This talk will explore the development of innovative computational approaches for quantifying, representing, and reducing the uncertainty of spatial information derived through geocoding, the process of translating location descriptions into digital geographic representations. This process involves a series of steps, including NLP, probabilistic record linkage, and spatial interpolation, each of which produces non-stationary spatial error distributions that contribute, independently and in concert, to the resulting output. One goal of this research is to overcome the limitations of current metrics, which describe the "quality" of geocoded data as qualitative classifications that scientists and end users cannot use in meaningful ways. Alternative measures are developed that rely on quantifying and propagating the uncertainty of each internal component of the geocoding process. 
Using these measures, algorithmic approaches for reducing spatial uncertainty will be presented that leverage spatial relationships, operators, and knowledge drawn from regional and local artifacts of the data available to a geocoding system. Bio: Dr. Daniel W. Goldberg is an Assistant Professor of Geography at Texas A&M University. Dr. Goldberg holds BS, MS, and PhD degrees in Computer Science: the BS from Rutgers University in the Great State of New Jersey, and the MS and PhD from the University of Southern California. He was most recently the Associate Director of the GIS Laboratory at USC. Dr. Goldberg’s research interests lie at the intersection of Computer Science and GIScience, a niche where he uses CS theory to advance GIS data structures, algorithms, and practices for applications within the Health and Social Sciences, on topics ranging from environmental exposure assessment to the construction of techniques and systems for integrating and analyzing heterogeneous spatio-temporal data sources, such as those describing disease and information propagation. He has published widely on the development and application of geocoding techniques and is currently focusing on creating methods to compute and represent spatial uncertainty in geocoded data, and on the means by which these uncertainties can be propagated to and within environmental health models. | |

| Using Human Perception to Automatically Generate Sketch Recognition Systems Abstract: Sketching is a natural modality of human-computer interaction for a variety of tasks (e.g., conceptual design), and sketch recognition systems are currently being developed for many domains. However, if these systems are to handle the intricacies of each domain, they require signal-processing expertise and are time-consuming to build. We want to enable user interface designers and experts in the domain itself, rather than sketch recognition experts, to build these systems. I created and implemented a new framework in which developers can specify a domain description indicating how domain shapes are to be identified, displayed, and edited; the system then automatically generates a sketch recognition user interface for that domain. LADDER, a language using a perceptual vocabulary based on Gestalt principles and my own user studies, was developed to effectively describe how to recognize, display, and edit domain shapes. A translator and a customizable recognition system are combined with a domain description to automatically create a domain-specific recognition system. With this new technology, developers will be able to write a domain description to create a new sketch interface for a domain, greatly reducing the time and expertise needed to do so. However, it is more natural for a user to specify a shape by drawing it than by editing text. Human perception can be used to create computer-generated descriptions from a single drawn example. Yet both human- and computer-generated descriptions may be flawed. Thus, I created a modification of the traditional model of active learning in which the system selectively generates its own (near-miss) examples and uses the teacher as a source of labels. System-generated near-misses offer a number of advantages: human-generated examples are tedious to create and may not expose problems in the current concept. 
It seems most effective for near-miss examples to be generated by whichever learning participant (teacher or student) knows better where the deficiencies lie; this allows concepts to be refined more quickly and effectively. When working in a closed domain such as this one, the computer learner knows exactly which conceptual uncertainties remain and which hypotheses need to be tested and confirmed. The system uses these labeled examples to automatically build a LADDER shape description using my modification of the version spaces algorithm, which handles interrelated constraints and can also learn negative and disjunctive constraints. Bio: Tracy Hammond is an assistant professor at Texas A&M University with a focus on human perception, sketch recognition, computer-human interaction, and learning. She earned a B.A. in math, a B.S. in applied math, an M.S. in computer science, and an M.A. in anthropology from Columbia University, and a Ph.D. in computer science from MIT. Previously, she taught for five years at Columbia University, and she was a telecom analyst for four years at Goldman Sachs, where she designed, developed, implemented, and administered global computer telephony applications. | |

| Is Sketch Recognition (Still) An Issue of Artificial Intelligence? Abstract: Extracting information from a human and getting it into the computer as data has typically lagged behind the remarkable progress made in manipulating and displaying that same data. This gap may be due to the fundamentally different ways that humans and computers process input, an issue first identified in the 1970s. There have been two basic approaches to solving the problem: requiring the user to style the input in a way that is adapted to the computer, or extracting features from the user's free-form input. Herot's early work in sketch recognition explored the latter approach, leading to the hypothesis that further progress would require advances in artificial intelligence (AI). This talk looks back at the problems identified then and explores whether AI is the answer today. Bio: Christopher Herot is an entrepreneur in the field of digital media and communication. He is currently Chief Product Officer for VSee Labs, Inc. and a consultant to the entertainment and communications industries. He has founded three software companies and directed the Advanced Technology Group at Lotus Development Corporation. His interests include collaborative systems, digital audio and video, and wireless communications, all of which grew out of his work in sketch recognition at the MIT Architecture Machine Group, the group that became the MIT Media Laboratory. Herot received an MS in Electrical Engineering and a BS in Art & Design from MIT. | |

| Understanding, Designing and Developing Natural User Interactions for Children Abstract: The field of Natural User Interaction (NUI) focuses on allowing users to interact with technology through the range of human abilities, such as touch, voice, vision, and motion. Children are still developing their cognitive and physical capabilities, creating unique design challenges and opportunities for interacting in these modalities. This talk will describe Lisa Anthony's research on understanding children's expectations and abilities with respect to NUIs and on designing and developing new multimodal NUIs for children in a variety of contexts, including education, healthcare, and serious games. Projects she will present include recent work on understanding the characteristics of children using touch and gesture interaction on mobile devices and past work on designing natural educational interactions for children. Bio: Lisa Anthony is presently a post-doctoral research associate in the Information Systems Department at the University of Maryland Baltimore County (UMBC). She holds an MS in Computer Science (Drexel University, 2002), a second MS in Human Computer Interaction (Carnegie Mellon University, 2006), and a PhD in Human-Computer Interaction (Carnegie Mellon University, 2008). Her research interests include understanding how children can make use of advanced interaction techniques, with a special emphasis on pen and gesture interaction, for desktop and mobile applications in education, healthcare, and serious games. Her PhD dissertation investigated the use of handwriting input for middle school math tutoring software, and her simple and accurate multistroke gesture recognizer, $N, is well known in the field. | |

| Fluid Interaction in Pen/Tablet Interfaces Abstract: While pen/tablet computers promise a user experience that moves fluidly between input, editing, and program control, this promise is rarely realized due to usability shortcomings of current pen/tablet interfaces. In this talk, I will discuss our research on improving interaction on pen computers, including research on sketching on small screens, the paper-digital divide, sloppy selection, and minimizing modes. The overall theme of this research thrust has been to analyze measurable parameters of users' actions in interfaces as indicators of users' intentions. By understanding what users are trying to accomplish, we hope to design interfaces that speed interaction, reduce user errors, and provide a computing experience tailored to the user's current goals. Bio: Dr. Edward Lank is an Assistant Professor in the David R. Cheriton School of Computer Science at the University of Waterloo. His research is in the area of Human-Computer Interaction (HCI), including applications of tablet computing, the study of motion kinematics in interfaces, and the design of pervasive computing applications. Prior to joining the faculty at Waterloo, Dr. Lank was an Assistant Professor of Computer Science at San Francisco State University (2002 - 2006); a research intern at the Palo Alto Research Center in the Perceptual Document Analysis Area (2001); Chief Technical Officer of MediaShell Corporation, a Queen's University research start-up (2000 - 2001); and an Adjunct Professor in the Department of Computing and Information Science at Queen's University (1997 - 2001). He received his Ph.D. in Computer Science from Queen's University in Kingston, Ontario, Canada in 2001 under the supervision of Dr. Dorothea Blostein. He also holds a Bachelor's Degree in Physics with a Minor in Computer Science from the University of Prince Edward Island. | |

| Researching Engineering Education: Some Philosophical Considerations Abstract: If enhancements to teaching, learning and assessment in engineering are to lead to lasting benefits for students, they require the underpinning of research and scholarship into the academic practice of engineering education, as well as discipline-based research. Research into engineering education can take different forms, ranging from large-scale multi-institutional studies, to cross-institutional impact analysis, to individual teachers undertaking action research. It can often be challenging, requiring an understanding not only of how students learn but also of educational research methods. There are a variety of different and often contradictory approaches to educational research, which can make it difficult for the novice researcher to decide which one to select for a given study. Indeed, many academics, when first embarking on research into education, naturally draw upon the research methods associated with their own discipline. However, the most appropriate method for researching a given subject is not necessarily the most appropriate for researching the education of that subject. A case study is presented of the experiences of one engineering education researcher as they diversified from methods rooted in their own discipline into methods borrowed from other disciplines. Consideration of underlying philosophical concepts frames this journey in a wider context, allowing key concerns to be explored. These concerns include the value of controlled experiments in the context of engineering education and the use of interpretivist approaches to illuminate particular situations. 
The importance of understanding different methodologies, and the claims they can support, will be discussed, both in the context of conducting one's own research and in the context of interpreting the work of others, thereby enabling researchers to take a more critical approach. This is of particular importance when seeking to apply the findings of others to one's own practice, as well as when placing one's own research in an appropriate theoretical context. Ethical issues are also considered. Educational research should be prompted by a desire to improve the common good and should seek to minimise harm to learners. Ethical considerations should inform both the conduct of the research and the dissemination of findings. This can prove problematic when undertaking practitioner-based research, and key aspects of this will be explored. These reflections will be useful to others embarking on their own research projects. Drawing upon key Western philosophical traditions, social science theories and pedagogy, the paper argues that an understanding of key aspects of philosophy, in particular consideration of "what is knowledge?" and "how we come to know", can improve the design of investigations into how students learn and how that learning can be enhanced. Bio: Dr Sandra Cairncross was appointed Dean of Engineering, Computing & Creative Industries in April 2008. Prior to that, she was Associate Dean with responsibility for Academic Quality and Customer Service. Her role is to lead the strategic development of the Faculty, building on its excellent track record in supporting Scotland's knowledge-based economy by providing a portfolio of academic programmes and engaging in research and knowledge transfer relevant to the needs of students, business and industry, and other stakeholders. Dr Cairncross is a Chartered Engineer, a member of the Institute of Educational Technology and a Fellow of the Higher Education Academy, with a background in Interactive Media Design. 
She is a Senior Teaching Fellow at Edinburgh Napier; the focus of her doctoral studies was how best to use new learning technologies to enhance teaching, learning and the student experience, and she has published in this area. | |

| Steps Toward Natural Interaction Abstract: Drawings, sketches, and diagrams of many sorts are ubiquitous in engineering education and practice, providing a powerful way to envision, explain, and reason. Yet historically, diagrams have been static, passive pictures, comprehensible only to human observers. We aim to change that: we want to create a kind of "magic paper" that understands what is being drawn. We want to make it possible for a variety of surfaces to behave as that "paper," ranging from active whiteboards, to tablet computers, to desktops in classrooms and benches in laboratories. This is a vision of computing that spans the range from tablet computer notebooks full of magic paper to a new view of desktop computing: your (physical) desktop should compute. More generally yet, we want people to be able to sketch, gesture, and talk about their ideas in the way they do when interacting with each other, and have the "paper" understand the messy freehand sketches, casual gestures, and fragmentary utterances that are part and parcel of such interaction. Once this happens, a variety of powerful next steps are possible: a sketch of a design for a mechanical device, for example, might be simulated to display its behavior, analyzed (e.g., for structural soundness), or critiqued (e.g., for manufacturability). We want to produce systems for a variety of domains, including mechanical engineering, software architecture (e.g., UML diagrams), and digital electronic schematics, as well as sets of tools that will make it simple for others to create sketch understanding systems in additional domains. To date, we have produced a demonstration system and a substantial foundation for the architecture of the next generation of this system. The proposed work will carry us through to the completion of that next-generation architecture. Bio: Dr. Davis is an expert in sketch recognition and runs the Design Rationale Group at MIT.
Dr. Davis was Dr. Hammond's research advisor, as well as the advisor of many renowned sketch recognition faculty around the world, including previous visitors Dr. Christine Alvarado of Harvey Mudd and Dr. Metin Sezgin of Cambridge University/Koc University. |