Assignments

The course's assignments apply an array of AI techniques to playing Pac-Man. However, these assignments don't focus on building AI for video games. Instead, they teach foundational AI concepts, such as informed state-space search, probabilistic inference, and reinforcement learning. These concepts underly real-world application areas such as natural language processing, computer vision, and robotics.

The assignments allow you to visualize the results of the techniques you implement. They also contain code examples and clear directions, but do not force you to wade through undue amounts of scaffolding. Finally, Pac-Man provides a challenging problem environment that demands creative solutions; real-world AI problems are challenging, and Pac-Man is too.

Technical Notes

The Pac-Man assignments are written in pure Python 3.6+ and do not depend on any packages external to a standard Python distribution, except the Logic and ML assignments.

Help

Questions, comments, and clarifications regarding the assignments should NOT be sent via email to the course staff. Please use the class discussion board on Canvas instead.

Submission

Each student is required to submit their own unique solution i.e., assignments should be completed alone. Assignments will by default be graded automatically for correctness, though we will review submissions individually as necessary to ensure that they receive the credit they deserve. Assignments can be submitted as often as you like before the deadline; we strongly encourage you to keep working until you get a full score by the autograder.

Credits

The assignments were developed by John DeNero, Dan Klein, and Pieter Abbeel at UC Berkeley.

This short tutorial introduces students to setup examples, the Python programming language, and the autograder system. Assignment 0 will cover the following:

- Instructions on how to set up Python,

- Workflow examples,

- A mini-Python tutorial,

- Assignment grading: Every assignment's release includes its autograder that you can run locally to debug. When you submit, the same autograder is ran.

Files to Edit and Submit: You will fill in

portions of addition.py,

buyLotsOfFruit.py,

and shopSmart.py

in tutorial.zip during the assignment. Once you have

completed the assignment, you will submit ONLY these files to Canvas zipped into a single

file.

Please DO NOT change/submit any other files.

Evaluation: Your code will be autograded for technical correctness. Please do not change the names of any provided functions or classes within the code, or you will wreak havoc on the autograder. However, the correctness of your implementation - not the autograder's judgements - will be the final judge of your score. If necessary, we will review and grade assignments individually to ensure that you receive due credit for your work.

Academic Dishonesty: We will be checking your code against other submissions in the class for logical redundancy. If you copy someone else's code and submit it with minor changes, we will know. These cheat detectors are quite hard to fool, so please don't try. We trust you all to submit your own work only; please don't let us down. If you do, we will pursue the strongest consequences available to us.

Python Installation

You need a Python (3.6 or higher) distribution and either Pip or Conda.

To check if you meet our requirements, you should open the terminal

run python -V

and see that the version is high enough. Then, run

pip -V and

conda -V

and see that at least one of these works and prints out some version

of the tool. On some systems that also have Python 2 you may have to

use python3

and pip3

instead of the aforementioned.

If you need to install things, we recommend Python 3.9 and Pip for simplicity. If you already have Python, you just need to install Pip.

On Windows either Windows Python or WSL2 can be used. WSL2 is really nice and provides a full Linux environment, but takes up more space; Linux/ MacOS (both Unix) is used much more often for computer science than Windows because it is more convenient and development tools are also more reliable. It doesn't make a difference for this class but is a good tool to learn to use if you are interested and have the time.

If you choose to use Conda via Anaconda or Miniconda, these already come with Python and Pip so you would install just the one thing.

- Install Python:

- For Windows and MacOS, we recommend using an official graphical installer: download and install Python 3.9.

- For Linux, use a package manager to install Python 3.9.

- Install Pip:

- For Windows and MacOS, run

python -m ensurepip --upgrade. - For Linux, use a package manager to install Pip for Python 3.

- For Windows and MacOS, run

Dependencies installation

First, go through the Autograding section to understand how to work

with tutorial.zip and the

autograder.py

inside.

The machine learning assignment has additional dependencies. It's important to install them now so that if there is an issue with the Python installation, we don't have to come back or redo the installation later.

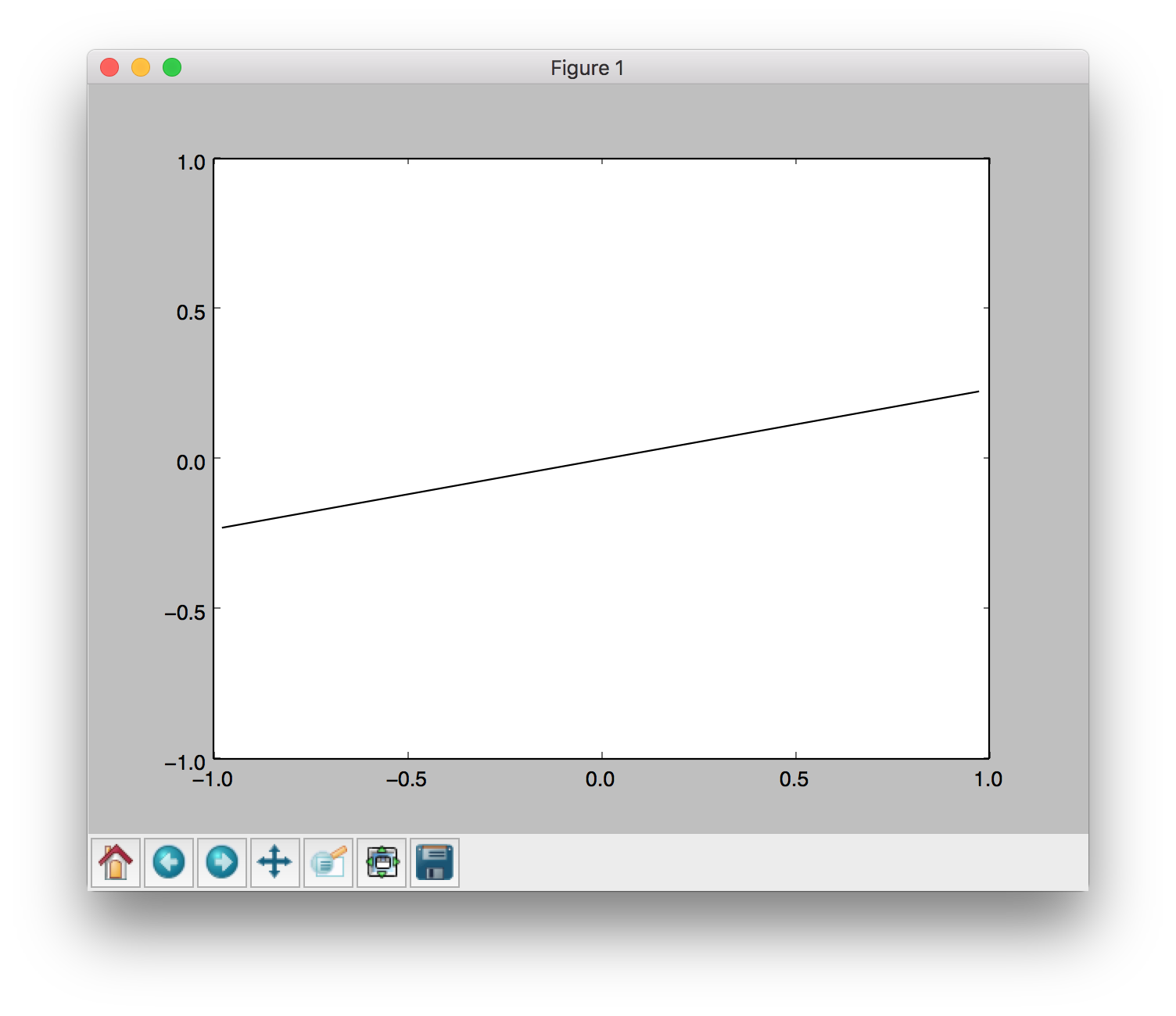

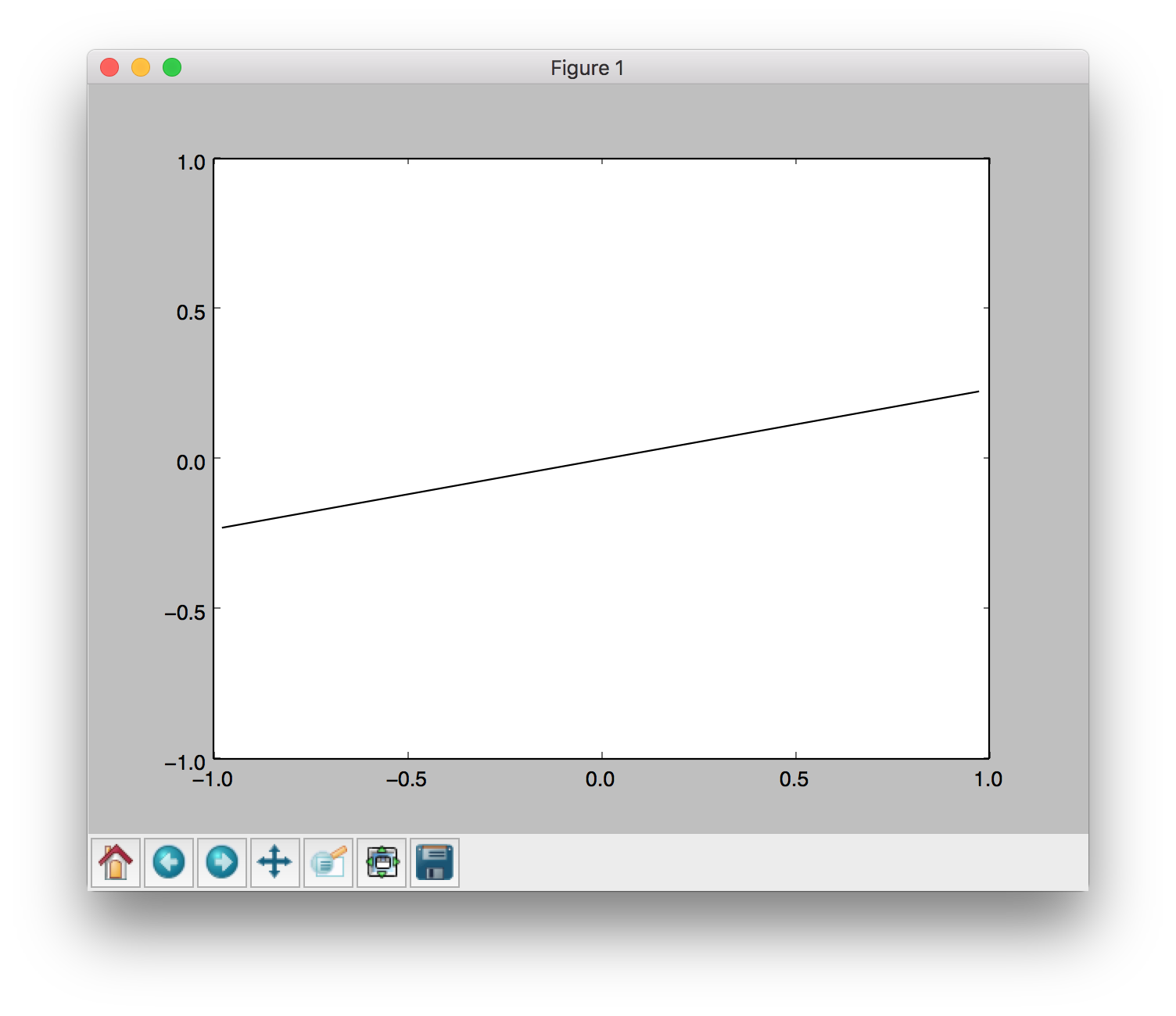

On Conda installations, the dependencies should already be there. You can test by confirming that this command produces the below window pop up where a line segment spins in a circle.

python autograder.py --check-dependencies

The libraries needed and the corresponding commands are:

- numpy, which provides support for fast, large multi-dimensional arrays.

- matplotlib, a 2D plotting library.

pip install numpy

pip install matplotlib

After these, use the python autograder.py --check-dependencies command to

confirm that everything works.

Troubleshooting

Some installations will have python3

and pip3

refer to what we want to use. Also, there may by multiple

installations of python that may complicate which commands install

where.

python -V # version of python

pip -V # version of pip, and which python it is installing to

which python # where python is

If there is a tkinter

import error, it's likely because Python is atypical, and from

Homebrew. Uninstall that and install python from Homebrew with

tkinter

support, or use the recommended graphical installer.

Workflow/ Setup Examples

You are not expected to use a particular code editor or anything, but here are some suggestions on convenient workflows (you can skim both for half a minute and choose the one that looks better to you):

- GUI and IDE, with VS Code shortcuts. You are highly encouraged to read the Using an IDE section if using an IDE to learn convenient features.

- In terminal, using Unix commans and Emacs (this is fine to do on Windows too). Useful to be able to edit code on any machine without setup, and remote connecting setups such as using the instructional machines.

Python Basics

If you're new to Python or need a refresher, we recommend going through the Python basics tutorial.

Autograding

To get you familiarized with the autograder, we will ask you to code, test, and submit your code after solving the three questions.

You can download all of the files associated the autograder tutorial as a zip archive: tutorial.zip. Unzip this file and examine its contents:

addition.py

autograder.py

buyLotsOfFruit.py

grading.py

projectParams.py

shop.py

shopSmart.py

testClasses.py

testParser.py

test_cases

tutorialTestClasses.py

This contains a number of files you'll edit or run:

addition.py: source file for question 1buyLotsOfFruit.py: source file for question 2shop.py: source file for question 3shopSmart.py: source file for question 3autograder.py: autograding script (see below)

and others you can ignore:

test_cases: directory contains the test cases for each questiongrading.py: autograder codetestClasses.py: autograder codetutorialTestClasses.py: test classes for this particular assignmentprojectParams.py: assignment parameters

The command python autograder.py grades your

solution to all three problems. If we run it before editing any files we get a page or two of output:

python autograder.py Should look as follows:

[~/tutorial]$ python autograder.py

Starting on 1-21 at 23:39:51

Question q1

===========

*** FAIL: test_cases/q1/addition1.test

*** add(a, b) must return the sum of a and b

*** student result: "0"

*** correct result: "2"

*** FAIL: test_cases/q1/addition2.test

*** add(a, b) must return the sum of a and b

*** student result: "0"

*** correct result: "5"

*** FAIL: test_cases/q1/addition3.test

*** add(a, b) must return the sum of a and b

*** student result: "0"

*** correct result: "7.9"

*** Tests failed.

### Question q1: 0/1 ###

Question q2

===========

*** FAIL: test_cases/q2/food_price1.test

*** buyLotsOfFruit must compute the correct cost of the order

*** student result: "0.0"

*** correct result: "12.25"

*** FAIL: test_cases/q2/food_price2.test

*** buyLotsOfFruit must compute the correct cost of the order

*** student result: "0.0"

*** correct result: "14.75"

*** FAIL: test_cases/q2/food_price3.test

*** buyLotsOfFruit must compute the correct cost of the order

*** student result: "0.0"

*** correct result: "6.4375"

*** Tests failed.

### Question q2: 0/1 ###

Question q3

===========

Welcome to shop1 fruit shop

Welcome to shop2 fruit shop

*** FAIL: test_cases/q3/select_shop1.test

*** shopSmart(order, shops) must select the cheapest shop

*** student result: "None"

*** correct result: "<FruitShop: shop1>"

Welcome to shop1 fruit shop

Welcome to shop2 fruit shop

*** FAIL: test_cases/q3/select_shop2.test

*** shopSmart(order, shops) must select the cheapest shop

*** student result: "None"

*** correct result: "<FruitShop: shop2>"

Welcome to shop1 fruit shop

Welcome to shop2 fruit shop

Welcome to shop3 fruit shop

*** FAIL: test_cases/q3/select_shop3.test

*** shopSmart(order, shops) must select the cheapest shop

*** student result: "None"

*** correct result: "<FruitShop: shop3>"

*** Tests failed.

### Question q3: 0/1 ###

Finished at 23:39:51

Provisional grades

==================

Question q1: 0/1

Question q2: 0/1

Question q3: 0/1

------------------

Total: 0/3

Your grades are NOT yet registered. To register your grades, make sure

to follow your instructor's guidelines to receive credit on your assignment.

For each of the three questions, this shows the results of that question's tests, the questions grade, and a final summary at the end. Because you haven't yet solved the questions, all the tests fail. As you solve each question you may find some tests pass while other fail. When all tests pass for a question, you get full marks.

Looking at the results for question 1, you can see that it has failed three tests with the error message "add(a, b) must return the sum of a and b". The answer your code gives is always 0, but the correct answer is different. We'll fix that in the next tab.

Q1: Addition

Open addition.py

and look at the definition of add:

def add(a, b):

"Return the sum of a and b"

"*** YOUR CODE HERE ***"

return 0

The tests called this with a and b set to different values, but the code always returned zero. Modify this definition to read:

def add(a, b):

"Return the sum of a and b"

print("Passed a = %s and b = %s, returning a + b = %s" % (a, b, a + b))

return a + b

Now rerun the autograder (omitting the results for questions 2 and 3):

[~/tutorial]$ python autograder.py -q q1

Starting on 1-22 at 23:12:08

Question q1

===========

*** PASS: test_cases/q1/addition1.test

*** add(a,b) returns the sum of a and b

*** PASS: test_cases/q1/addition2.test

*** add(a,b) returns the sum of a and b

*** PASS: test_cases/q1/addition3.test

*** add(a,b) returns the sum of a and b

### Question q1: 1/1 ###

Finished at 23:12:08

Provisional grades

==================

Question q1: 1/1

------------------

Total: 1/1

You now pass all tests, getting full marks for question 1. Notice

the new lines "Passed a=…" which appear before "*** PASS: …". These

are produced by the print statement in add.

You can use print statements like that to output information useful for debugging.

Q2: buyLotsOfFruit function

Implement the buyLotsOfFruit(orderList)

function in buyLotsOfFruit.py

which takes a list of (fruit,numPounds)

tuples and returns the cost of your list. If there is some

fruit in the list which doesn't appear in

fruitPrices it should print an error message and return

None.

Please do not change the fruitPrices

variable.

Run python autograder.py

until question 2 passes all tests and you get full marks. Each test

will confirm that

buyLotsOfFruit(orderList) returns the correct answer given

various possible inputs. For example,

test_cases/q2/food_price1.test tests whether:

Cost of

[('apples', 2.0), ('pears', 3.0), ('limes', 4.0)] is 12.25

Q3: shopSmart function

Fill in the function shopSmart(orderList,fruitShops)

in shopSmart.py,

which takes an orderList (like the kind passed in to

FruitShop.getPriceOfOrder)

and a list of FruitShop

and returns the FruitShop

where your order costs the least amount in total. Don't change the

file name or variable names, please. Note that we will provide the

shop.py

implementation as a "support" file, so you don't need to submit yours.

Run python autograder.py

until question 3 passes all tests and you get full marks. Each test

will confirm that shopSmart(orderList,fruitShops)

returns the correct answer given various possible inputs. For

example, with the following variable definitions:

orders1 = [('apples', 1.0), ('oranges', 3.0)]

orders2 = [('apples', 3.0)]

dir1 = {'apples': 2.0, 'oranges': 1.0}

shop1 = shop.FruitShop('shop1',dir1)

dir2 = {'apples': 1.0, 'oranges': 5.0}

shop2 = shop.FruitShop('shop2', dir2)

shops = [shop1, shop2]

test_cases/q3/select_shop1.test tests whether:

shopSmart.shopSmart(orders1, shops) == shop1

and test_cases/q3/select_shop2.test tests

whether: shopSmart.shopSmart(orders2, shops) == shop2

Submission

In order to submit your assignment, please upload the following files to

Canvas -> Assignments -> Assignment 0: addition.py,

buyLotsOfFruit.py,

and shopSmart.py. Upload the files in a single zip

file with your UIN as the file name i.e., <your UIN>.zip.

Students implement depth-first, breadth-first, uniform cost, and A* search algorithms. These algorithms are used to solve navigation and traveling salesman problems in the Pacman world.

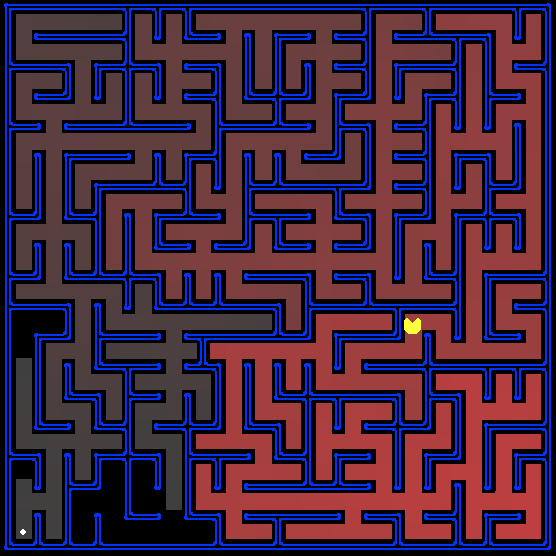

In this assignment, your Pacman agent will find paths through his maze world, both to reach a particular location and to collect food efficiently. You will build general search algorithms and apply them to Pacman scenarios.

As in Assignment 0, this assignment includes an autograder for you to grade your answers on your machine. This can be run with the command:

python autograder.pySee the autograder tutorial in Assignment 0 for more information about using the autograder.

The code for this assignment consists of several Python files, some of which you will need to read and understand in order to complete the assignment, and some of which you can ignore. You can download all the code and supporting files as a search.zip.

Files you'll edit:

search.py: where all of your search algorithms will reside.searchAgents.py: where all of your search-based agents will reside.

Files you might want to look at:

pacman.py: the main file that runs Pacman games. This file describes a Pacman GameState type, which you use in this assignment.game.py: the logic behind how the Pacman world works. This file describes several supporting types like AgentState, Agent, Direction, and Grid.util.py: useful data structures for implementing search algorithms.

Supporting files you can ignore:

graphicsDisplay.py: graphics for PacmangraphicsUtils.py: support for Pacman graphicstextDisplay.py: ASCII graphics for PacmanghostAgents.py: agents to control ghostskeyboardAgents.py: keyboard interfaces to control Pacmanlayout.py: code for reading layout files and storing their contentsautograder.py: assignment autogradertestParser.py: parses autograder test and solution filestestClasses.py: general autograding test classestest_cases/: directory containing the test cases for each questionsearchTestClasses.py: assignment 1 specific autograding test classes

Files to Edit and Submit: You will fill in portions of search.py and searchAgents.py during the assignment. Once you have

completed the assignment, you will submit ONLY these files to Canvas zipped into a single

file.

Evaluation: Your code will be autograded for technical correctness. Please do not change the names of any provided functions or classes within the code, or you will wreak havoc on the autograder. However, the correctness of your implementation - not the autograder's judgements - will be the final judge of your score. If necessary, we will review and grade assignments individually to ensure that you receive due credit for your work.

Academic Dishonesty: We will be checking your code against other submissions in the class for logical redundancy. If you copy someone else's code and submit it with minor changes, we will know. These cheat detectors are quite hard to fool, so please don't try. We trust you all to submit your own work only; please don't let us down. If you do, we will pursue the strongest consequences available to us.

Getting Help: You are not alone! If you find yourself stuck on something, contact the course staff for help. Office hours and the course Campuswire are there for your support; please use them. We want these assignments to be rewarding and instructional, not frustrating and demoralizing.

Welcome to Pacman

After downloading the code, unzipping it, and changing to the directory, you should be able to play a game of Pacman by typing the following at the command line:

python pacman.pyPacman lives in a shiny blue world of twisting corridors and tasty round treats. Navigating this world efficiently will be Pacman's first step in mastering his domain.

The simplest agent in searchAgents.py is called

the GoWestAgent, which always goes West (a trivial

reflex agent). This agent can occasionally win:

python pacman.py --layout testMaze --pacman GoWestAgentBut, things get ugly for this agent when turning is required:

python pacman.py --layout tinyMaze --pacman GoWestAgentIf Pacman gets stuck, you can exit the game by typing CTRL-c into your terminal.

Soon, your agent will solve not only tinyMaze,

but any maze you want.

Note that pacman.py supports a number of options

that can each be expressed in a long way (e.g., --layout) or a short way (e.g., -l). You can see the list of all options and their

default values via:

python pacman.py -hAlso, all of the commands that appear in this assignment also appear in commands.txt, for easy copying and pasting. In

UNIX/Mac OS X, you can even run all these commands in order with bash commands.txt.

New Syntax

You may not have seen this syntax before:

def my_function(a: int, b: Tuple[int, int], c: List[List], d: Any, e: float=1.0):This is annotating the type of the arguments that Python should expect for this function. In the example

below, a should be an int -- integer, b should be a tuple of 2 ints, c should be a List of Lists of anything -- therefore a 2D array of anything,

d is essentially the same as not annotated and can

by anything, and e should be a float. e is also set to 1.0 if nothing is passed in for it,

i.e.:

my_function(1, (2, 3), [['a', 'b'], [None, my_class], [[]]], ('h', 1))The above call fits the type annotations, and doesn't pass anything in for e. Type annotations are meant to be an adddition to the docstrings to help you know what the functions are working with. Python itself doesn't enforce these. When writing your own functions, it is up to you if you want to annotate your types; they may be helpful to keep organized or not something you want to spend time on.

Q1 (3 pts): Finding a Fixed Food Dot using Depth First Search

In searchAgents.py, you'll find a fully

implemented SearchAgent, which plans out a path

through Pacman's world and then executes that path step-by-step. The search algorithms for formulating a

plan are not implemented -- that's your job.

First, test that the SearchAgent is working

correctly by running:

python pacman.py -l tinyMaze -p SearchAgent -a fn=tinyMazeSearchThe command above tells the SearchAgent to use

tinyMazeSearch as its search algorithm, which is

implemented in search.py. Pacman should navigate

the maze successfully.

Now it's time to write full-fledged generic search functions to help Pacman plan routes! Pseudocode for the search algorithms you'll write can be found in the lecture slides. Remember that a search node must contain not only a state but also the information necessary to reconstruct the path (plan) which gets to that state.

Important note: All of your search functions need to return a list of actions that will lead the agent from the start to the goal. These actions all have to be legal moves (valid directions, no moving through walls).

Important note: Make sure to use the Stack, Queue and PriorityQueue data structures provided to you in util.py! These data structure implementations have

particular properties which are required for compatibility with the autograder.

Hint: Each algorithm is very similar. Algorithms for DFS, BFS, UCS, and A* differ only in the details of how the fringe is managed. So, concentrate on getting DFS right and the rest should be relatively straightforward. Indeed, one possible implementation requires only a single generic search method which is configured with an algorithm-specific queuing strategy. (Your implementation need not be of this form to receive full credit).

Implement the depth-first search (DFS) algorithm in the depthFirstSearch function in search.py. To make your algorithm complete, write the

graph search version of DFS, which avoids expanding any already visited states.

Your code should quickly find a solution for:

python pacman.py -l tinyMaze -p SearchAgent

python pacman.py -l mediumMaze -p SearchAgent

python pacman.py -l bigMaze -z .5 -p SearchAgent

The Pacman board will show an overlay of the states explored, and the order in which they were explored (brighter red means earlier exploration). Is the exploration order what you would have expected? Does Pacman actually go to all the explored squares on his way to the goal?

Hint: If you use a Stack as your data structure,

the solution found by your DFS algorithm for mediumMaze should have a length of 130 (provided you

push successors onto the fringe in the order provided by getSuccessors; you might get 246 if you push them

in the reverse order). Is this a least cost solution? If not, think about what depth-first search is doing

wrong.

Grading: Please run the below command to see if your implementation passes all the autograder test cases.

python autograder.py -q q1

Q2 (3 pts): Breadth First Search

Implement the breadth-first search (BFS) algorithm in the breadthFirstSearch function in search.py. Again, write a graph search algorithm that

avoids expanding any already visited states. Test your code the same way you did for depth-first search.

python pacman.py -l mediumMaze -p SearchAgent -a fn=bfs

python pacman.py -l bigMaze -p SearchAgent -a fn=bfs -z .5

Does BFS find a least cost solution? If not, check your implementation.

Hint: If Pacman moves too slowly for you, try the option -- frameTime 0.

Note: If you've written your search code generically, your code should work equally well for the eight-puzzle search problem without any changes.

python eightpuzzle.py

Grading: Please run the below command to see if your implementation passes all the autograder test cases.

python autograder.py -q q2

Q3 (3 pts): Varying the Cost Function

While BFS will find a fewest-actions path to the goal, we might want to find paths that are "best" in other

senses. Consider mediumDottedMaze and mediumScaryMaze.

By changing the cost function, we can encourage Pacman to find different paths. For example, we can charge more for dangerous steps in ghost-ridden areas or less for steps in food-rich areas, and a rational Pacman agent should adjust its behavior in response.

Implement the uniform-cost graph search algorithm in the uniformCostSearch function in search.py. We encourage you to look through util.py for some data structures that may be useful in

your implementation. You should now observe successful behavior in all three of the following layouts, where

the agents below are all UCS agents that differ only in the cost function they use (the agents and cost

functions are written for you):

python pacman.py -l mediumMaze -p SearchAgent -a fn=ucs

python pacman.py -l mediumDottedMaze -p StayEastSearchAgent

python pacman.py -l mediumScaryMaze -p StayWestSearchAgent

Note: You should get very low and very high path costs for the StayEastSearchAgent and StayWestSearchAgent respectively, due to their

exponential cost functions (see searchAgents.py

for details).

Grading: Please run the below command to see if your implementation passes all the autograder test cases.

python autograder.py -q q3

Q4 (3 pts): A* search

Implement A* graph search in the empty function aStarSearch in search.py. A* takes a heuristic function as an

argument. Heuristics take two arguments: a state in the search problem (the main argument), and the problem

itself (for reference information). The nullHeuristic heuristic function in search.py is a trivial example.

You can test your A* implementation on the original problem of finding a path through a maze to a fixed

position using the Manhattan distance heuristic (implemented already as manhattanHeuristic in searchAgents.py).

python pacman.py -l bigMaze -z .5 -p SearchAgent -a fn=astar,heuristic=manhattanHeuristic

You should see that A* finds the optimal solution slightly faster than uniform cost search (about 549 vs.

620 search nodes expanded in our implementation, but ties in priority may make your numbers differ

slightly). What happens on openMaze for the

various search strategies?

Grading: Please run the below command to see if your implementation passes all the autograder test cases.

python autograder.py -q q4

Q5 (3 pts): Finding All the Corners

The real power of A* will only be apparent with a more challenging search problem. Now, it's time to formulate a new problem and design a heuristic for it.

In corner mazes, there are four dots, one in each corner. Our new search problem is to find the shortest

path through the maze that touches all four corners (whether the maze actually has food there or not). Note

that for some mazes like tinyCorners, the shortest

path does not always go to the closest food first! Hint: the shortest path through tinyCorners takes 28 steps.

Note: Make sure to complete Question 2 before working on Question 5, because Question 5 builds upon your answer for Question 2.

Implement the CornersProblem search problem in

searchAgents.py. You will need to choose a state

representation that encodes all the information necessary to detect whether all four corners have been

reached. Now, your search agent should solve:

python pacman.py -l tinyCorners -p SearchAgent -a fn=bfs,prob=CornersProblem

python pacman.py -l mediumCorners -p SearchAgent -a fn=bfs,prob=CornersProblem

To receive full credit, you need to define an abstract state representation that does not encode irrelevant

information (like the position of ghosts, where extra food is, etc.). In particular, do not use a Pacman

GameState as a search state. Your code will be

very, very slow if you do (and also wrong).

An instance of the CornersProblem class

represents an entire search problem, not a particular state. Particular states are returned by the functions

you write, and your functions return a data structure of your choosing (e.g. tuple, set, etc.) that

represents a state.

Furthermore, while a program is running, remember that many states simultaneously exist, all on the queue

of the search algorithm, and they should be independent of each other. In other words, you should not have

only one state for the entire CornersProblem

object; your class should be able to generate many different states to provide to the search algorithm.

Hint 1: The only parts of the game state you need to reference in your implementation are the starting Pacman position and the location of the four corners.

Hint 2: When coding up getSuccessors,

make sure to add children to your successors list with a cost of 1.

Our implementation of breadthFirstSearch expands

just under 2000 search nodes on mediumCorners.

However, heuristics (used with A* search) can reduce the amount of searching required.

Grading: Please run the below command to see if your implementation passes all the autograder test cases.

python autograder.py -q q5

Q6 (3 pts): Corners Problem: Heuristic

Note: Make sure to complete Question 4 before working on Question 6, because Question 6 builds upon your answer for Question 4.

Implement a non-trivial, consistent heuristic for the CornersProblem in cornersHeuristic.

python pacman.py -l mediumCorners -p AStarCornersAgent -z 0.5

Note: AStarCornersAgent is a shortcut for

-p SearchAgent -a fn=aStarSearch,prob=CornersProblem,heuristic=cornersHeuristic

Admissibility vs. Consistency: Remember, heuristics are just functions that take search states and return numbers that estimate the cost to a nearest goal. More effective heuristics will return values closer to the actual goal costs. To be admissible, the heuristic values must be lower bounds on the actual shortest path cost to the nearest goal (and non-negative). To be consistent, it must additionally hold that if an action has cost c, then taking that action can only cause a drop in heuristic of at most c.

Remember that admissibility isn't enough to guarantee correctness in graph search -- you need the stronger condition of consistency. However, admissible heuristics are usually also consistent, especially if they are derived from problem relaxations. Therefore it is usually easiest to start out by brainstorming admissible heuristics. Once you have an admissible heuristic that works well, you can check whether it is indeed consistent, too. The only way to guarantee consistency is with a proof. However, inconsistency can often be detected by verifying that for each node you expand, its successor nodes are equal or higher in in f-value. Moreover, if UCS and A* ever return paths of different lengths, your heuristic is inconsistent. This stuff is tricky!

Non-Trivial Heuristics: The trivial heuristics are the ones that return zero everywhere (UCS) and the heuristic which computes the true completion cost. The former won't save you any time, while the latter will timeout the autograder. You want a heuristic which reduces total compute time, though for this assignment the autograder will only check node counts (aside from enforcing a reasonable time limit).

Grading: Your heuristic must be a non-trivial non-negative consistent heuristic to receive any points. Make sure that your heuristic returns 0 at every goal state and never returns a negative value. Depending on how few nodes your heuristic expands, you'll be graded:

| Number of nodes expanded | Grade |

|---|---|

| more than 2000 | 0/3 |

| at most 2000 | 1/3 |

| at most 1600 | 2/3 |

| at most 1200 | 3/3 |

Remember: If your heuristic is inconsistent, you will receive no credit, so be careful!

Grading: Please run the below command to see if your implementation passes all the autograder test cases.

python autograder.py -q q6

Q7 (4 pts): Eating All The Dots

Now we'll solve a hard search problem: eating all the Pacman food in as few steps as possible. For this,

we'll need a new search problem definition which formalizes the food-clearing problem: FoodSearchProblem in searchAgents.py (implemented for you). A solution is

defined to be a path that collects all of the food in the Pacman world. For the present assignment,

solutions

do not take into account any ghosts or power pellets; solutions only depend on the placement of walls,

regular food and Pacman. (Of course ghosts can ruin the execution of a solution! We'll get to that in the

next assignment.) If you have written your general search methods correctly, A* with a null heuristic

(equivalent to uniform-cost search) should quickly find an optimal solution to testSearch with no code change on your part (total

cost of 7).

python pacman.py -l testSearch -p AStarFoodSearchAgent

Note: AStarFoodSearchAgent is a shortcut for

-p SearchAgent -a fn=astar,prob=FoodSearchProblem,heuristic=foodHeuristic

You should find that UCS starts to slow down even for the seemingly simple tinySearch. As a reference, our implementation takes

2.5 seconds to find a path of length 27 after expanding 5057 search nodes.

Note: Make sure to complete Question 4 before working on Question 7, because Question 7 builds upon your answer for Question 4.

Fill in foodHeuristic in searchAgents.py with a consistent heuristic

for the FoodSearchProblem. Try your agent on the

trickySearch board:

python pacman.py -l trickySearch -p AStarFoodSearchAgent

Our UCS agent finds the optimal solution in about 13 seconds, exploring over 16,000 nodes.

Any non-trivial non-negative consistent heuristic will receive 1 point. Make sure that your heuristic returns 0 at every goal state and never returns a negative value. Depending on how few nodes your heuristic expands, you'll get additional points:

| Number of nodes expanded | Grade |

|---|---|

| more than 15000 | 1/4 |

| at most 15000 | 2/4 |

| at most 12000 | 3/4 |

| at most 9000 | 4/4 (full credit; medium) |

| at most 7000 | 5/4 (optional extra credit; hard) |

Remember: If your heuristic is inconsistent, you will receive no credit, so be careful! Can you solve mediumSearch in a short time? If so, we're either

very, very impressed, or your heuristic is inconsistent.

Grading: Please run the below command to see if your implementation passes all the autograder test cases.

python autograder.py -q q7

Q8 (3 pts): Suboptimal Search

Sometimes, even with A* and a good heuristic, finding the optimal path through all the dots is hard. In

these cases, we'd still like to find a reasonably good path, quickly. In this section, you'll write an agent

that always greedily eats the closest dot. ClosestDotSearchAgent is implemented for you in searchAgents.py, but it's missing a key function that

finds a path to the closest dot.

Implement the function findPathToClosestDot in

searchAgents.py. Our agent solves this maze

(suboptimally!) in under a second with a path cost of 350:

python pacman.py -l bigSearch -p ClosestDotSearchAgent -z .5

Hint: The quickest way to complete findPathToClosestDot is to fill in the AnyFoodSearchProblem, which is missing its goal test.

Then, solve that problem with an appropriate search function. The solution should be very short!

Your ClosestDotSearchAgent won't always find the

shortest possible path through the maze. Make sure you understand why and try to come up with a small

example where repeatedly going to the closest dot does not result in finding the shortest path for eating

all the dots.

Grading: Please run the below command to see if your implementation passes all the autograder test cases.

python autograder.py -q q8

Submission

In order to submit your assignment, please upload the following files to

Canvas -> Assignments -> Assignment 1: Search search.py and

searchAgents.py. Upload the files in a single zip

file with your UIN as the file name i.e., <your UIN>.zip.

Students will apply the search algorithms and problems implemented in A1 to handle more difficult scenarios that include controlling multiple pacman agents and planning under time constraints.

Overview

In this contest, you will apply the search algorithms and problems implemented in Assignment 1 to handle more difficult scenarios that include controlling multiple pacman agents and planning under time constraints. There is room to bring your own unique ideas, and there is no single set solution. Much looking forward to seeing what you come up with!

Grading

Grading is assigned according to the following key:

- +1 points per staff bot (3 in total) beaten in the final ranking

- +0.1 points per student bot beaten in the final ranking

- 1st place: +2 points

- 2nd and 3rd place: +1.5 points

Quick Start Guide

- Download all the code and supporting files

contest1.zip, unzip it, and change to the directory. - Copy your

search.pyfrom Assignment 1 into the minicontest1 directory (replacing the blanksearch.py). - Go into

myAgents.pyand fill outfindPathToClosestDotinClosestDotAgent, andisGoalStateinAnyFoodSearchProblem. You should be able to copy your solutions from Assignment 1 over. - Run

and you should be able to see 4 pacman agents travelling around the map collecting dots.

python pacman.py - Submit the

myAgents.pyfile to Contest 1 on Canvas.

Important! You only need to submit myAgents.py. If you import from search.py

or searchProblems.py, the autograder will use

the staff version of those files.

![]()

Introduction

The base code is nearly identical to Assignment 1, but with some minor modifications to include support for more than one Pacman agent. You can download all the code and supporting files as a zip archive. Some key differences:

- You will control

NPacman agents on the board at a time. Your code must be able to support a multiple number of agents. - There is a cost associate with how long Pacman "thinks" (compute cost). See Scoring for more details.

Files you'll edit:

myAgents.py: What will be submitted. Contains all of the code needed for your agent.

Files you might want to look at:

searchProblems.py: The same code as in A1, with some slight modifications.search.py: The same code as in A1.pacman.py: The main file that runs Pacman games. This file describes a Pacman GameState type, which you use in this contest.

Files to Edit and Submit: You will fill and submit myAgents.py

Evaluation: Your code will be autograded for technical correctness. Please do not change the names of any provided functions or classes within the code, or you will wreak havoc on the autograder. However, the correctness of your implementation -- not the autograder's judgements -- will be the final judge of your score. If necessary, we will review and grade assignments individually to ensure that you receive due credit for your work.

Academic Dishonesty: We will be checking your code against other submissions in the class for logical redundancy. If you copy someone else's code and submit it with minor changes, we will know. These cheat detectors are quite hard to fool, so please don't try. We trust you all to submit your own work only; please don't let us down. If you do, we will pursue the strongest consequences available to us.

Getting Help: You are not alone! If you find yourself stuck on something, contact the course staff for help. Office hours and the course Campuswire are there for your support; please use them. We want these assignments to be rewarding and instructional, not frustrating and demoralizing.

Discussion: Please be careful not to post spoilers.

Rules

Layout

There are a variety of layout in the layouts

directory. Agents will be exposed to a variety

of maps of different sizes and amounts of food.

Scoring

The scoring from Assignment 1 is maintained, with a few modifications

Kept from Assignment 1

- +10 for each food pellet eaten

- +500 for collecting all food pellets

Modifications

- -0.4 for each action taken (Assignment 1 penalized -1)

- -1 *

total compute used to calculate next action (in seconds)* 1000

Each agent also starts with 100 points.

Observations

Each agent can see the entire state of the game, such as

food pellet locations, all pacman locations, etc. See the GameState

section for more details.

Winning and Losing

Win: You win if you collect all food pellets. Your score is the current amount of points.

Lose: You lose if your score reaches zero. This can be caused by not finding pellets quickly enough, or spending too much time on compute. Your score for this game is zero. If your agent crashes, it automatically receives a score of zero.

Designing Agents

File Format

You should include your agents in a file of the same format as myAgents.py. Your agents must

be completely contained in this one file. Though, you may use the functions in

search.py.

Interface

The GameState in pacman.py should look familiar, but contains some

modifications to support multiple Pacman agents. The major change to note is that many GameState methods

now have an extra argument, agentIndex, which is

to identify which Pacman agent it needs. For

example, state.getPacmanPosition(0) will get the

position of the first pacman agent. For more

information,

see the GameState class inpacman.py.

Agent

To get started designing your own agent, we recommend subclassing the

Agent class in game.py

(this has already been done by default). This provides to one important variable, self.index,

which is the agentIndex of the current agent.

For example, if we have 2 agents, each agent

will be created with a unique index, [MyAgent(index=0), MyAgent(index=1)], that can be

used

when deciding on actions.

The autograder will call the createAgents

function to create your team of pacman. By

default, it is set to create N identical pacmen,

but you may modify the code to return a

diverse team of multiple types of agents

Getting Started

By default, the game runs with the ClosestDotAgent implemented in the Quick Start Guide. To run your own agent, change agent for

the createAgents

method in myAgents.py

python pacman.pyA wealth of options are available to you:

python pacman.py --helpTo run a game with your agent, do:

python pacman.py --pacman myAgents.pyLayouts

The layouts folder contains all of the test

cases that will be executed on the autograder. To see how you perform on

any single map, you can run

python pacman.py --layout test1.layTesting

You can run you agent on a single test by using the following command. See the layouts

folder for the full suite of tests. There are no hidden tests

python pacman.py --layout test1.layYou can run the autograder by running the command below. Your score may vary from the autograder due to differences between machines, as well as staff vs student search implementations.

python autograder.py --pacman myAgents.pyClassic Pacman is modeled as both an adversarial and a stochastic search problem. Students implement multiagent minimax and expectimax algorithms, as well as designing evaluation functions.

In this assignment, you will design agents for the classic version of Pacman, including ghosts. Along the way, you will implement both minimax and expectimax search and try your hand at evaluation function design.

The code base has not changed much from the previous assignment, but please start with a fresh installation, rather than intermingling files from assignment 1.

As in assignment 1, this assignment includes an autograder for you to grade your answers on your machine. This can be run on all questions with the command:

python autograder.py

It can be run for one particular question, such as q2, by:

python autograder.py -q q2

It can be run for one particular test by commands of the form:

python autograder.py -t test_cases/q2/0-small-tree

By default, the autograder displays graphics with the -t option, but doesn't with the -q option. You can force graphics by using the --graphics flag, or force no graphics by using the

--no-graphics flag.

See the autograder tutorial in Assignment 0 for more information about using the autograder.

The code for this assignment contains the following files, available as a zip archive.

| Files you'll edit: | |

multiAgents.py |

Where all of your multi-agent search agents will reside. |

| Files you might want to look at: | |

pacman.py |

The main file that runs Pacman games. This file also describes a Pacman GameState type, which you will use extensively in this assignment. |

game.py |

The logic behind how the Pacman world works. This file describes several supporting types like AgentState, Agent, Direction, and Grid. |

util.py |

Useful data structures for implementing search algorithms. You don't need to use these for this assignment, but may find other functions defined here to be useful. |

| Supporting files you can ignore: | |

graphicsDisplay.py |

Graphics for Pacman |

graphicsUtils.py |

Support for Pacman graphics |

textDisplay.py |

ASCII graphics for Pacman |

ghostAgents.py |

Agents to control ghosts |

keyboardAgents.py |

Keyboard interfaces to control Pacman |

layout.py |

Code for reading layout files and storing their contents |

autograder.py |

Assignment autograder |

testParser.py |

Parses autograder test and solution files |

testClasses.py |

General autograding test classes |

test_cases/ |

Directory containing the test cases for each question |

multiagentTestClasses.py |

Assignment 2 specific autograding test classes |

Files to Edit and Submit: You will fill in portions of multiAgents.py during the assignment. Once you have

completed the assignment, you will submit ONLY these files to Canvas zipped into a single

file.

Evaluation: Your code will be autograded for technical correctness. Please do not change the names of any provided functions or classes within the code, or you will wreak havoc on the autograder. However, the correctness of your implementation -- not the autograder's judgements -- will be the final judge of your score. If necessary, we will review and grade assignments individually to ensure that you receive due credit for your work.

Academic Dishonesty: We will be checking your code against other submissions in the class for logical redundancy. If you copy someone else's code and submit it with minor changes, we will know. These cheat detectors are quite hard to fool, so please don't try. We trust you all to submit your own work only; please don't let us down. If you do, we will pursue the strongest consequences available to us.

Getting Help: You are not alone! If you find yourself stuck on something, contact the course staff for help. Office hours and the course Campuswire are there for your support; please use them. We want these assignments to be rewarding and instructional, not frustrating and demoralizing.

Discussion: Please be careful not to post spoilers.

Welcome to Multi-Agent Pacman

First, play a game of classic Pacman by running the following command:

python pacman.py

and using the arrow keys to move. Now, run the provided ReflexAgent in multiAgents.py

python pacman.py -p ReflexAgent

Note that it plays quite poorly even on simple layouts:

python pacman.py -p ReflexAgent -l testClassic

Inspect its code (in multiAgents.py) and make

sure

you understand what it's doing.

Q1 (4 pts): Reflex Agent

Improve the ReflexAgent in multiAgents.py to play respectably. The provided

reflex

agent code provides some helpful examples of methods that query the GameState for information. A capable reflex agent will

have to consider both food locations and ghost locations to perform well. Your agent should easily and

reliably clear the testClassic layout:

python pacman.py -p ReflexAgent -l testClassic

Try out your reflex agent on the default mediumClassic layout with one ghost or two (and

animation off to speed up the display):

python pacman.py --frameTime 0 -p ReflexAgent -k 1

python pacman.py --frameTime 0 -p ReflexAgent -k 2

How does your agent fare? It will likely often die with 2 ghosts on the default board, unless your evaluation function is quite good.

Note: Remember that newFood has the

function asList()

Note: As features, try the reciprocal of important values (such as distance to food) rather than just the values themselves.

Note: The evaluation function you're writing is evaluating state-action pairs; in later parts of the assignment, you'll be evaluating states.

Note: You may find it useful to view the internal contents of various objects for debugging. You

can

do this by printing the objects' string representations. For example, you can print newGhostStates with print(newGhostStates).

Options: Default ghosts are random; you can also play for fun with slightly smarter directional ghosts

using

-g DirectionalGhost. If the randomness is

preventing

you from telling whether your agent is improving, you can use -f to run with a fixed random seed (same random

choices

every game). You can also play multiple games in a row with -n. Turn off graphics with -q to run lots of games quickly.

Grading: We will run your agent on the openClassic layout 10 times. You will receive 0 points

if your agent times out, or never wins. You will receive 1 point if your agent wins at least 5 times, or 2

points if your agent wins all 10 games. You will receive an additional 1 point if your agent's average score

is greater than 500, or 2 points if it is greater than 1000. You can try your agent out under these

conditions

with

python autograder.py -q q1

To run it without graphics, use:

python autograder.py -q q1 --no-graphics

Don't spend too much time on this question, though, as the meat of the assignment lies ahead.

Q2 (5 pts): Minimax

Now you will write an adversarial search agent in the provided MinimaxAgent class stub in multiAgents.py. Your minimax agent should work with

any

number of ghosts, so you'll have to write an algorithm that is slightly more general than what you've

previously seen in lecture. In particular, your minimax tree will have multiple min layers (one for each

ghost) for every max layer.

Your code should also expand the game tree to an arbitrary depth. Score the leaves of your minimax tree

with

the supplied self.evaluationFunction, which

defaults

to scoreEvaluationFunction. MinimaxAgent extends MultiAgentSearchAgent, which gives access to self.depth and self.evaluationFunction. Make sure your minimax code

makes reference to these two variables where appropriate as these variables are populated in response to

command line options.

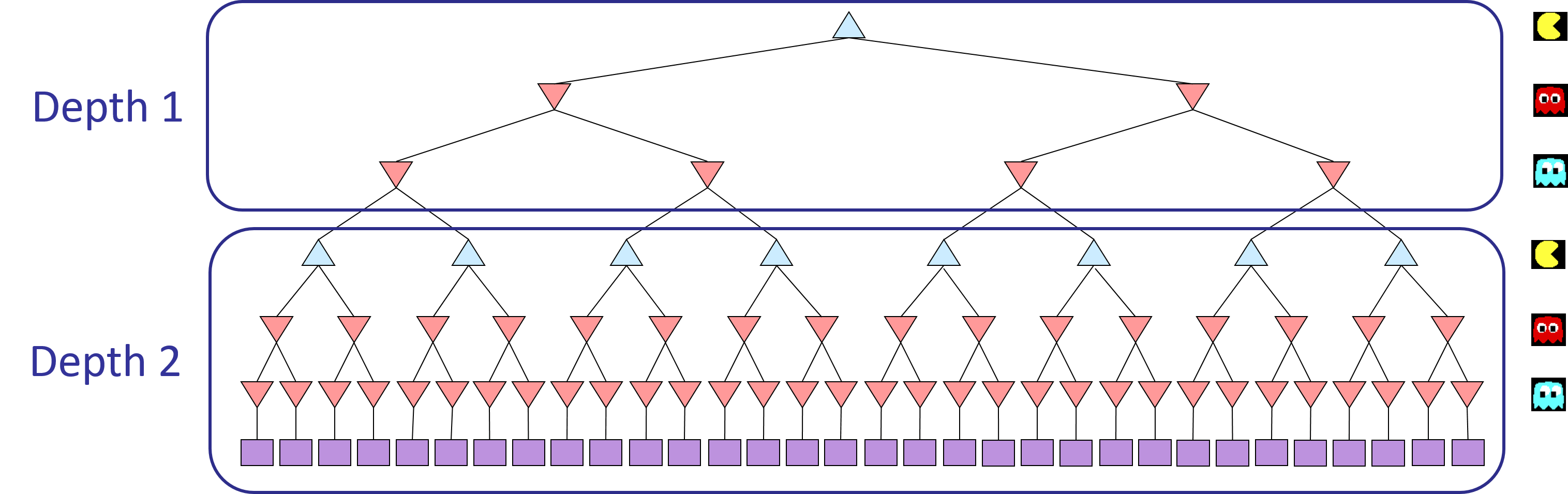

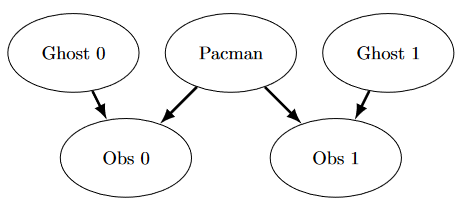

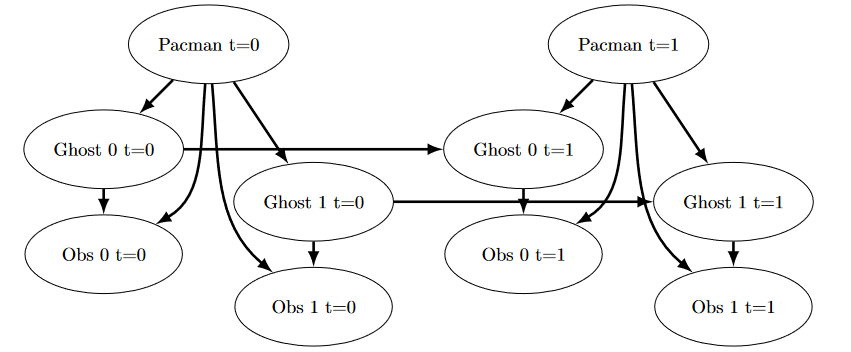

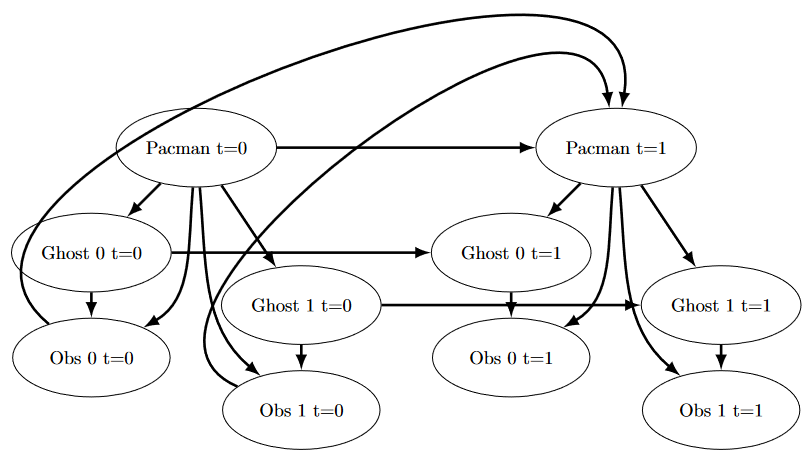

Important: A single search ply is considered to be one Pacman move and all the ghosts' responses, so depth 2 search will involve Pacman and each ghost moving two times (see diagram below).

Grading: We will be checking your code to determine whether it explores the correct number of game

states. This is the only reliable way to detect some very subtle bugs in implementations of minimax. As a

result, the autograder will be very picky about how many times you call GameState.generateSuccessor. If you call it any more

or

less than necessary, the autograder will complain. To test and debug your code, run

python autograder.py -q q2

This will show what your algorithm does on a number of small trees, as well as a pacman game. To run it without graphics, use:

python autograder.py -q q2 --no-graphics

Hints and Observations

- Implement the algorithm recursively using helper function(s).

- The correct implementation of minimax will lead to Pacman losing the game in some tests. This is not a problem: as it is correct behaviour, it will pass the tests.

- The evaluation function for the Pacman test in this part is already written (

self.evaluationFunction). You shouldn't change this function, but recognize that now we're evaluating states rather than actions, as we were for the reflex agent. Look-ahead agents evaluate future states whereas reflex agents evaluate actions from the current state. - The minimax values of the initial state in the

minimaxClassiclayout are 9, 8, 7, -492 for depths 1, 2, 3 and 4 respectively. Note that your minimax agent will often win (665/1000 games for us) despite the dire prediction of depth 4 minimax.python pacman.py -p MinimaxAgent -l minimaxClassic -a depth=4 - Pacman is always agent 0, and the agents move in order of increasing agent index.

- All states in minimax should be

GameStates, either passed in togetActionor generated viaGameState.generateSuccessor. In this assignment, you will not be abstracting to simplified states. - On larger boards such as

openClassicandmediumClassic(the default), you'll find Pacman to be good at not dying, but quite bad at winning. He'll often thrash around without making progress. He might even thrash around right next to a dot without eating it because he doesn't know where he'd go after eating that dot. Don't worry if you see this behavior, question 5 will clean up all of these issues. - When Pacman believes that his death is unavoidable, he will try to end the game as soon as possible

because of the constant penalty for living. Sometimes, this is the wrong thing to do with random ghosts,

but

minimax agents always assume the worst:

python pacman.py -p MinimaxAgent -l trappedClassic -a depth=3Make sure you understand why Pacman rushes the closest ghost in this case.

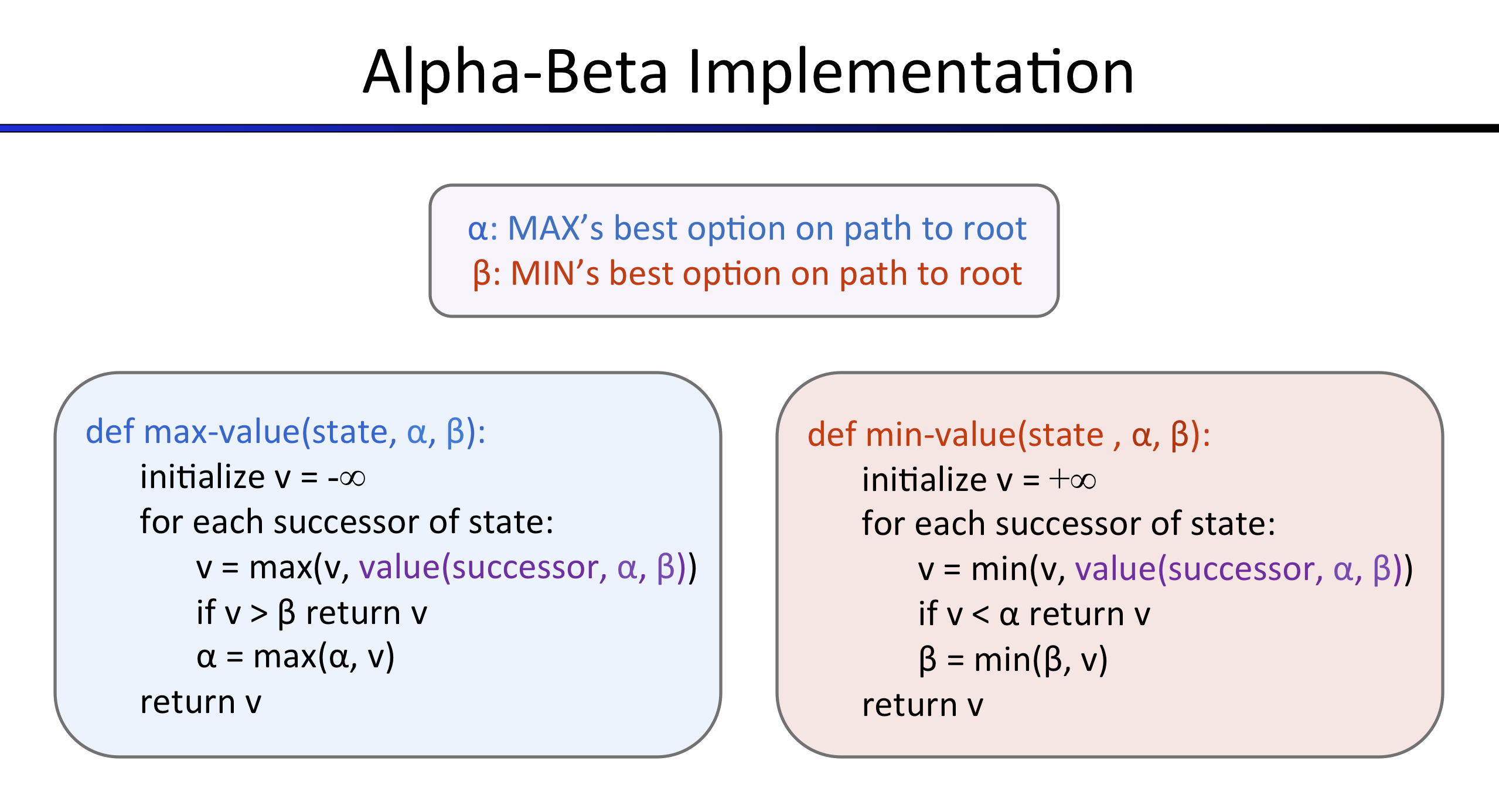

Q3 (5 pts): Alpha-Beta Pruning

Make a new agent that uses alpha-beta pruning to more efficiently explore the minimax tree, in AlphaBetaAgent. Again, your algorithm will be slightly

more general than the pseudocode from lecture, so part of the challenge is to extend the alpha-beta pruning

logic appropriately to multiple minimizer agents.

You should see a speed-up (perhaps depth 3 alpha-beta will run as fast as depth 2 minimax). Ideally, depth

3

on smallClassic should run in just a few seconds

per

move or faster.

python pacman.py -p AlphaBetaAgent -a depth=3 -l smallClassic

The AlphaBetaAgent minimax values should be

identical to the MinimaxAgent minimax values,

although the actions it selects can vary because of different tie-breaking behavior. Again, the minimax

values

of the initial state in the minimaxClassic layout

are 9, 8, 7 and -492 for depths 1, 2, 3 and 4 respectively.

Grading: Because we check your code to determine whether it explores the correct number of states,

it is important that you perform alpha-beta pruning without reordering children. In other words, successor

states should always be processed in the order returned by GameState.getLegalActions. Again, do not call GameState.generateSuccessor more than necessary.

You must not prune on equality in order to match the set of states explored by our autograder. (Indeed, alternatively, but incompatible with our autograder, would be to also allow for pruning on equality and invoke alpha-beta once on each child of the root node, but this will not match the autograder.)

The pseudo-code below represents the algorithm you should implement for this question.

To test and debug your code, run

python autograder.py -q q3

This will show what your algorithm does on a number of small trees, as well as a pacman game. To run it without graphics, use:

python autograder.py -q q3 --no-graphics

The correct implementation of alpha-beta pruning will lead to Pacman losing some of the tests. This is not a problem: as it is correct behaviour, it will pass the tests.

Q4 (5 pts): Expectimax

Minimax and alpha-beta are great, but they both assume that you are playing against an adversary who makes

optimal decisions. As anyone who has ever won tic-tac-toe can tell you, this is not always the case. In this

question you will implement the ExpectimaxAgent,

which is useful for modeling probabilistic behavior of agents who may make suboptimal choices.

As with the search and problems yet to be covered in this class, the beauty of these algorithms is their general applicability. To expedite your own development, we've supplied some test cases based on generic trees. You can debug your implementation on small the game trees using the command:

python autograder.py -q q4

Debugging on these small and manageable test cases is recommended and will help you to find bugs quickly.

Once your algorithm is working on small trees, you can observe its success in Pacman. Random ghosts are of

course not optimal minimax agents, and so modeling them with minimax search may not be appropriate. ExpectimaxAgent will no longer take the min over all

ghost actions, but the expectation according to your agent's model of how the ghosts act. To simplify your

code, assume you will only be running against an adversary which chooses amongst their getLegalActions uniformly at random.

To see how the ExpectimaxAgent behaves in Pacman, run:

python pacman.py -p ExpectimaxAgent -l minimaxClassic -a depth=3

You should now observe a more cavalier approach in close quarters with ghosts. In particular, if Pacman perceives that he could be trapped but might escape to grab a few more pieces of food, he'll at least try. Investigate the results of these two scenarios:

python pacman.py -p AlphaBetaAgent -l trappedClassic -a depth=3 -q -n 10

python pacman.py -p ExpectimaxAgent -l trappedClassic -a depth=3 -q -n 10

You should find that your ExpectimaxAgent wins

about half the time, while your AlphaBetaAgent

always loses. Make sure you understand why the behavior here differs from the minimax case.

The correct implementation of expectimax will lead to Pacman losing some of the tests. This is not a problem: as it is correct behaviour, it will pass the tests.

Q5 (6 pts): Evaluation Function

Write a better evaluation function for Pacman in the provided function betterEvaluationFunction. The evaluation function

should

evaluate states, rather than actions like your reflex agent evaluation function did. With depth 2 search,

your

evaluation function should clear the smallClassic

layout with one random ghost more than half the time and still run at a reasonable rate (to get full credit,

Pacman should be averaging around 1000 points when he's winning).

Grading: the autograder will run your agent on the smallClassic layout 10 times. We will assign points to your evaluation function in the following way:

- If you win at least once without timing out the autograder, you receive 1 points. Any agent not satisfying these criteria will receive 0 points.

- +1 for winning at least 5 times, +2 for winning all 10 times

- +1 for an average score of at least 500, +2 for an average score of at least 1000 (including scores on lost games)

- +1 if your games take on average less than 30 seconds on the autograder machine, when run with

--no-graphics. - The additional points for average score and computation time will only be awarded if you win at least 5 times.

You can try your agent out under these conditions with

python autograder.py -q q5

To run it without graphics, use:

python autograder.py -q q5 --no-graphics

Students will implement an agent for playing the repeated prisoner dilemma game.

Overview

In this contest, you will implement an agent for playing the

repeated prisoner dilemma game. This game has a twist in the form

of uncertainty regarding the reported opponent action from the

previous round. The contest code is available as a zip

archive (contest2.zip).

There is room to bring your own unique ideas, and there is no single set solution. We look forward to seeing what you come up with!

Quick Start Guide

- Download the code (

contest2.zip), unzip it, and change to the directory. - Go into

Players/MyPlayer.pyand fill out your UINs separated by ‘:' (not by ‘,') in the "UIN" field, choose a team name at "your team name", Write your strategy under the "play" function. Your strategy should take into account the opponent's previous action and level of uncertainty regarding the previous action report. - Run

, for each pair of players in the Players folder, 1000 rounds will be played on expectation (there is a 0.001 e^(-0.001) chance of terminating on every round).

python Game.py - Submit

MyPlayer.pyzipped into a single file to Contest 2: Game Theory Contest on Canvas.

Grading

Each submission will be evaluated against all other submissions, including the three staff bots.

Submissions will be ranked based on their accumulated score.

Grading follows this system:

- +1 point for each staff bot beaten in the final ranking (Tough Guy, Nice Guy, Tit for Tat)

- +0.1 points for each student bot beaten in the final ranking

- 1st place: +2 points

- 2nd and 3rd place: +1.5 points

Introduction

You are required to design an agent that plays the prisoner dilemma repeatedly against the same opponent. Your agent should attempt to maximize its utility where. As we saw in class, the Nash equilibrium for this game results in low utility for both players. You should attempt to coordinate a better outcome over repeated rounds.

External libraries: in this contest, you are allowed to use NumPy as a dependency.

| Files you'll edit: | |

MyPlayer.py |

Contains all of the code needed for defining your agent. |

| Files you might want to look at: | |

Player.py |

The abstract class for player (your player must inherit from this class). |

Game.py |

The tournament execution. |

Files to Edit and Submit: You will fill and submit MyPlayer.py.

Academic Dishonesty: We will be checking your code against other submissions in the class for logical redundancy. If you copy someone else's code and submit it with minor changes, we will know. These cheat detectors are quite hard to fool, so please don't try. We trust you all to submit your own work only; please don't let us down. If you do, we will pursue the strongest consequences available to us.

Getting Help: You are not alone! If you find yourself stuck on something, contact the course staff for help. Office hours and the course Campuswire are there for your support; please use them. If you can't make our office hours, let us know and we will schedule more. We want these contests to be rewarding and instructional, not frustrating and demoralizing. But, we don't know when or how to help unless you ask.

Discussion: Please be careful not to post spoilers.

Rules

Layout

Once you run Game.py, a tournament will take place during which every pair of players, defined in the Players directory, will face each other. Each match will consist of 1000 rounds in expectancy.

Scoring

For each round in a single match you will receive -1 (i.e., 1 year in jail) points if you confess while your opponent is silent, -4 if you both confess, -5 if you are silent while your opponent confesses, and -2 if you both keep silent. Your average score from a single match will be summed over all matches to determine your overall ranking. Your final score will be determined based on your overall rank. Based to the grading policy provided above.

Designing Agents

File Format

You should include your agents in a file of the same format as MyPlayer.py. Your agents must

be completely contained in this one file.

Agent

Your agent should attempt to exploit naïve opponents, not being exploited by aggressive opponent, and encourage opponent cooperation when appropriate. The best strategy depends on the strategy implemented by your opponent. Try to design an agent that can adapt to different opponents. Remember that your goal is not to win single matches but to gain the maximal score over all matches. That is, even if you are able to outperform the staff bots in a single match, you might still get an overall score that is lower because the overall scores are summed over all matches.

Getting Started

Implement a simple agent. For instance, one that always returns

"confess". Now run Game.py. A tournament will

run matching your

player with all three staff bots. The tournament outcome (final

scores) will be written to results.csv. You

should now attempt to

improve your agent's strategy to the best of your understanding.

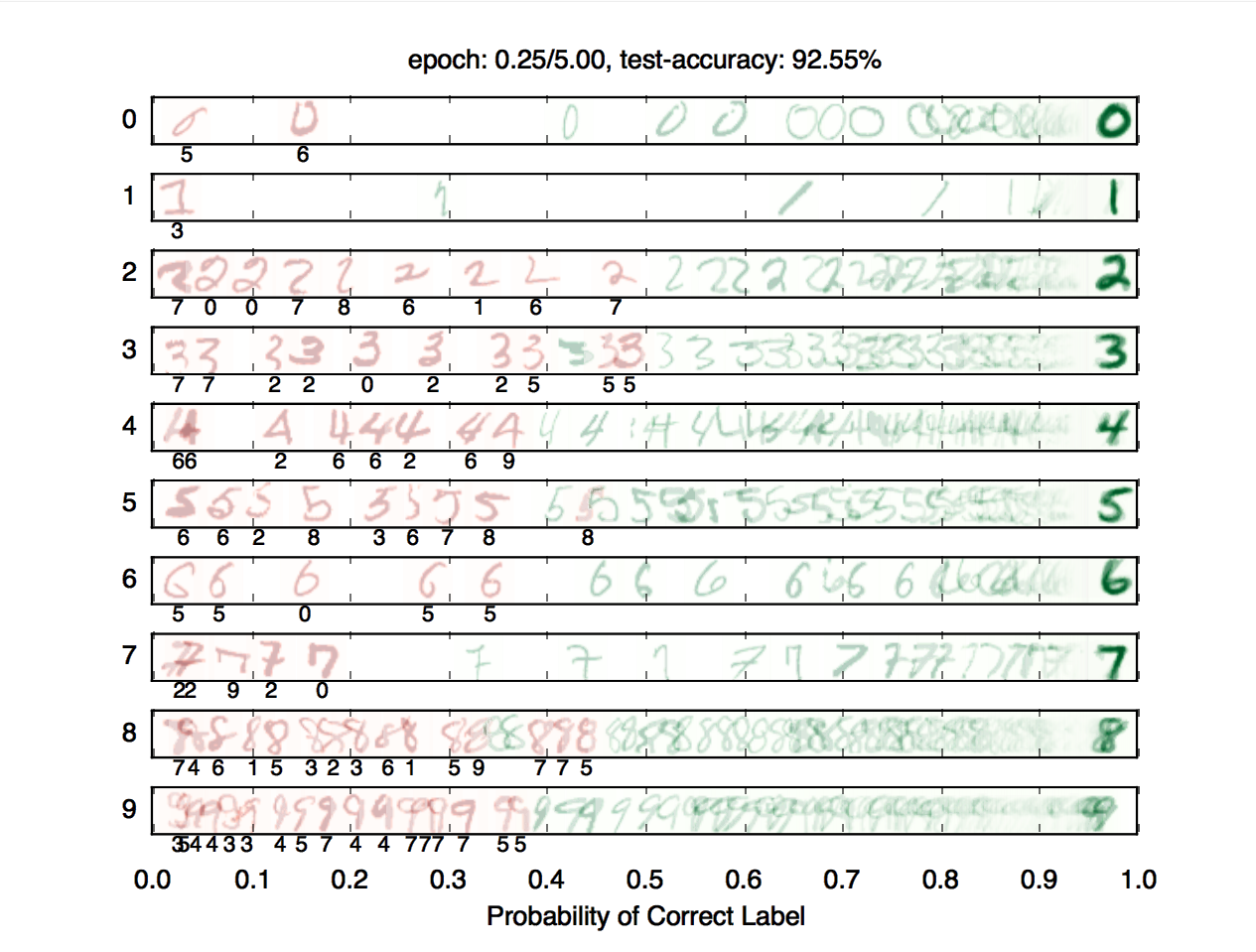

Students implement Value Function, Q learning, and Approximate Q learning to help pacman and crawler agents learn rational policies.

In this assignment, you will implement value iteration and Q-learning. You will test your agents first on Gridworld (from class), then apply them to a simulated robot controller (Crawler) and Pacman.

As in previous assignments, this assignment includes an autograder for you to grade your solutions on your machine. This can be run on all questions with the command:

python autograder.py

It can be run for one particular question, such as q2, by:

python autograder.py -q q2

It can be run for one particular test by commands of the form:

python autograder.py -t test_cases/q2/1-bridge-grid

The code for this assignment contains the following files, available as a zip archive.

| Files you'll edit: | |

valueIterationAgents.py |

A value iteration agent for solving known MDPs. |

qlearningAgents.py |

Q-learning agents for Gridworld, Crawler and Pacman. |

analysis.py |

A file to put your answers to questions given in the assignment. |

| Files you might want to look at: | |

mdp.py |

Defines methods on general MDPs. |

learningAgents.py |

Defines the base classes ValueEstimationAgent and QLearningAgent, which

your agents will extend. |

util.py |

Utilities, including util.Counter, which is particularly useful for Q-learners. |

gridworld.py |

The Gridworld implementation. |

featureExtractors.py |

Classes for extracting features on (state, action) pairs. Used for the approximate Q-learning

agent (in qlearningAgents.py). |

| Supporting files you can ignore: | |

environment.py |

Abstract class for general reinforcement learning environments. Used by gridworld.py.

|

graphicsGridworldDisplay.py |

Gridworld graphical display. |

graphicsUtils.py |

Graphics utilities. |

textGridworldDisplay.py |

Plug-in for the Gridworld text interface. |

crawler.py |

The crawler code and test harness. You will run this but not edit it. |

graphicsCrawlerDisplay.py |

GUI for the crawler robot. |

autograder.py |

Assignment autograder |

testParser.py |

Parses autograder test and solution files |

testClasses.py |

General autograding test classes |

test_cases/ |

Directory containing the test cases for each question |

reinforcementTestClasses.py |

Assignment 3 specific autograding test classes |

Files to Edit and Submit: You will fill in portions of valueIterationAgents.py, qlearningAgents.py, and analysis.py during the assignment. Once you have

completed the assignment, you will submit ONLY these files to Canvas zipped into a single

file.

Please DO NOT change/submit any other files in this distribution.

Evaluation: Your code will be autograded for technical correctness. Please do not change the names of any provided functions or classes within the code, or you will wreak havoc on the autograder. However, the correctness of your implementation – not the autograder's judgements – will be the final judge of your score. If necessary, we will review and grade assignments individually to ensure that you receive due credit for your work.

Academic Dishonesty: We will be checking your code against other submissions in the class for logical redundancy. If you copy someone else's code and submit it with minor changes, we will know. These cheat detectors are quite hard to fool, so please don't try. We trust you all to submit your own work only; please don't let us down. If you do, we will pursue the strongest consequences available to us.

Getting Help: You are not alone! If you find yourself stuck on something, contact the course staff for help. Office hours and the course Campuswire are there for your support; please use them. We want these assignments to be rewarding and instructional, not frustrating and demoralizing.

Discussion: Please be careful not to post spoilers.

MDPs

To get started, run Gridworld in manual control mode, which uses the arrow keys:

python gridworld.py -m

You will see the two-exit layout from class. The blue dot is the agent. Note that when you press up, the agent only actually moves north 80% of the time. Such is the life of a Gridworld agent!

You can control many aspects of the simulation. A full list of options is available by running:

python gridworld.py -h

The default agent moves randomly

python gridworld.py -g MazeGrid

You should see the random agent bounce around the grid until it happens upon an exit. Not the finest hour for an AI agent.

Note: The Gridworld MDP is such that you first must enter a pre-terminal state (the double boxes shown in

the GUI) and then take the special ‘exit' action before the episode actually ends (in the true terminal

state called TERMINAL_STATE, which is not shown

in

the GUI). If you run an episode manually, your total return may be less than you expected, due to the

discount rate (-d to change; 0.9 by default).

Look at the console output that accompanies the graphical output (or use -t for all text). You will be told about each

transition the agent experiences (to turn this off, use -q).

As in Pacman, positions are represented by (x, y)

Cartesian coordinates and any arrays are indexed by [x][y], with 'north' being the direction of increasing y, etc. By default, most transitions will receive a

reward of zero, though you can change this with the living reward option (-r).

Q1 (5 points): Value Iteration

Recall the value iteration state update equation:

\[V_{k+1}(s) \leftarrow \max_a \sum_{s'} T(s,a,s')\left[R(s,a,s') + \gamma V_k(s')\right]\]Write a value iteration agent in ValueIterationAgent, which has been partially

specified for you in valueIterationAgents.py.

Your

value iteration agent is an offline planner, not a reinforcement learning agent, and so the relevant

training option is the number of iterations of value iteration it should run (option -i) in its initial planning phase. ValueIterationAgent takes an MDP on construction and

runs value iteration for the specified number of iterations before the constructor returns.

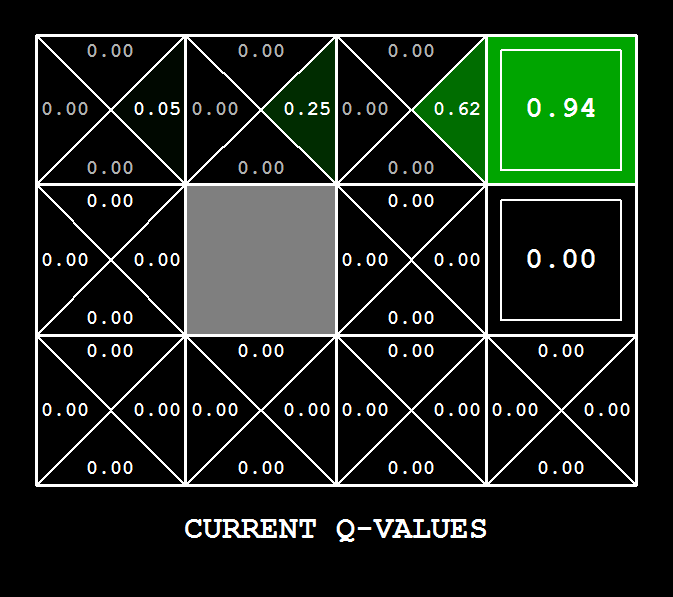

Value iteration computes \(k\)-step estimates of the optimal values, \(V_k\). In addition to runValueIteration, implement the following methods

for

ValueIterationAgent using \(V_k\):

computeActionFromValues(state)computes the best action according to the value function given by self.values.computeQValueFromValues(state, action)returns the Q-value of the (state, action) pair given by the value function given byself.values.

These quantities are all displayed in the GUI: values are numbers in squares, Q-values are numbers in square quarters, and policies are arrows out from each square.

Important: Use the "batch" version of value iteration where each vector \(V_k\) is computed from a fixed vector \(V_{k−1}\) (like in lecture), not the "online" version where one single weight vector is updated in place. This means that when a state's value is updated in iteration \(k\) based on the values of its successor states, the successor state values used in the value update computation should be those from iteration \(k−1\) (even if some of the successor states had already been updated in iteration \(k\)). The difference is discussed in Sutton & Barto in Chapter 4.1 on page 91.

Note: A policy synthesized from values of depth \(k\) (which reflect the next \(k\) rewards) will actually reflect the next \(k+1\) rewards (i.e. you return \(\pi_{k+1}\)). Similarly, the Q-values will also reflect one more reward than the values (i.e. you return \(Q_{k+1}\)).

You should return the synthesized policy \(\pi_{k+1}\).

Hint: You may optionally use the util.Counter class in util.py, which is a dictionary with a default value

of

zero. However, be careful with argMax: the

actual

argmax you want may be a key not in the counter!

Note: Make sure to handle the case when a state has no available actions in an MDP (think about what this means for future rewards).

To test your implementation, run the autograder:

python autograder.py -q q1

The following command loads your ValueIterationAgent, which will compute a policy and

execute it 10 times. Press a key to cycle through values, Q-values, and the simulation. You should find

that

the value of the start state (V(start), which

you

can read off of the GUI) and the empirical resulting average reward (printed after the 10 rounds of

execution finish) are quite close.

python gridworld.py -a value -i 100 -k 10

Hint: On the default BookGrid, running

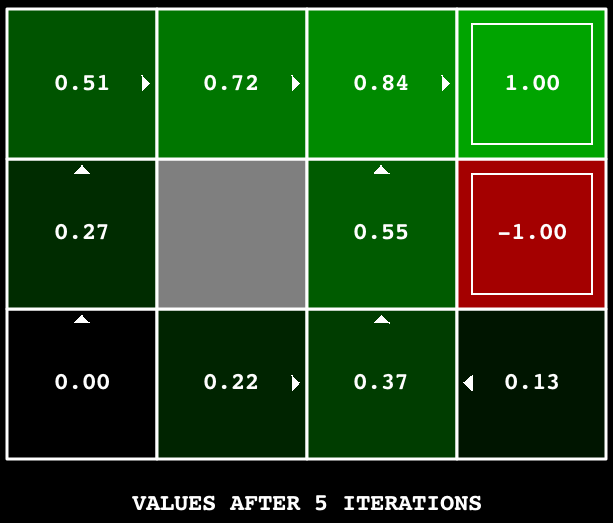

value iteration for 5 iterations should give you this output:

python gridworld.py -a value -i 5

Grading: Your value iteration agent will be graded on a new grid. We will check your values, Q-values, and policies after fixed numbers of iterations and at convergence (e.g. after 100 iterations).

Q2 (1 point): Bridge Crossing Analysis

BridgeGrid is a grid world map with the a

low-reward terminal state and a high-reward terminal state separated by a narrow "bridge", on either side

of

which is a chasm of high negative reward. The agent starts near the low-reward state. With the default

discount of 0.9 and the default noise of 0.2, the optimal policy does not cross the bridge. Change only

ONE

of the discount and noise parameters so that the optimal policy causes the agent to attempt to cross the

bridge. Put your answer in question2() of analysis.py. (Noise refers to how often an agent

ends

up in an unintended successor state when they perform an action.) The default corresponds to:

python gridworld.py -a value -i 100 -g BridgeGrid --discount 0.9 --noise 0.2

Grading: We will check that you only changed one of the given parameters, and that with this change, a correct value iteration agent should cross the bridge. To check your answer, run the autograder:

python autograder.py -q q2

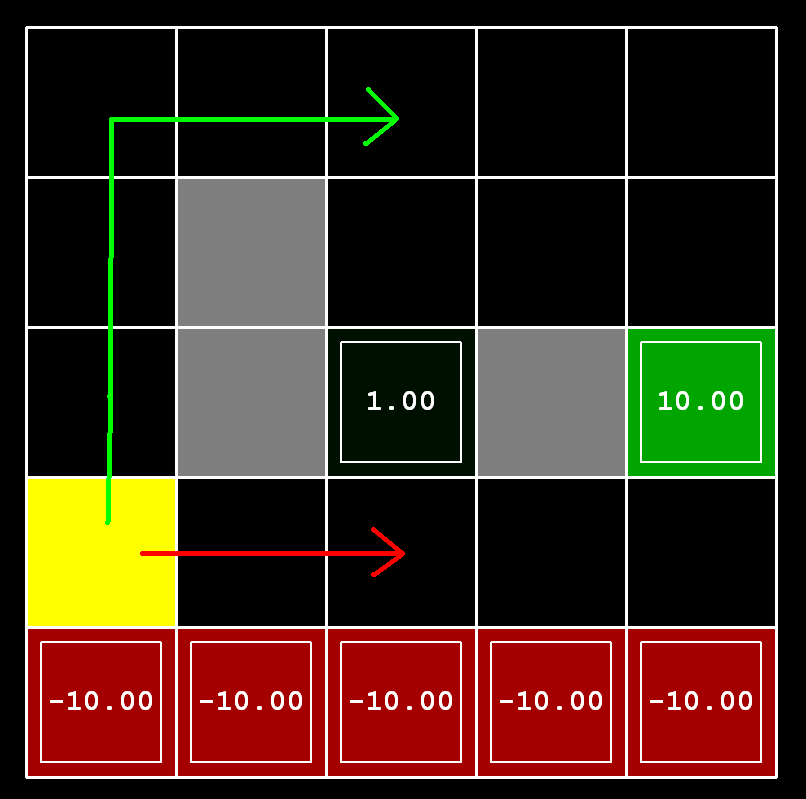

Q3 (6 points): Policies

Consider the DiscountGrid layout, shown below.

This grid has two terminal states with positive payoff (in the middle row), a close exit with payoff +1

and

a distant exit with payoff +10. The bottom row of the grid consists of terminal states with negative

payoff

(shown in red); each state in this "cliff" region has payoff -10. The starting state is the yellow square.

We distinguish between two types of paths: (1) paths that "risk the cliff" and travel near the bottom row

of

the grid; these paths are shorter but risk earning a large negative payoff, and are represented by the red

arrow in the figure below. (2) paths that "avoid the cliff" and travel along the top edge of the grid.

These

paths are longer but are less likely to incur huge negative payoffs. These paths are represented by the

green arrow in the figure below.

In this question, you will choose settings of the discount, noise, and living reward parameters for this

MDP to produce optimal policies of several different types. Your setting of the parameter values

for

each part should have the property that, if your agent followed its optimal policy without being subject

to any noise, it would exhibit the given behavior. If a particular behavior is not achieved for

any setting of the parameters, assert that the policy is impossible by returning the string 'NOT POSSIBLE'.

Here are the optimal policy types you should attempt to produce:

- Prefer the close exit (+1), risking the cliff (-10)

- Prefer the close exit (+1), but avoiding the cliff (-10)

- Prefer the distant exit (+10), risking the cliff (-10)