FACIAL ACTION CODING SYSTEM WITH BLENDSHAPES

Zachary Thoms

CSCE 489 Computer Animation Final Project

Inspiration: This project was largely inspired by project 3 and the iMotions website. I found these topics the most interesting in the course, and I felt most like an animator while working on that project. I was also intrigued by how delta vectors are applied to a base mesh to implement the blendshape concept, so that is the area I wanted to explore in more depth for my final project.

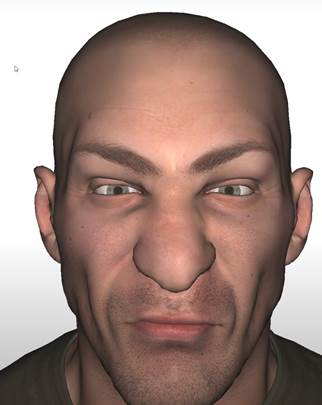

Techniques: The primary technique I explored and implanted was blendshapes. Blendshapes are basically different poses of a base mesh (that has a base blenshape)

that are combined in different ways to create various animations. My primary use for blendshapes was to implement the facial action coding system as shown on

|

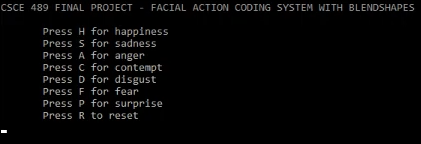

IMOTIONS to ultimately display 7 different human emotions, and then tie them to various hotkeys to switch between in my c++ project. The 7 different emotions

included happiness, sadness, contempt, fear, disgust, anger and surprise and each were comprised of various “action units” which are essentially individual muscle

movements in the face.
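To make the blendshape idea concrete, here is a minimal C++ sketch of delta-based blending. It is not the project's actual code. It assumes a mesh is just a list of vertex positions, and the names `Vec3`, `Mesh`, `makeDelta`, and `blend` are hypothetical. Each sculpted pose is stored as a per-vertex delta from the base mesh, and the displayed face is the base plus a weighted sum of those deltas.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical minimal types: a mesh is only a list of vertex positions.
using Vec3 = std::array<float, 3>;
using Mesh = std::vector<Vec3>;

// Delta blendshape: per-vertex offset of a sculpted pose from the base mesh.
Mesh makeDelta(const Mesh& base, const Mesh& target) {
    assert(base.size() == target.size());
    Mesh delta(base.size());
    for (std::size_t v = 0; v < base.size(); ++v) {
        for (std::size_t k = 0; k < 3; ++k) {
            delta[v][k] = target[v][k] - base[v][k];
        }
    }
    return delta;
}

// Blended mesh: base + sum over i of weights[i] * deltas[i].
Mesh blend(const Mesh& base,
           const std::vector<Mesh>& deltas,
           const std::vector<float>& weights) {
    assert(deltas.size() == weights.size());
    Mesh out = base;
    for (std::size_t i = 0; i < deltas.size(); ++i) {
        assert(deltas[i].size() == base.size());
        for (std::size_t v = 0; v < base.size(); ++v) {
            for (std::size_t k = 0; k < 3; ++k) {
                out[v][k] += weights[i] * deltas[i][v][k];
            }
        }
    }
    return out;
}
```

With every weight at 0 the result is the base mesh, and with a single weight at 1 it reproduces that sculpted pose exactly. Intermediate weights give a partial expression, and several nonzero weights combine poses.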

As secondary goals, I wanted to incorporate eye movement and jaw movement to further enhance the realism of each emotion displayed on the face. I could not find a way to manipulate the jaw that looked realistic enough, so I cut jaw movement from the project. The eyes, however, are essentially just spheres, so they were easy to move with each expression, which gave a lot more life to each face.

To make this all happen, I used assignment 3 as my guide and starting point. My first step was to use Maya to create each of the blendshape poses on the Victor model from Faceware Technologies. Once all of the poses were created, I used the same methods from assignment 3 to import the meshes and render them on screen with OpenGL. I started with the base mesh and assigned a hotkey to each emotion, which allows the user to freely view each one.
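The hotkey step could be sketched as a lookup table from a key to a set of action-unit weights. This is only an illustration under stated assumptions. The key bindings (`'1'`, `'2'`), the names `Expression`, `makeHotkeyTable`, and `weightsForKey`, and the idea of one blendshape per action unit are all hypothetical. The two action-unit sets shown (AU6 + AU12 for happiness, AU1 + AU4 + AU15 for sadness) are commonly cited FACS combinations and may differ from the poses actually sculpted for this project.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// One expression is a named set of FACS action-unit (AU) weights.
struct Expression {
    std::string name;
    std::map<int, float> auWeights;  // AU number -> blend weight in [0, 1]
};

// Hypothetical key bindings. AU sets are commonly cited combinations,
// not necessarily the ones used in the project.
std::map<char, Expression> makeHotkeyTable() {
    std::map<char, Expression> table;
    // AU6 cheek raiser, AU12 lip corner puller
    table['1'] = Expression{"happiness", {{6, 1.0f}, {12, 1.0f}}};
    // AU1 inner brow raiser, AU4 brow lowerer, AU15 lip corner depressor
    table['2'] = Expression{"sadness", {{1, 1.0f}, {4, 1.0f}, {15, 1.0f}}};
    return table;
}

// Build a weight vector ordered like auOrder (one entry per AU blendshape).
// An unbound key yields all zeros, i.e. the neutral base mesh.
std::vector<float> weightsForKey(const std::map<char, Expression>& table,
                                 char key,
                                 const std::vector<int>& auOrder) {
    std::vector<float> weights(auOrder.size(), 0.0f);
    auto expr = table.find(key);
    if (expr == table.end()) {
        return weights;
    }
    for (std::size_t i = 0; i < auOrder.size(); ++i) {
        auto au = expr->second.auWeights.find(auOrder[i]);
        if (au != expr->second.auWeights.end()) {
            assert(au->second >= 0.0f && au->second <= 1.0f);
            weights[i] = au->second;
        }
    }
    return weights;
}
```

In a keyboard callback, the pressed key would be passed to `weightsForKey`, and the resulting weight vector would drive the blendshape weights for the next frame.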


References:

https://imotions.com/blog/facial-action-coding-system/

https://people.engr.tamu.edu/sueda/courses/CSCE489/2021F/assignments/A3/index.html

https://facewaretech.com/learn/free-assets/

Video Demo:

https://www.youtube.com/watch?v=eBV652qIm5w