Environment mapping is an image-based lighting technique in which we approximate the appearance of a reflective or refractive surface using a texture image. In a 3D world, this can be done using a type of texture called a cubemap.

What are cubemaps?

A cubemap is essentially a box made out of six 2D textures, which can be provided to the program or generated at run-time.

A cubemap built from provided textures is called a static cubemap, whereas one whose textures are generated at run-time is called a dynamic cubemap.

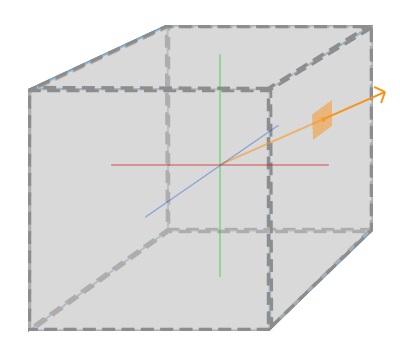

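After the cubemap is provided to the program, the fragment shader computes a normalized direction vector for each fragment (for a mirror-like surface, the view direction reflected about the surface normal). Picture that vector starting at the center of the cube: the texel where it meets the cube's surface supplies the color of the object's fragment. The image below illustrates this concept.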
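To make the lookup concrete, here is a minimal C sketch of how a cubemap lookup turns a direction vector into one face plus a pair of 2D texture coordinates. It assumes OpenGL's face order (+X, -X, +Y, -Y, +Z, -Z) and the major-axis selection table from the OpenGL specification; the names `cube_lookup` and `CubeLookup` are hypothetical, and on real hardware this step happens inside the texture unit when the shader samples the cubemap.

```c
#include <assert.h>

/* Face indices in OpenGL's order: +X, -X, +Y, -Y, +Z, -Z. */
enum { FACE_POS_X, FACE_NEG_X, FACE_POS_Y, FACE_NEG_Y, FACE_POS_Z, FACE_NEG_Z };

typedef struct {
    int face;    /* which of the six faces the direction hits */
    float s, t;  /* 2D texture coordinates on that face, each in [0, 1] */
} CubeLookup;

static float absf(float v) { return v < 0.0f ? -v : v; }

/* Picks the face whose axis has the largest absolute component (the
 * "major axis"), then projects the other two components onto that face,
 * following the major-axis table in the OpenGL specification. */
static CubeLookup cube_lookup(float rx, float ry, float rz) {
    float ax = absf(rx), ay = absf(ry), az = absf(rz);
    float sc, tc, ma;
    CubeLookup out;

    /* A zero vector has no direction, so it cannot select a face. */
    assert(ax > 0.0f || ay > 0.0f || az > 0.0f);

    if (ax >= ay && ax >= az) {
        out.face = rx > 0.0f ? FACE_POS_X : FACE_NEG_X;
        sc = rx > 0.0f ? -rz : rz;
        tc = -ry;
        ma = ax;
    } else if (ay >= az) {
        out.face = ry > 0.0f ? FACE_POS_Y : FACE_NEG_Y;
        sc = rx;
        tc = ry > 0.0f ? rz : -rz;
        ma = ay;
    } else {
        out.face = rz > 0.0f ? FACE_POS_Z : FACE_NEG_Z;
        sc = rz > 0.0f ? rx : -rx;
        tc = -ry;
        ma = az;
    }
    /* Map from [-1, 1] on the face to [0, 1] texture space. */
    out.s = 0.5f * (sc / ma + 1.0f);
    out.t = 0.5f * (tc / ma + 1.0f);
    return out;
}
```

Because the face coordinates are divided by the major-axis component, scaling the direction vector does not change the result, so `cube_lookup(2, 0, -2)` selects the same face and texel as `cube_lookup(1, 0, -1)`.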

Creating a static cubemap

In OpenGL, there is a enum called

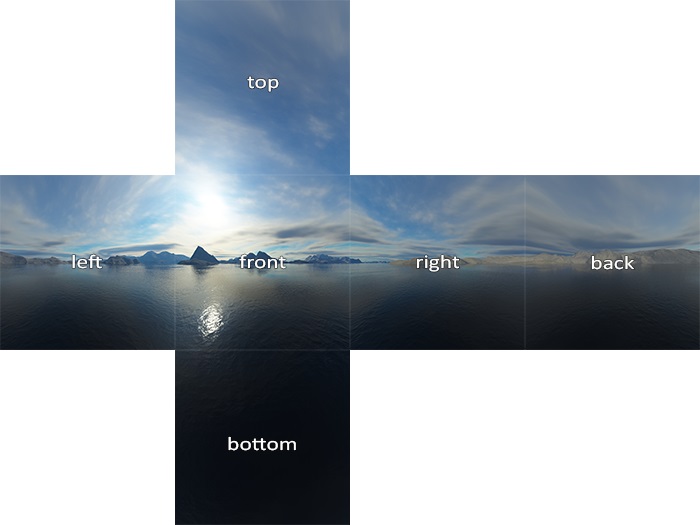

GL_TEXTURE_CUBE_MAP. When binded to newly generated texture, this informs OpenGL that we're going to create a cubemap. We assign the images to each side of the cubemap using the

GL_TEXTURE_CUBE_MAP_POSITIVE_X enum, where positive x is the image on the "right" face of the cube, as shown in the following picture. We can get the other sides of the face by adding into our

GL_TEXTURE_CUBE_MAP_POSITIVE_X enum.
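Because the face targets are consecutive, a loading loop can address face `i` as `GL_TEXTURE_CUBE_MAP_POSITIVE_X + i`. The C sketch below checks that arithmetic without needing an OpenGL context: the enum values are copied from the standard OpenGL headers, while `face_target`, `print_upload_plan`, and the image file names are hypothetical. The `glTexImage2D` call it stands in for appears only in a comment.

```c
#include <assert.h>
#include <stdio.h>

/* Values as defined in the OpenGL headers; copied here so the sketch
 * compiles without an OpenGL context or loader. */
#define GL_TEXTURE_CUBE_MAP            0x8513
#define GL_TEXTURE_CUBE_MAP_POSITIVE_X 0x8515
#define GL_TEXTURE_CUBE_MAP_NEGATIVE_X 0x8516
#define GL_TEXTURE_CUBE_MAP_POSITIVE_Y 0x8517
#define GL_TEXTURE_CUBE_MAP_NEGATIVE_Y 0x8518
#define GL_TEXTURE_CUBE_MAP_POSITIVE_Z 0x8519
#define GL_TEXTURE_CUBE_MAP_NEGATIVE_Z 0x851A

/* Hypothetical image files, one per face, listed in the same +X..-Z order. */
static const char *const face_files[6] = {
    "right.png", "left.png", "top.png", "bottom.png", "front.png", "back.png"
};

/* Face i's upload target is POSITIVE_X + i because the six enums are consecutive. */
static unsigned face_target(int i) {
    assert(i >= 0 && i < 6);
    return GL_TEXTURE_CUBE_MAP_POSITIVE_X + (unsigned)i;
}

static void print_upload_plan(void) {
    for (int i = 0; i < 6; ++i) {
        /* With a real context, after glBindTexture(GL_TEXTURE_CUBE_MAP, tex),
         * this is where glTexImage2D(face_target(i), 0, GL_RGB, w, h, 0,
         * GL_RGB, GL_UNSIGNED_BYTE, pixels) would upload face_files[i]. */
        printf("0x%X <- %s\n", face_target(i), face_files[i]);
    }
}
```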

For a static cubemap, this is straightforward. We supply the six images to our program and load them into the cubemap texture once, before rendering. During each draw call, we bind the cubemap texture and pass it to our fragment shader, which does the heavy lifting for us. In GLSL, a cubemap is declared as `uniform samplerCube cubemap;`, and the color of the object's fragment is obtained by calling `textureCube(cubemap, directionVector)`. (In GLSL 1.30 and later, `textureCube` is deprecated in favor of the overloaded `texture(cubemap, directionVector)`.)
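The `directionVector` is usually derived from the view direction and the surface normal. For a mirror-like surface it is the eye-to-fragment vector reflected about the normal, which GLSL provides as the built-in `reflect(I, N)`; for refraction, the built-in `refract(I, N, eta)` plays the same role. As a hedged illustration, the C sketch below reproduces the formula GLSL documents for `reflect`, I - 2 dot(N, I) N; `Vec3`, `dot3`, and `reflect3` are hypothetical helper names.

```c
#include <assert.h>

typedef struct { float x, y, z; } Vec3;

static float dot3(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

/* Same formula as GLSL's reflect(I, N): I - 2 * dot(N, I) * N.
 * i is the incident (eye-to-surface) direction; n must be normalized. */
static Vec3 reflect3(Vec3 i, Vec3 n) {
    float len2 = dot3(n, n);
    assert(len2 > 0.999f && len2 < 1.001f); /* the formula assumes a unit normal */

    float k = 2.0f * dot3(n, i);
    Vec3 r = { i.x - k * n.x, i.y - k * n.y, i.z - k * n.z };
    return r;
}
```

In a fragment shader, the equivalent lookup would be along the lines of `textureCube(cubemap, reflect(normalize(fragPos - cameraPos), normalize(normal)))`, where `fragPos`, `cameraPos`, and `normal` are assumed world-space inputs rather than names from this article.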

Generating a dynamic cubemap

A static cubemap can produce beautiful scenes, but only stationary ones, because its images are fixed when they are loaded. A dynamic cubemap, by contrast, shows the surrounding environment as it changes in real time, so we cannot simply preload images to display a moving environment on our reflective or refractive surface: that would take far too much memory, and we could never account for every possible state of a dynamic environment.

The solution is to generate the cubemap faces at run-time. We render the scene six times before the final render pass, once for each face of the cube. By placing the camera at the reflective object's position, pointing it along each positive and negative axis in turn, and setting the field of view (FOV) to 90 degrees with a square aspect ratio, each pass captures exactly what that face should show. We render these passes into a framebuffer object (FBO), which lets us write the results into textures instead of to the screen.
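The per-face camera setup can be sketched as two small tables plus a projection matrix. This C sketch assumes OpenGL's face order and the up-vector convention commonly used when rendering into `GL_TEXTURE_CUBE_MAP_POSITIVE_X + i` targets; `face_dir`, `face_up`, and `perspective90` are hypothetical names, and a real renderer would pass each direction and up vector to its own look-at function, with the camera at the reflective object's center.

```c
#include <assert.h>

typedef struct { float x, y, z; } Vec3;

/* View direction and up vector for each face, in +X, -X, +Y, -Y, +Z, -Z order. */
static const Vec3 face_dir[6] = {
    { 1, 0, 0 }, { -1, 0, 0 }, { 0, 1, 0 }, { 0, -1, 0 }, { 0, 0, 1 }, { 0, 0, -1 }
};
static const Vec3 face_up[6] = {
    { 0, -1, 0 }, { 0, -1, 0 }, { 0, 0, 1 }, { 0, 0, -1 }, { 0, -1, 0 }, { 0, -1, 0 }
};

/* Column-major, gluPerspective-style matrix for a 90-degree vertical FOV and
 * aspect ratio 1. f = 1 / tan(90 / 2 degrees) = 1 / tan(45 degrees) = 1, so a
 * point 45 degrees off the view axis lands exactly on the edge of the image. */
static void perspective90(float zn, float zf, float m[16]) {
    const float f = 1.0f;
    assert(zn > 0.0f && zf > zn);
    for (int i = 0; i < 16; ++i) m[i] = 0.0f;
    m[0]  = f;                            /* x scale: f / aspect, aspect = 1 */
    m[5]  = f;                            /* y scale */
    m[10] = (zf + zn) / (zn - zf);        /* depth remapping */
    m[11] = -1.0f;                        /* clip w = -z_eye */
    m[14] = 2.0f * zf * zn / (zn - zf);
}
```

With a 90-degree FOV and a square aspect ratio, each frustum's edges sit exactly 45 degrees off its axis, so the six views meet without gaps or overlap. In a real renderer, each pass would attach face `i` to the FBO with `glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_CUBE_MAP_POSITIVE_X + i, tex, 0)` before drawing.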

Once all six faces have been rendered, we can combine them into a cubemap texture and sample it in the fragment shader during the final pass, producing a fairly realistic reflective or refractive sphere.