PanoDreamer: Optimization-Based Single Image to 360 3D Scene With Diffusion

TL;DR: Create 360° 3D scenes from a single image.

Abstract

In this paper, we present PanoDreamer, a novel method for producing a coherent 360° 3D scene from a single input image. Unlike existing methods that generate the scene sequentially, we frame the problem as single-image panorama and depth estimation. Once the coherent panoramic image and its corresponding depth are obtained, the scene can be reconstructed by inpainting the small occluded regions and projecting them into 3D space. Our key contribution is formulating single-image panorama and depth estimation as two optimization tasks and introducing alternating minimization strategies to effectively solve their objectives. We demonstrate that our approach outperforms existing techniques in single-image 360° scene reconstruction in terms of consistency and overall quality.

We introduce a novel method for 360° 3D scene synthesis from a single image. Our approach generates a panorama and its corresponding depth in a coherent manner, addressing limitations in existing state-of-the-art methods such as LucidDreamer and WonderJourney. These methods sequentially add details by following a generation trajectory, often resulting in visible seams when looping back to the input image. In contrast, our approach ensures consistency throughout the entire 360° scene, as shown. The yellow bars show the regions corresponding to the input in each result.

Single-Image Panorama Generation

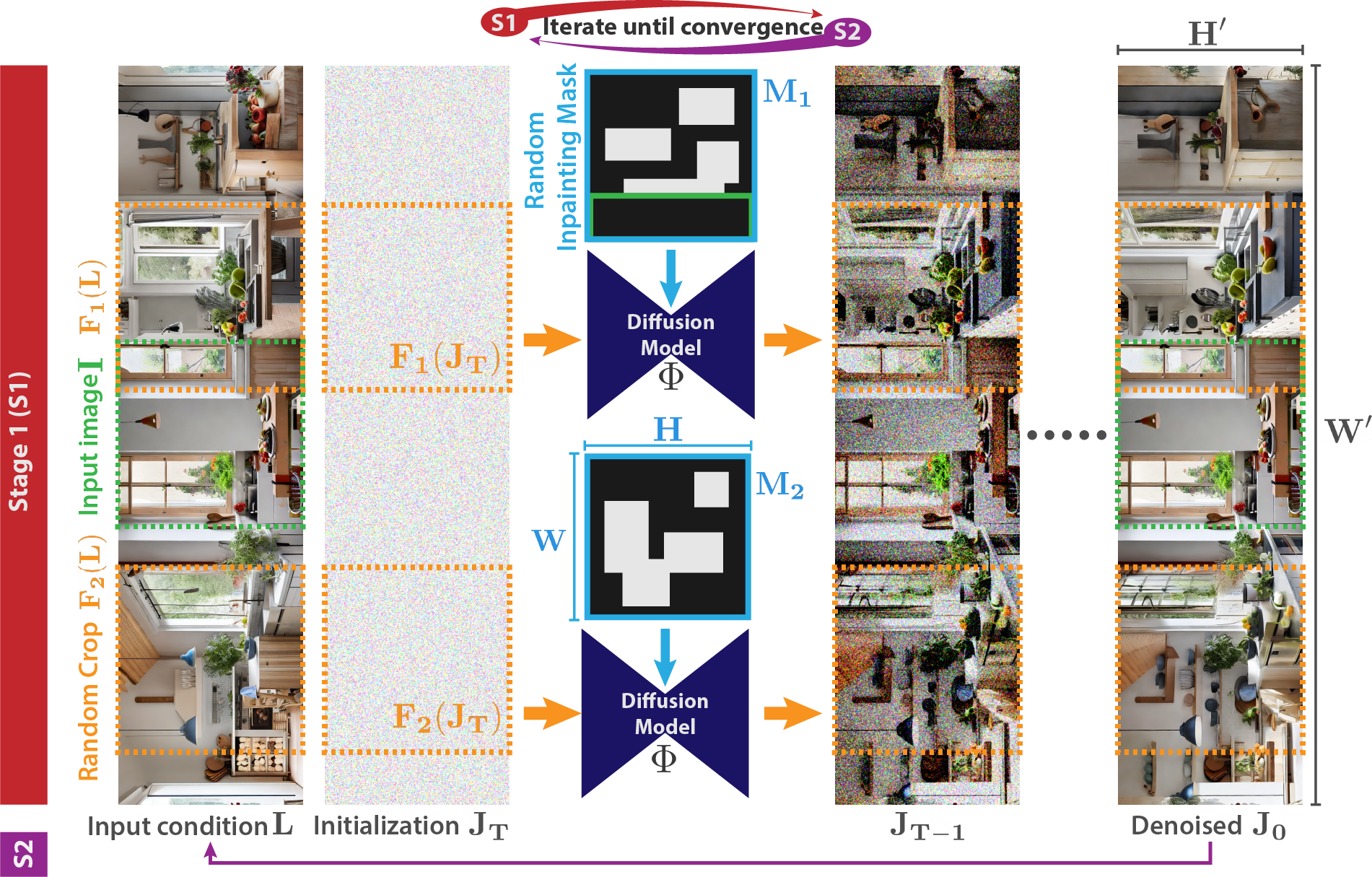

We address the problem of single-image panorama generation using an inpainting diffusion model, framing it as an optimization task solved through an alternating minimization strategy. In the first stage, we fix the input condition L and apply the diffusion model to overlapping crops of the image at the current time step. The outputs are then aggregated to produce the image at the next time step. This process is repeated until the fully denoised image J_0 is obtained. In the next stage, we replace the current input condition with J_0. These two stages are repeated until convergence. During the iterative process, the input texture at the center is progressively propagated outward.
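The two-stage loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the image is reduced to a single row of pixel values, `toy_denoise` is a hypothetical stand-in for the inpainting diffusion model, crop outputs are aggregated by plain averaging, and a fixed number of outer iterations replaces a convergence test. All function and parameter names are illustrative.

```python
import random


def crop_starts(width, crop, stride):
    """Start columns of overlapping crops that together cover `width` pixels."""
    starts = list(range(0, width - crop + 1, stride))
    if starts[-1] != width - crop:
        starts.append(width - crop)  # make sure the last pixels are covered
    return starts


def aggregate(crops, starts, width):
    """Average overlapping crop outputs back into one full-width row."""
    total = [0.0] * width
    count = [0] * width
    for start, crop in zip(starts, crops):
        for k, value in enumerate(crop):
            total[start + k] += value
            count[start + k] += 1
    return [t / n for t, n in zip(total, count)]


def toy_denoise(noisy, cond, t):
    """Hypothetical stand-in for one reverse-diffusion step on a crop.

    It pulls every pixel toward the mean of the crop's condition, so the
    result is fully determined by the condition once t reaches 1.
    """
    target = sum(cond) / len(cond)
    return [x + (target - x) / t for x in noisy]


def panorama_alt_min(cond, denoise, crop, stride, steps, outer_iters, seed=0):
    """Alternate between denoising under a fixed condition and updating it."""
    rng = random.Random(seed)
    width = len(cond)
    starts = crop_starts(width, crop, stride)
    j0 = list(cond)
    for _ in range(outer_iters):
        # Stage 1: the condition is fixed; denoise from pure noise down to J_0.
        j = [rng.gauss(0.0, 1.0) for _ in range(width)]
        for t in range(steps, 0, -1):
            outputs = [denoise(j[s:s + crop], cond[s:s + crop], t) for s in starts]
            j = aggregate(outputs, starts, width)
        j0 = j
        # Stage 2: the fully denoised result becomes the new condition.
        cond = j0
    return j0
```

Calling `panorama_alt_min([0.0] * 4 + [1.0] * 4 + [0.0] * 4, toy_denoise, crop=4, stride=2, steps=5, outer_iters=3)` runs the loop on a row whose center holds the "input" values. In this toy setting the overlap between crops is what lets information from the center reach neighboring regions across outer iterations.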

Wide-Image Comparisons to MultiDiffusion

Each comparison shows the MultiDiffusion output on the left and our MultiConDiffusion output on the right.

Panorama Depth Estimation

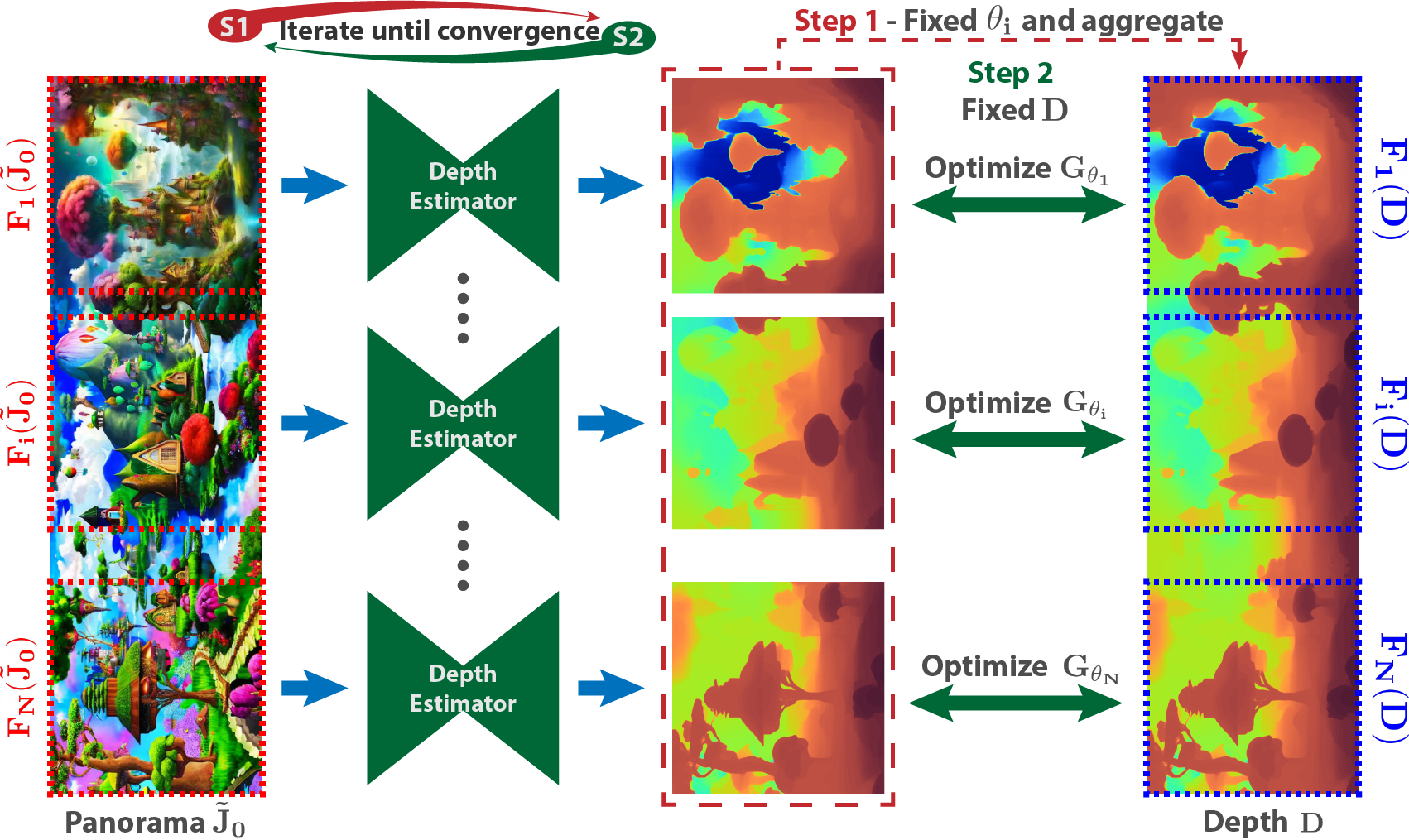

Similar to panorama generation, we use alternating minimization to align overlapping monocular depth map patches for the cylindrical panorama, enabling the estimation of a consistent 360° depth map. We first apply an existing depth estimator to the overlapping patches of the input image to obtain a set of patch depth estimates. We then perform optimization in two stages. In the first stage, the depth patches are adjusted using a piecewise linear function G_{θ_i}, and the adjusted patches are then aggregated to obtain the panoramic depth. In the second stage, we optimize the parameters θ_i of the parametric functions to match the adjusted patch depth estimates with the corresponding regions in the panoramic depth. These two stages are repeated until convergence.
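The alternation between aggregating adjusted patches and refitting their parameters can be illustrated with a small Python sketch. It makes several simplifying assumptions that are not taken from the paper: depth is a single row of values, the piecewise linear adjustment is replaced by a single affine segment (a scale and a shift per patch), aggregation is a plain average, the first patch is held fixed as an anchor to rule out the trivial solution where every patch collapses to a constant, and a fixed iteration count replaces a convergence test. All names are hypothetical.

```python
def apply_affine(patch, theta):
    """Adjust a depth patch with theta = (scale, shift)."""
    scale, shift = theta
    return [scale * d + shift for d in patch]


def aggregate(patches, starts, width):
    """Average overlapping patches into one full-width depth row."""
    total = [0.0] * width
    count = [0] * width
    for start, patch in zip(starts, patches):
        for k, value in enumerate(patch):
            total[start + k] += value
            count[start + k] += 1
    return [t / n for t, n in zip(total, count)]


def fit_affine(src, dst):
    """Least-squares (scale, shift) such that scale * src + shift ~ dst."""
    n = len(src)
    mean_src = sum(src) / n
    mean_dst = sum(dst) / n
    var = sum((x - mean_src) * (x - mean_src) for x in src)
    if var == 0.0:  # constant patch: only a shift can be estimated
        return 1.0, mean_dst - mean_src
    cov = sum((x - mean_src) * (y - mean_dst) for x, y in zip(src, dst))
    scale = cov / var
    return scale, mean_dst - scale * mean_src


def misalignment(patches, starts, thetas, pano):
    """Sum of squared differences between adjusted patches and the panorama."""
    err = 0.0
    for patch, start, theta in zip(patches, starts, thetas):
        adjusted = apply_affine(patch, theta)
        err += sum((a - pano[start + k]) ** 2 for k, a in enumerate(adjusted))
    return err


def align_depth_patches(patches, starts, width, iters=50):
    """Alternate between aggregating adjusted patches and refitting thetas."""
    thetas = [(1.0, 0.0)] * len(patches)
    for _ in range(iters):
        # Stage 1: adjust every patch and aggregate into the panoramic depth.
        adjusted = [apply_affine(p, th) for p, th in zip(patches, thetas)]
        pano = aggregate(adjusted, starts, width)
        # Stage 2: refit each patch to its panorama region (patch 0 is the anchor).
        thetas = [thetas[0]] + [
            fit_affine(p, pano[s:s + len(p)])
            for p, s in zip(patches[1:], starts[1:])
        ]
    adjusted = [apply_affine(p, th) for p, th in zip(patches, thetas)]
    return aggregate(adjusted, starts, width), thetas
```

Because each stage exactly minimizes the same squared misalignment over its own variables, the value returned by `misalignment` cannot increase from one iteration to the next in this sketch. A piecewise linear adjustment would add more parameters per patch but leave the alternation unchanged.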

3D Scene Comparisons to Other Methods (3DGS)

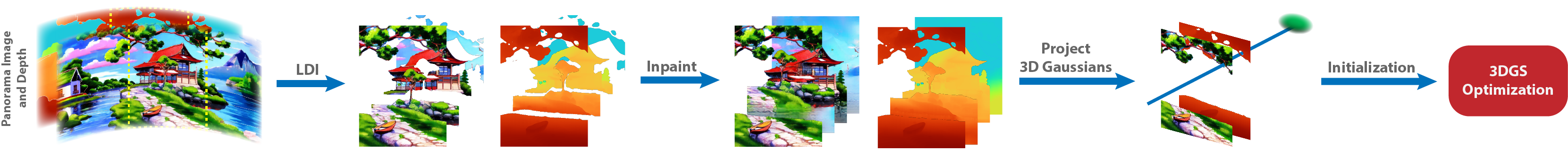

Given a cylindrical panorama and its corresponding depth, we first convert them to the LDI representation. We then inpaint both the image and depth layers. Note that while all images and depth maps are cylindrical, we show only a small crop for clarity. Next, we initialize the Gaussians by assigning a single Gaussian to each pixel and projecting them into 3D space. Finally, we perform 3DGS optimization to obtain the 3D representation.
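The initialization step, one Gaussian per pixel projected into 3D, can be sketched as a cylindrical unprojection. The following Python sketch covers only the geometry for a single layer and rests on assumptions that the text does not specify: the panorama spans a full 360° horizontally, the focal length in pixels equals the width divided by 2π, depth is measured as horizontal distance from the cylinder axis, and the y axis follows the image rows. The function name and conventions are illustrative.

```python
import math


def unproject_cylindrical(depth):
    """Map each pixel of a cylindrical depth map to a 3D point.

    depth: list of H rows, each a list of W depth values.
    Returns a row-major list of (x, y, z) tuples, one per pixel, which could
    serve as initial Gaussian centers.
    """
    height = len(depth)
    width = len(depth[0])
    focal = width / (2.0 * math.pi)  # assumed: full 360 degree horizontal coverage
    center_row = (height - 1) / 2.0
    points = []
    for v, row in enumerate(depth):
        h = (v - center_row) / focal  # height on the unit-radius cylinder
        for u, d in enumerate(row):
            phi = 2.0 * math.pi * u / width  # azimuth of this column
            # Scale the unit-cylinder point by depth (assumed radial distance).
            points.append((d * math.sin(phi), d * h, d * math.cos(phi)))
    return points
```

Under these conventions every pixel in the middle row of a constant-depth panorama lands on a circle around the vertical axis. A full pipeline would apply the same mapping to each inpainted LDI layer and attach the pixel color to the resulting Gaussian.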

In each comparison, the baseline method's render is shown on the left and the render of our method, PanoDreamer, on the right.

Acknowledgements

We thank Pedro Figueirêdo for proofreading the draft. The project was funded by Leia Inc. (contract #415290). Portions of this research were conducted with the advanced computing resources provided by Texas A&M High Performance Research Computing.